The Report That Didn't Answer the Question

Your CFO schedules an urgent meeting after your Q3 access review is completed.

She opens the email you sent last week containing the review results—a 47-page PDF with detailed tables showing every certification decision for every user across every application.

She asks: "Did we pass the review?"

You respond: "Yes, the completion rate was 94%."

She looks confused. "I don't know what that means. Did we find anything wrong? Are we at risk?"

You flip through pages looking for the revocation summary. She stops you: "Never mind. Just email me three bullets I can take to the board."

Your 47-page report has none of those bullets.

Later that afternoon, your external auditor emails: "Can you send evidence that Q3 access reviews were completed per SOX requirements? I need the certification decisions with timestamps, proof of remediation, and validation testing."

You forward him the same 47-page PDF.

He replies: "This doesn't match our testing procedures. Can you provide the specific evidence fields we need?"

You spend six hours reconstructing an audit package from scattered sources—emails, Jira tickets, Slack threads, screenshots from various systems.

Meanwhile, your IT Director messages: "What was our completion rate by department? I need to know which teams are struggling so we can fix the process."

That data exists somewhere in your spreadsheets, but it's not in the report.

One access review. Three stakeholders. Three completely different needs. One useless report that satisfies no one.

Most IT teams make this mistake. They create a single access review report and send it to everyone. It's too detailed for executives, too generic for IT operations, and poorly organized for auditors.

The solution isn't a better universal report. It's three different reports, each designed for a specific audience with specific questions.

In this guide, I'll show you exactly how to structure access review reports for three distinct audiences: executive leadership, IT operations teams, and auditors. These aren't theoretical frameworks—they're formats I've seen work at dozens of companies, from early-stage SaaS to public enterprises going through SOX audits.

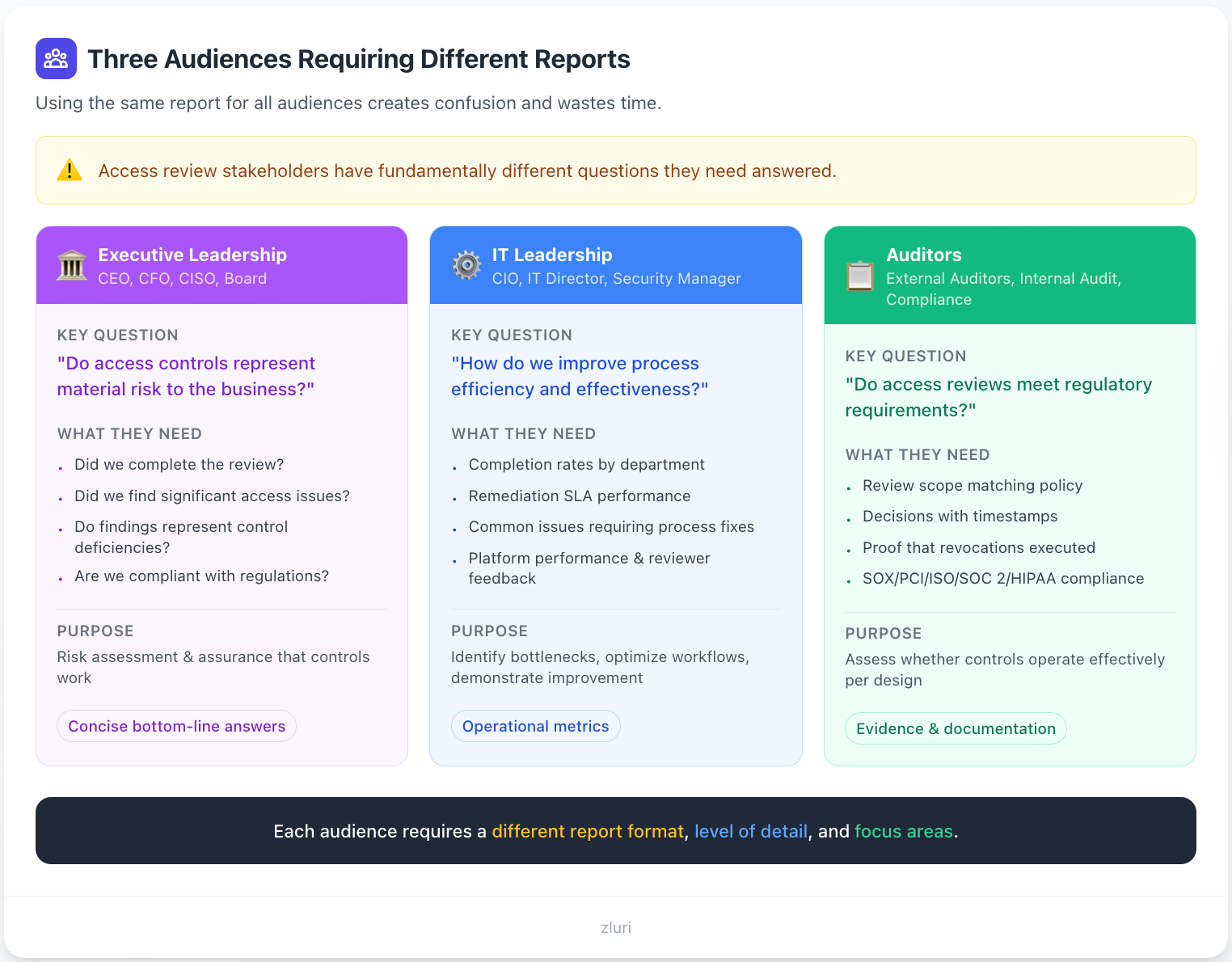

Understanding Your Three Audiences (And Why One Report Fails Everyone)

Before we dive into report formats, let's be clear about what each audience actually cares about. This isn't about dumbing things down or hiding information—it's about relevance.

Executive Leadership: Risk Assessment Over Details

Who they are: CEO, CFO, CISO, Board members

What they're really asking:

- Does this represent material risk to the business?

- Are we compliant with regulations that could fine us or shut us down?

- Do I need to allocate more budget or headcount to fix this?

- Can I sign off on this for the board/auditors/regulators?

What they absolutely don't care about:

- Individual certification decisions for each user

- Department-by-department completion percentages

- Platform performance metrics

- Step-by-step remediation procedures

How they read reports:

Executives skim. They look at headers, bold text, and visual indicators (✅ ⚠️ ❌). They'll spend 2-3 minutes on your report unless something jumps out as problematic. If it looks fine, they move on. If it looks concerning, they'll ask questions.

I've watched CFOs receive 47-page access review reports. They open the PDF, scroll through once, realize they can't find the answer they need, and close it. Then they call you for a verbal summary anyway. The report becomes a decoration.

Your executive report should answer these four questions in the first 30 seconds:

- Did we complete the review? (Yes/No)

- Did we find serious problems? (Yes/No)

- Are we compliant? (Yes/No)

- What action are we taking? (Brief list)

Everything else is optional detail they can request if interested.

IT Leadership: Process Intelligence Over Compliance Checkboxes

Who they are: CIO, IT Director, Security Manager, Identity Governance Team Lead

What they're really asking:

- Where are the bottlenecks in our process?

- Which departments or applications are problematic?

- Is our access governance platform delivering value?

- What should we fix for the next review?

- How do we compare to last quarter?

- Can we justify the platform cost at budget time?

What they don't care about:

- Executive-level risk narratives

- Detailed audit evidence formatting

- Compliance framework citations

- High-level strategy discussions

How they read reports:

IT leaders dig into metrics. They compare current performance to previous periods. They look for patterns that reveal systemic issues. They want data they can act on.

When I show IT directors completion rates by department, their first question is always: "Why is that department slower?" When I show them application-level revocation rates, they immediately ask: "What's causing that access sprawl?"

They're looking for process problems to fix, not just confirmation that the review happened.

Your IT operational report should identify:

- Bottlenecks: Which departments miss deadlines? Why?

- Problem applications: Which systems have excessive inappropriate access?

- Root causes: Why is access being revoked? (Role changes? Dormant accounts? Projects ending?)

- Automation opportunities: Which manual processes are slowing remediation?

- ROI justification: What's the time savings from your platform investment?

This is operational intelligence, not compliance documentation.

Auditors: Systematic Evidence Over Narrative Summaries

Who they are: External auditors, internal audit, compliance teams, regulatory examiners

What they're really asking:

- Does this evidence prove the control operated as designed?

- Can I verify the completeness and accuracy of this data?

- Are there gaps that represent control deficiencies?

- Does this meet the specific testing procedures for SOX/SOC 2/PCI/HIPAA?

- Can I trace this evidence back to authoritative sources?

What they don't care about:

- Your narrative about why things are improving

- Operational metrics for process optimization

- Executive risk assessments

- Your interpretation of what the data means

How they read reports:

Auditors follow testing procedures. They have a checklist of specific evidence items they need to see. They verify timestamps, check for completeness, confirm that remediation actually happened. They're looking for gaps and inconsistencies.

When an auditor asks for "access review evidence," they don't want a summary. They want:

- The complete population of access reviewed

- Certification decisions with timestamps and owner IDs

- Remediation tickets showing execution

- Validation testing proving revocation occurred

- Audit trail showing no retroactive modifications

If you can't provide this systematically, they'll note a control deficiency—not because your review didn't happen, but because you can't prove it happened the way you claim.

Your auditor evidence package should demonstrate:

- Control design: How is the control supposed to work?

- Scope completeness: Did you review everything you said you would?

- Execution evidence: Timestamped proof that activities occurred

- Remediation proof: Evidence that revoked access was actually removed

- Audit trail integrity: Tamper-evident logs showing systematic collection

This is forensic documentation, not business reporting.

The Three Report Formats: What Makes Each One Work

Now that you understand what each audience needs, let's look at the three report formats. I'll show you the principles that make each format effective, common mistakes to avoid, and when to use each one.

Format 1: Executive Summary (Actually One Page)

The core principle: Lead with answers, not data.

Most "executive summaries" fail because they're written like compressed technical reports. They start with scope, move through methodology, present findings, then eventually get to conclusions buried on page 3.

Executives read in reverse. They want the conclusion first, then supporting evidence only if they don't trust the conclusion or if it's concerning.

What an effective executive summary looks like:

The entire report fits on one page (occasionally two if absolutely necessary). It uses this structure:

Section 1: Bottom Line Assessment (3-4 lines)

✅ Review completed successfully / ⚠️ Issues found requiring attention / ❌ Control deficiency identified

Include completion rate, compliance status, and critical issue remediation status. This section answers "should I worry?"
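If you want the status line to mean the same thing every quarter, derive it from a few numbers with fixed rules instead of deciding it by feel. The Python sketch below shows one way to do that. The field names and the 90% completion threshold are hypothetical; the real thresholds should come from your own access review policy.

```python
from dataclasses import dataclass


@dataclass
class ReviewOutcome:
    completion_rate: float            # fraction of assigned reviews completed, 0.0 to 1.0
    compliance_scope_complete: bool   # every compliance-scoped review finished on time
    open_critical_issues: int         # critical findings not yet remediated


def bottom_line(outcome: ReviewOutcome, completion_target: float = 0.90) -> str:
    """Map review metrics to the one-line status at the top of the summary.

    The rules and the default target are illustrative assumptions, not a standard.
    """
    if not outcome.compliance_scope_complete:
        return "❌ Control deficiency identified"
    if outcome.open_critical_issues > 0 or outcome.completion_rate < completion_target:
        return "⚠️ Issues found requiring attention"
    return "✅ Review completed successfully"
```

With the numbers from the opening story (94% completion, compliance scope complete, no open critical issues), this returns the ✅ line; a 60% completion rate would drop it to ⚠️.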

Section 2: Key Findings (3-5 bullets maximum)

What did you find? Not every finding—just the ones that matter at an executive level:

- High-risk access (terminated employees still active, segregation of duties violations)

- Compliance impact (are we audit-ready?)

- Trends that indicate systemic issues (inappropriate access rate above benchmark)

Each finding should be one sentence. If you need more explanation, it belongs in the IT operational report, not here.

Section 3: Performance Trend (4-quarter view)

Show completion rates, revocation rates, and remediation time over the last 4 quarters. This gives executives context: Are we getting better or worse?

A single quarter metric means nothing. "14% revocation rate" could be good or terrible depending on your trend. If you were at 22% last quarter, that's an improvement. If you were at 8%, that's degradation.
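The comparison is simple enough to automate. A minimal sketch, assuming you store one value per quarter, oldest first, and that lower is better for revocation rate and remediation time but higher is better for completion rate:

```python
def trend_label(history: list[float], lower_is_better: bool = True) -> str:
    """Label the latest quarter relative to the one before it.

    history holds one value per quarter, oldest first (e.g. the last 4 quarters).
    """
    if len(history) < 2:
        return "no trend yet"
    previous, current = history[-2], history[-1]
    if current == previous:
        return "flat"
    improved = (current < previous) if lower_is_better else (current > previous)
    return "improving" if improved else "degrading"


def quarter_over_quarter(history: list[float]) -> list[float]:
    """Change between consecutive quarters, in percentage points."""
    return [round((later - earlier) * 100, 1) for earlier, later in zip(history, history[1:])]
```

For an illustrative (not real) revocation series of 25%, 22%, 18%, 14%, `trend_label` returns "improving" and `quarter_over_quarter` returns [-3.0, -4.0, -4.0], which is the context a bare "14%" lacks.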

Section 4: Recommendations (2-3 maximum)

Don't just report problems—tell executives what you're doing about them. Each recommendation should have:

- The action (implement RBAC)

- The timeline (next 30 days)

- The expected impact (reduce inappropriate access from 14% to <10%)

Section 5: Risk Assessment (simple table)

Show current state, target state, and action plan for 2-3 key risk areas. This gives the board confidence that you're managing risk actively.

What you deliberately exclude from executive reports:

- Detailed methodology descriptions

- Department-by-department breakdowns

- Application-level analysis

- Platform performance metrics

- Complete lists of all findings

- Process improvement discussions

- Cost/ROI analysis

- Individual certification decisions

All of that belongs in other reports. The executive summary is ruthlessly focused on "what leadership needs to know."

When to use executive format:

- Board presentations

- CFO/CEO quarterly updates

- Executive leadership meetings

- Investor or M&A due diligence

- Any situation where the audience needs confidence, not details

Format 2: IT Operational Report (Metrics That Drive Process Improvements)

The core principle: Surface operational intelligence, not just compliance metrics.

Most IT operational reports just confirm that the review happened: "Completion rate 94%, remediation complete, status green." That's not operational intelligence—that's a status update.

Effective operational reports reveal where the process works, where it breaks, and why. They turn raw review data into actionable insights about your access governance maturity.

What an effective IT operational report looks like:

This report is substantially longer than the executive summary—typically 5-8 pages. It uses this structure:

Section 1: Completion Performance by Department

Don't just report the overall completion rate (94%). Break it down by department and show average days to complete.

This immediately reveals process issues:

- Legal department at 0% completion → need escalation process

- Executive reviews taking 7+ days → need to schedule earlier for travel

- Finance completing in 4 days → study their workflow for best practices
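Producing this breakdown from raw review records takes only a grouping step. A sketch assuming a hypothetical export where each assigned review is a (department, completed, days_to_complete) tuple; your platform's export will have its own field names.

```python
from collections import defaultdict
from statistics import mean
from typing import Optional

# One assigned review: (department, completed?, days to complete or None if not done).
# Hypothetical export layout; map your platform's fields onto it.
Review = tuple[str, bool, Optional[int]]


def completion_by_department(reviews: list[Review]) -> dict[str, dict]:
    grouped = defaultdict(list)
    for review in reviews:
        grouped[review[0]].append(review)

    report = {}
    for dept, items in sorted(grouped.items()):
        done = [days for _, completed, days in items if completed]
        known_days = [d for d in done if d is not None]
        report[dept] = {
            "completion_rate": len(done) / len(items),
            # Average only over finished reviews; None if nobody in the department finished.
            "avg_days": mean(known_days) if known_days else None,
        }
    return report
```

A department with a completion_rate of 0.0 is the "Legal at 0%" case that needs an escalation path; a high avg_days with full completion is the executive-travel pattern.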

Section 2: Certification Decision Breakdown with Root Cause Analysis

Show the summary (85% approved, 14% revoked, 1% modified) but don't stop there. Dig into WHY access was revoked:

- 43% due to role changes → access not removed during internal transfers

- 29% due to dormant accounts → need auto-disable policy

- 20% due to project completion → temporary access not tracked

Each revocation reason reveals an upstream process gap. The real value is identifying these gaps so you can fix them.
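Tallying revocation reasons is a counting exercise once each revocation carries a reason code. The reason codes and the mapping to upstream gaps below are hypothetical labels taken from the examples above, not a standard taxonomy.

```python
from collections import Counter

# Hypothetical reason codes mapped to the upstream process gap each one suggests.
UPSTREAM_GAP = {
    "role_change": "access not removed during internal transfers",
    "dormant": "no auto-disable policy for inactive accounts",
    "project_ended": "temporary access not tracked",
}


def revocation_reasons(reasons: list[str]) -> list[tuple[str, float, str]]:
    """Return (reason, percent of all revocations, suggested gap), largest share first."""
    counts = Counter(reasons)
    total = sum(counts.values())
    return [
        (reason, round(100 * n / total, 1), UPSTREAM_GAP.get(reason, "investigate"))
        for reason, n in counts.most_common()
    ]
```

Reasons without a known mapping are labeled "investigate" so they surface in the report rather than disappearing into an "other" bucket.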

Section 3: Application-Level Analysis

Which applications have the highest revocation rates? This tells you where access governance is weakest.

When you see AWS Console at 36% inappropriate access, that's not a review problem—that's a provisioning problem. Developers are getting standing admin access when they only need temporary elevated permissions.

When you see GitHub at 24% inappropriate access, that's a lifecycle problem. People join project teams, projects end, but they're never removed from the org.

This analysis turns access review findings into access governance strategy.
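Ranking applications by revocation rate works from the same decision log. A sketch, assuming each decision is a hypothetical (application, decision) pair where decision is "approve", "revoke", or "modify":

```python
from collections import defaultdict


def revocation_rate_by_app(decisions: list[tuple[str, str]]) -> list[tuple[str, float]]:
    """Return (application, share of reviewed access revoked), highest rate first."""
    totals = defaultdict(int)
    revoked = defaultdict(int)
    for app, decision in decisions:
        totals[app] += 1
        if decision == "revoke":
            revoked[app] += 1
    rates = {app: revoked[app] / totals[app] for app in totals}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)
```

With illustrative counts of 9 revocations out of 25 AWS Console accounts and 6 out of 25 GitHub accounts, AWS Console ranks first at 0.36 and GitHub second at 0.24, matching the pattern described above.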

Section 4: Privileged Access Deep Dive

Privileged accounts deserve separate analysis. Show revocation rates by privilege type (AWS admin, database admin, Salesforce admin, Xero admin, etc.).

If 3 AWS admin accounts belonged to contractors whose contracts ended, that's an offboarding control gap. If 1 Salesforce admin account belonged to someone who transferred to marketing, that's a role change detection gap.

Section 5: Remediation Performance Analysis

This is where you measure execution, not just planning:

- SLA compliance by priority level

- Average remediation time

- Late remediations with root cause

- Remediation speed by application type

Legacy apps requiring manual remediation create delays. If 4 legacy systems (10% of apps) account for 60% of late remediations, that tells you exactly where to focus integration efforts.
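Both SLA compliance and the legacy-app concentration fall out of the remediation ticket data. A sketch with hypothetical SLA targets (1, 7, and 30 days by priority) and a hypothetical `legacy` flag marking apps that still need manual remediation; substitute your own policy values.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical SLA targets in days; use the ones from your remediation policy.
SLA_DAYS = {"critical": 1, "high": 7, "medium": 30}


@dataclass
class Remediation:
    app: str
    priority: str          # must be a key of SLA_DAYS
    days_to_complete: int
    legacy: bool           # True if the app still needs manual remediation


def is_late(r: Remediation) -> bool:
    return r.days_to_complete > SLA_DAYS[r.priority]


def sla_compliance_by_priority(items: list[Remediation]) -> dict[str, float]:
    totals, on_time = defaultdict(int), defaultdict(int)
    for r in items:
        totals[r.priority] += 1
        if not is_late(r):
            on_time[r.priority] += 1
    return {p: on_time[p] / totals[p] for p in totals}


def late_share_from_legacy(items: list[Remediation]) -> float:
    """Fraction of late remediations that came from legacy (manual) apps."""
    late = [r for r in items if is_late(r)]
    if not late:
        return 0.0
    return sum(r.legacy for r in late) / len(late)
```

A late_share_from_legacy of 0.6 is the "60% of late remediations" signal; comparing it with the share of apps flagged legacy shows how disproportionate the problem is.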

Section 6: Platform Performance Metrics

If you're using an access governance platform, this section justifies the investment:

- Platform uptime

- User satisfaction scores

- Support ticket volume

- Mobile usage rates

This operational data helps you optimize the tool and justify renewal at budget time.

Section 7: Cost & ROI Analysis

IT leadership needs to justify platform investments. Calculate:

- Total time investment (hours)

- Labor cost (hours × blended rate)

- Platform cost

- Cost per user reviewed

- ROI vs. manual process

- Quarterly and annual savings

When you can show "we save 244 hours per quarter using the platform versus manual process," that's a compelling ROI story.
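Writing the arithmetic down keeps the claim reproducible at budget time. A sketch, assuming you can estimate staff hours for both the platform-based and the manual process and allocate a quarterly share of the platform's cost. ROI here is net quarterly savings divided by the quarterly platform cost, and annual figures assume a quarterly review cadence.

```python
from dataclasses import dataclass


@dataclass
class ReviewCost:
    hours: float           # total staff hours spent on one review cycle
    blended_rate: float    # fully loaded hourly labor cost
    platform_cost: float   # platform cost allocated to this quarter (0 for manual)

    @property
    def total(self) -> float:
        return self.hours * self.blended_rate + self.platform_cost


def roi_summary(platform: ReviewCost, manual: ReviewCost, users_reviewed: int) -> dict:
    quarterly_savings = manual.total - platform.total  # already net of platform cost
    return {
        "hours_saved": manual.hours - platform.hours,
        "cost_per_user": platform.total / users_reviewed,
        "quarterly_savings": quarterly_savings,
        "annual_savings": quarterly_savings * 4,  # assumes four reviews per year
        "roi": quarterly_savings / platform.platform_cost if platform.platform_cost else None,
    }
```

With hypothetical inputs of 56 hours on the platform versus 300 hours manually at a $100 blended rate, a $10,000 quarterly platform cost, and 400 users reviewed, this yields 244 hours saved, $39 per user reviewed, $14,400 net quarterly savings, and an ROI of 1.44.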

Section 8: Reviewer Experience Metrics

Better reviewer experience produces better data quality:

- Average time per review

- Completion rates

- Support request volume

- Satisfaction scores

Track these quarter-over-quarter. If average review time dropped from 38 minutes to 32 minutes, your UI improvements are working.

Section 9: Recommendations for Next Quarter

Based on all the data above, provide specific, actionable recommendations:

- Process improvements (implement RBAC, automate role change detection)

- Platform enhancements (group-based reviews, mobile app)

- Training improvements (enhanced decision criteria, recorded videos)

- Expected outcomes (reduce inappropriate access from 14% to <10%)

What you deliberately exclude from IT operational reports:

- Executive risk narratives

- Detailed audit evidence documentation

- Compliance framework citations

- Individual certification decisions

- Auditor evidence formatting

When to use IT operational format:

- IT leadership meetings

- Process improvement planning

- Platform evaluation and ROI justification

- Quarterly operational reviews

- Team retrospectives

- Budget justification discussions

Format 3: Auditor Evidence Package (Systematic Documentation)

The core principle: Anticipate testing procedures, not just compliance questions.

Many companies treat auditor evidence packages as an afterthought. The auditor asks for evidence, you scramble to gather it from scattered sources, you assemble a ZIP file, and you hope it's what they need.

This approach fails because you're reacting to auditor requests instead of anticipating their testing procedures.

Effective evidence packages are structured around how auditors actually test controls, not how you think about your internal process.

What an effective auditor evidence package looks like:

This is the longest of the three formats—typically 15-25 pages plus exhibits. It uses this structure:

Section 1: Control Objective

Define what the control is supposed to achieve. Auditors need to understand the control objective before they can test whether it works.

Include:

- Control ID and description

- Control owner

- Control frequency

- Regulatory requirements satisfied (SOX 404, PCI DSS 7.1, HIPAA 164.308, SOC 2 CC6.2)

Section 2: Review Scope

List every application reviewed, its classification (financial, CDE, ePHI, business-critical), review frequency, user count, and compliance scope.

This demonstrates completeness. Auditors will compare this scope to your documented policy to verify you reviewed everything you said you would.
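You can run the same comparison before the auditor does. A minimal sketch, assuming you can list in-scope applications from your policy and reviewed applications from the campaign as plain name sets:

```python
def scope_gaps(policy_scope: set[str], reviewed: set[str]) -> dict[str, set[str]]:
    """Compare the applications your policy says are in scope with what was reviewed."""
    return {
        # In scope per policy but never reviewed: a likely completeness finding.
        "missing_from_review": policy_scope - reviewed,
        # Reviewed but absent from the policy list: often means the policy list is stale.
        "not_in_policy": reviewed - policy_scope,
    }
```

Both sets should be empty before the package goes out; anything in missing_from_review needs either a completed review or a documented exception.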

Section 3: Control Design Documentation

Document how the control is designed to work:

- Policy reference

- Process steps

- Roles and responsibilities

This section proves the control is designed properly before auditors test execution.

Section 4: Control Operating Effectiveness Evidence

This is the heart of the evidence package. Provide systematic proof that the control operated as designed:

4.1 Review Launch Documentation

- Launch notification email with delivery confirmation

- Platform configuration screenshots with dates

- Deadline documentation

4.2 Certification Owner Decisions

- Complete certification decision log (CSV export with all 3,214 records)

- Sample certification records showing user, application, owner, decision, timestamp, justification

The key point: timestamps MUST be system-generated by the platform database, not typed into spreadsheet cells anyone could edit. System-generated timestamps backed by an audit trail are what make this evidence tamper-evident.

4.3 Remediation Execution Evidence

- Remediation ticket log from Jira

- Sample tickets showing creation date, completion date, days to complete, assigned team

- SLA compliance metrics

4.4 Remediation Validation Testing

- Validation test methodology

- Sample size (10% of revoked access)

- Test results (attempted authentication for revoked users)

- Screenshots proving access was denied

This proves that revoked access was actually removed, not just marked "complete" in a ticket.
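How you pick the sample matters as much as its size, because auditors will want to know it wasn't cherry-picked. One approach, sketched under the assumption that each revoked entitlement has a stable ID, is a seeded random sample: anyone can re-run the selection and get the same list. The ID format and seed are hypothetical; record whatever seed you actually use in the validation methodology.

```python
import math
import random


def validation_sample(revoked_ids: list[str], percent: int = 10, seed: int = 2024) -> list[str]:
    """Pick a reproducible random sample of revoked access for validation testing.

    Sorting first makes the result independent of export order; the fixed seed
    lets an auditor reproduce the exact same sample.
    """
    if not revoked_ids:
        return []
    population = sorted(revoked_ids)
    size = max(1, math.ceil(len(population) * percent / 100))
    return random.Random(seed).sample(population, size)
```

Rounding up and keeping a minimum of one means small revocation populations still get tested.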

4.5 Audit Trail Documentation

- Complete platform audit trail export

- Events captured (campaign creation, reviewer access, decisions, remediation, validation)

- Audit trail integrity confirmation (no retroactive modifications)

This is what separates audit-ready evidence from scattered documentation: the platform captured everything as it occurred, rather than someone reconstructing it from memory afterward.
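Platforms differ in how they make audit trails tamper-evident, and the sketch below is not any particular vendor's implementation. It illustrates one common technique, hash chaining: each entry stores a SHA-256 hash of the previous entry's hash plus its own content, so editing any past event makes verification fail. A chain can still be recomputed wholesale by someone with write access, so in practice the latest hash is also recorded somewhere outside your control (for example, handed to the auditor).

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    # sort_keys gives a canonical serialization, so equal events hash equally.
    payload = prev_hash + json.dumps(event, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: list[dict], event: dict) -> None:
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev_hash": prev_hash, "hash": _digest(prev_hash, event)})


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; any edited, inserted, or deleted entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Retroactively changing a "revoke" decision to "approve" in a stored entry causes `verify_chain` to return False, which is the property auditors mean by "no retroactive modifications."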

Section 5: Completion Metrics

Show completion rates by compliance scope:

- SOX ITGC (Financial): 100%

- PCI DSS (CDE): 100%

- HIPAA (ePHI): 100%

- SOC 2 (In-Scope): 100%

Auditors care most about compliance-scoped systems. If those are 100% complete, that's the critical finding.

Section 6: Control Deficiency Assessment

This is your conclusion:

- Testing conclusion (no deficiencies identified)

- Control design adequacy

- Operating effectiveness assessment

- Basis for conclusion

- Observations

Auditors will either agree with your assessment or identify gaps. Make it easy for them to concur.

Section 7: Management Representation

Executive certification that the evidence is complete and accurate. CISO and CFO signatures.

This satisfies audit requirements and adds credibility.

Section 8: Evidence Exhibits

Attach all supporting documentation:

- Launch emails

- Platform screenshots

- Complete decision logs

- Remediation tickets

- Validation results

- Audit trail exports

- Policy documents

- Training materials

What you deliberately exclude from auditor evidence packages:

- Executive risk narratives

- Operational improvement discussions

- Cost/ROI analysis

- Process optimization recommendations

- Trend analysis beyond what's required for testing

When to use auditor evidence format:

- External audits (SOX, SOC 2, ISO)

- Regulatory examinations

- Internal audit reviews

- Pre-audit preparation

- Compliance assessments

The Scattered Evidence Problem: Why Most Teams Fail Audits

Let me tell you what happens at most companies when auditors ask for evidence.

Your SOC 2 auditor emails: "Can you show me evidence that Q2 access reviews completed per your policy requirements?"

You think: "Sure, we did the review. We have evidence." Then you start gathering:

- Excel spreadsheet with certification decisions (saved in SharePoint, but which folder?)

- Email thread where you notified certification owners (scattered across sent folder)

- Jira tickets showing remediation completion (requires custom query you have to remember)

- Slack messages confirming validation testing (search "access review" in #security channel)

- Calendar invites for follow-up meetings (check Outlook)

- Screenshots of completed reviews (somewhere in your Downloads folder)

You spend six hours reconstructing the evidence package from six different systems. You create a new folder, copy relevant emails, export Jira reports, screenshot Slack conversations, and compile everything into a ZIP file.

You send it to the auditor.

The auditor reviews your package and responds: "This evidence exists, but it's not systematically collected. How do I know this is complete? How do I verify the timestamps are accurate? Where's the tamper-evident audit trail?"

Your evidence is real. But your evidence collection process is not audit-ready.

The fundamental problem is that you treat audit evidence as something you gather after the fact, rather than something that's systematically generated as the control operates.

When access reviews run through modern governance platforms, evidence generation is automatic:

Every action is captured systematically. Every timestamp is tamper-evident. Every piece of evidence is traceable to authoritative sources.

When the auditor asks for evidence, you export the complete audit trail from the platform. Done.

This is the difference between:

Reactive evidence gathering: "Let me find those emails and compile that spreadsheet"

vs.

Systematic evidence generation: "Here's the complete audit trail export from our platform"

The latter passes audits. The former creates control deficiency findings even when the control actually operated.

How to Make This Work at Your Company

Here's how to implement multi-format reporting for your next access review:

Step 1: Identify your three audiences

Who receives each report type?

- Executive summary → CFO, CISO, CEO, board

- IT operational report → CIO, IT Director, Security Manager, Identity Team Lead

- Auditor evidence package → External auditors, internal audit, compliance team

Step 2: Set up templates for each format

Don't write these from scratch every quarter. Create templates once, then populate them with data from each review.

Download our ready-to-use templates at the end of this article.
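The "same data, three views" idea is easy to see in code. A sketch with a hypothetical `ReviewData` record and three small renderers; real templates would pull the same fields from your platform or spreadsheet rather than from a hand-built object.

```python
from dataclasses import dataclass


@dataclass
class ReviewData:
    quarter: str
    completion_rate: float
    revocation_rate: float
    critical_open: int
    dept_completion: dict[str, float]
    decision_log_path: str  # hypothetical path to the exported decision log


def executive_view(d: ReviewData) -> list[str]:
    status = "✅" if d.critical_open == 0 else "⚠️"
    return [
        f"{status} {d.quarter} access review: {d.completion_rate:.0%} complete",
        f"Inappropriate access revoked: {d.revocation_rate:.0%} of reviewed access",
        f"Critical issues still open: {d.critical_open}",
    ]


def it_ops_view(d: ReviewData) -> list[str]:
    # Slowest departments first so bottlenecks sit at the top.
    ranked = sorted(d.dept_completion.items(), key=lambda kv: kv[1])
    return [f"{dept}: {rate:.0%} complete" for dept, rate in ranked]


def auditor_view(d: ReviewData) -> list[str]:
    return [
        f"Control period: {d.quarter}",
        f"Exhibit: certification decision log ({d.decision_log_path})",
    ]
```

Each renderer reads the same record, so the three reports can never disagree about the underlying numbers; only the selection and ordering change per audience.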

Step 3: Decide who prepares each report

Typically:

- Security team prepares all three (same data, different presentations)

- IT operations helps with operational metrics

- CISO reviews executive summary before distribution

Step 4: Brief each audience on what they'll receive

Tell executives: "You'll get a one-page summary with bottom-line assessment. If you need details, ask."

Tell IT teams: "You'll get operational metrics for process improvement."

Tell auditors: "Evidence packages will be ready when you request them."

Step 5: Generate reports after each review

Make this part of your standard process:

- Complete access review

- Generate executive summary → send within 1 week

- Generate IT operational report → send within 1 week

- Generate evidence package → archive, send to auditors on request

Step 6: Iterate based on feedback

After the first few reports, ask each audience:

- Is this helpful?

- What's missing?

- What could we remove?

Refine your templates based on their feedback.

What Success Looks Like

You'll know multi-format reporting is working when:

Executives stop asking follow-up questions: They get answers to their questions immediately, without needing to schedule clarification meetings.

IT teams start implementing improvements: They use operational metrics to identify bottlenecks and fix them, not just acknowledge that the review happened.

Auditors say "this is exactly what we needed": They don't request additional evidence or note documentation gaps.

Your workload decreases: Once templates are set up, generating three reports takes less time than fielding questions about one confusing report.

Stakeholders actually read your reports: You know they're reading when they reference specific findings in conversations.

That's the difference between reporting that documents compliance and reporting that drives value.

Get Started

Your next access review completes in a few weeks. Before you write the report, ask yourself: who's reading this?

Then write that report, not some generic document that tries to be everything and ends up being useful to no one.

The difference between effective and ineffective reporting is simple: does your audience get answers to the questions they actually care about?

- Executives: "Are we at risk? Are we compliant?"

- IT teams: "Where are the bottlenecks? What should we fix?"

- Auditors: "Does this evidence prove the control works?"

Three audiences. Three reports. Right information for the right people.

See how modern platforms auto-generate these reports → Book a Demo

Access governance platforms like Zluri can automatically generate all three report formats from your review data, with systematic evidence collection built in.

Related Reading

→ New to access reviews? Start with our User Access Review Checklist

→ Looking to improve your process? See Access Review Best Practices

→ Preparing for an audit? Read our SOX Compliance Access Reviews guide

FAQs About Multi-Format Reporting

Can't I just send the IT operational report to everyone? It has all the information.

No. Executives won't read it—it's too long and too detailed. Auditors don't want operational metrics—they want systematic evidence organized for testing. Each audience needs information formatted for their specific use case.

Our executive team likes details. Should I make the executive summary longer?

If your executives genuinely read 8-page reports, you're lucky. But test this by sending them a one-page summary and see if they request more detail. Most won't. They'll appreciate the brevity.

We're a company with just 200 people. Do we really need three different reports?

If you have executives, an IT team, and auditors, yes. The size of your company doesn't change what each audience needs. A startup CFO still wants a bottom-line assessment, not operational metrics.

How do we generate three reports without tripling our workload?

Modern access governance platforms can auto-generate these reports from your review data. You're not writing three reports manually—you're configuring three report templates that pull from the same source data.

Should we create these reports before or after the review?

Set up the templates before the review starts. Then generating reports becomes a click, not a project.

What if we use spreadsheets for access reviews instead of a platform?

You'll need to manually compile data into each report format. This is doable but time-consuming. The evidence package will be particularly challenging because you'll need to manually assemble evidence from multiple sources.

Can we share the IT operational report with executives if they ask?

Absolutely. The point isn't to hide information—it's to give each audience the right starting point. If executives want operational details, share them.

Do auditors actually care about this level of documentation?

Yes. I've seen companies receive control deficiency findings not because their review process was bad, but because they couldn't provide systematic evidence. Auditors need to verify controls through documentation, not trust.

How often should we generate these reports?

Executive summary: After every review (quarterly for compliance-scoped systems)

IT operational report: After every review (for process improvement)

Auditor evidence package: After every review (archive for when auditors request it)

What if our metrics are terrible? Do we still send executive reports?

Yes, but frame them honestly. If completion rate is 60%, say "⚠️ Review incomplete - 40% of reviews missed deadline" and explain the action plan. Bad news delivered clearly is better than bad news hidden in 47 pages.
