Your quarterly access review is complete. 847 users certified across 23 applications. IT revoked 127 inappropriate access grants. You exported the evidence package for your SOX auditor.

Three weeks later, she asks: "Did you validate that manager hierarchies were current before launching reviews?"

You didn't check.

"How did you confirm that revocations were actually executed across all integrated systems?"

You show her the Jira tickets marked "complete."

"Did anyone test that users can no longer authenticate?"

No validation testing occurred.

Finding: Material weakness in access controls.

Your review was 90% complete. The missing 10%—pre-launch validation and post-remediation verification—failed your audit.

This isn't about effort. Your team worked hard. It's about execution gaps. It's about the validation steps that seem optional until the auditor asks for evidence, the data quality checks you skip to save time, the testing nobody remembers to do after remediation completes.

Organizations conducting manual reviews report forgotten validation steps in roughly two-thirds of failed audits. Every access review touches 40-50 critical execution checkpoints across five phases. Missing any checkpoint creates compliance gaps, evidence deficiencies, or operational failures.

In this guide, we'll share every critical checkpoint, organized by the five execution phases where reviews typically fail. We'll show you what goes wrong when teams skip steps, and how to structure systematic execution that passes audits.

The Five Phases Where Reviews Fail

Complete access review cycles contain five distinct phases:

Phase 1: Pre-Launch Preparation (1-2 weeks before) ensures foundational data accuracy and stakeholder readiness. Reviews launched with stale manager hierarchies or incomplete application inventories create rework and evidence gaps.

Phase 2: Review Launch (Day 1) establishes scope, assigns owners, communicates expectations. Poor launch communication causes 40-60% completion rates instead of 85-95%.

Phase 3: In-Flight Management (Days 2-14) monitors progress, sends reminders, escalates overdue certifications. Passive monitoring = low completion. Active management = compliance.

Phase 4: Remediation Execution (Days 15-21) implements approved changes and validates successful revocation. Incomplete validation creates the most common audit finding.

Phase 5: Post-Review Activities (Days 22-30) generates evidence, analyzes metrics, communicates results. Poor documentation means recreating evidence during audits.

Each phase contains critical checkpoints that get skipped under deadline pressure. Let's examine where execution breaks down.

Phase 1: Pre-Launch Preparation

The Manager Hierarchy Problem

You assume HR data updates automatically. In reality, promotions take weeks to reach downstream systems, managers change but hierarchies don't, and departed employees remain "active" until payroll processes their exit.

One financial services company launched Q2 reviews using January manager hierarchies. Three managers had been promoted, two had left, and one department had been reorganized. Result: 127 users assigned to the wrong reviewers, 18 orphaned accounts with no reviewer, and 6 days of rework.

The forgotten validation step: Checking if manager data reflects current org structure before assigning reviews.

Critical pre-launch checkpoints:

✓ Verify HR data updated within last 7 days

✓ Confirm all managers in hierarchy still employed

✓ Validate department assignments reflect recent reorgs

✓ Identify orphaned accounts without assigned reviewers

Most platforms pull manager data at launch time. If your source data is stale, your entire review assignment is wrong. You need a systematic pre-launch validation that confirms data accuracy before you send 300 managers their review assignments.
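For teams validating by hand, the pre-launch checkpoints above can be scripted against an HR export. What follows is a minimal Python sketch, not any platform's implementation: the `HrRecord` fields and the `validate_hierarchy` function are hypothetical names, and the 7-day threshold is taken from the checklist.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class HrRecord:
    # Hypothetical shape of one row in an HR export; adjust to your own fields.
    user_id: str
    manager_id: Optional[str]
    active: bool
    last_updated: date


def validate_hierarchy(records, today, max_age_days=7):
    """Group active users by the problem that would break review assignment."""
    by_id = {r.user_id: r for r in records}
    cutoff = today - timedelta(days=max_age_days)
    issues = {"stale": [], "departed_manager": [], "orphaned": []}

    for r in records:
        if not r.active:
            continue  # departed users are handled by offboarding, not by this check
        if r.last_updated < cutoff:
            issues["stale"].append(r.user_id)
        if r.manager_id is None or r.manager_id not in by_id:
            # No reviewer can be derived (this also catches the top of the org chart).
            issues["orphaned"].append(r.user_id)
        elif not by_id[r.manager_id].active:
            issues["departed_manager"].append(r.user_id)
    return issues
```

Any non-empty list is a reason to hold the launch until the records are corrected or a reviewer is assigned manually.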

Zluri syncs continuously with HR systems (such as BambooHR) and IdP/SSO providers (such as Google Workspace, Entra ID, and Okta), a notable difference from other user access review solutions, and flags data quality issues before launch. Even with manual processes, the checkpoint is simple: verify that your org chart matches reality as of the last week, not the last quarter.

The 30-Day Data Gathering Death March

For manual processes, extracting current access data consumes weeks:

Week 1: Email 23 application owners requesting user lists. Seven respond with CSVs. Four say "I'll get that to you." Twelve don't respond.

Week 2: Follow up with non-responders. Three more replies. Two are on vacation. Seven are still silent. You escalate to their managers.

Week 3: Chase the holdouts. One sends a PDF screenshot (unusable). Two send the wrong format.

Week 4: You have most data, but five app owners sent 30-45 day old exports. You request refreshed data. More delays.

Reviews that should take 14 days consume 30+ because data gathering is serial, manual, and dependent on humans who have other priorities.

The forgotten checkpoint: Ensure data is current within 48 hours, not 45 days.

When access data is 30 days old, reviewers certify access that already changed. Users terminated three weeks ago still appear active. New hires aren't visible. Your review certifies a reality that no longer exists.

Critical data extraction checkpoints:

✓ Export user lists from all in-scope applications

✓ Capture current role/group assignments

✓ Include last login dates (identify dormant accounts)

✓ Verify data freshness within 48 hours of launch

Platforms with direct integrations extract data on a regular sync cycle (typically every 24-48 hours). Manual exports require systematic coordination: specific data format requirements, firm deadlines, and consequences for non-compliance.
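With manual exports, the freshness checkpoint is easy to automate once each file's export time is recorded. A small sketch, assuming you keep a mapping of application name to export timestamp (the `stale_exports` name and the input shape are illustrative):

```python
from datetime import datetime, timedelta


def stale_exports(exported_at, launch, max_age_hours=48):
    """Return the apps whose export is older than max_age_hours at launch time."""
    cutoff = launch - timedelta(hours=max_age_hours)
    return sorted(app for app, ts in exported_at.items() if ts < cutoff)


# Example: two of three exports are too old to launch with.
launch = datetime(2025, 6, 2, 9, 0)
exports = {
    "Salesforce": datetime(2025, 6, 1, 10, 0),
    "GitHub": datetime(2025, 4, 20, 12, 0),
    "NetSuite": datetime(2025, 5, 31, 8, 0),
}
print(stale_exports(exports, launch))
```

Every application in the returned list needs a refreshed export before reviewers see its data.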

Without process enforcement, you're still chasing app owners while your review deadline expires.

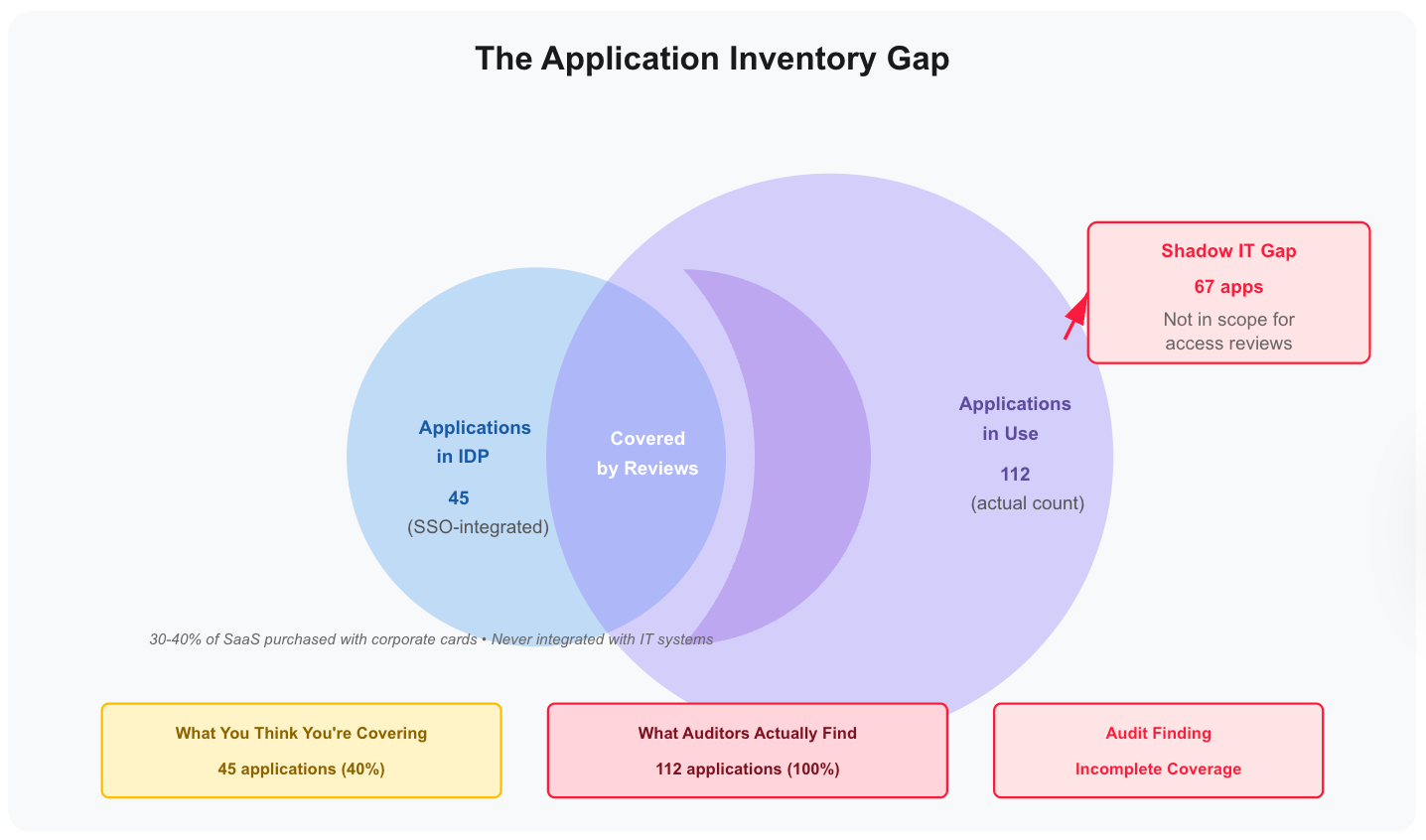

The Application Inventory Gap

Your policy says "review all applications with sensitive data." You launch reviews covering 80 applications visible in your IDP.

During the audit, the auditor asks about applications purchased with corporate cards and never integrated with SSO. You discover 32 applications that were never in scope.

Finding: Incomplete coverage for access review requirements.

The forgotten checkpoint: Verifying your application inventory is complete before defining scope.

An estimated 30-40% of SaaS applications are purchased with corporate cards and never integrated with IT systems. They don't appear in your IDP, your CASB doesn't see them, and your review process ignores them.

Critical inventory validation checkpoints:

✓ List all applications in review scope

✓ Verify completeness (not just what's in IDP/SSO)

✓ Confirm application owners assigned

✓ Document why any applications are excluded

An IGA platform with a built-in discovery engine identifies shadow IT automatically. Manual processes require detective work: expense report analysis, browser extension monitoring, conversations with procurement.

Either way, incomplete inventory = audit findings. Your scope must cover what policy requires, not just what's convenient to review.
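If you do the detective work manually, the comparison itself is a set difference. A sketch, assuming you can produce one list of application names from your IDP and another from expense or procurement data (function and parameter names are hypothetical):

```python
from typing import Iterable, Set


def _normalize(names: Iterable[str]) -> Set[str]:
    # Compare case-insensitively and ignore stray whitespace from spreadsheets.
    return {n.strip().lower() for n in names}


def inventory_gaps(idp_apps, discovered_apps, documented_exclusions=()):
    """Apps found in spend data that are neither in scope nor formally excluded."""
    return (
        _normalize(discovered_apps)
        - _normalize(idp_apps)
        - _normalize(documented_exclusions)
    )
```

Each name returned either joins the review scope or gets a documented exclusion; nothing should remain unexplained. Real vendor names rarely match this cleanly, so expect to maintain an alias list alongside it.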

Phase 2: Review Launch

Launch failures typically involve access problems or communication gaps.

The platform access crisis: You click "launch." Send notifications. Thirty percent bounce due to spam filters. Fifteen managers can't log in due to SSO issues. Ten didn't receive notifications at all.

You discover this on day 10, when completion rates lag. Too late.

Critical launch checkpoints:

✓ Verify notification delivery (check bounce rates immediately)

✓ Test certification owner logins (sample 5-10 users)

✓ Confirm mobile access works if supported

✓ Resolve access blockers within first hour

Launch isn't "set and forget." It's "launch and immediately verify everything worked."

The communication gap happens when notifications are generic: "You have access reviews due November 30." Managers don't know what this means, why it matters, how long it takes, who to ask for help.

Clear launch communication includes: review purpose, compliance importance, time commitment estimate (30-60 minutes), deadline, support contact, direct link to review portal.

Company-wide visibility matters. If you notify only certification owners, their team members are confused when asked questions.

Post in #general: "Access review season—managers will need 60 minutes this week to certify team access. Questions go to security@company.com."

Phase 3: In-Flight Management

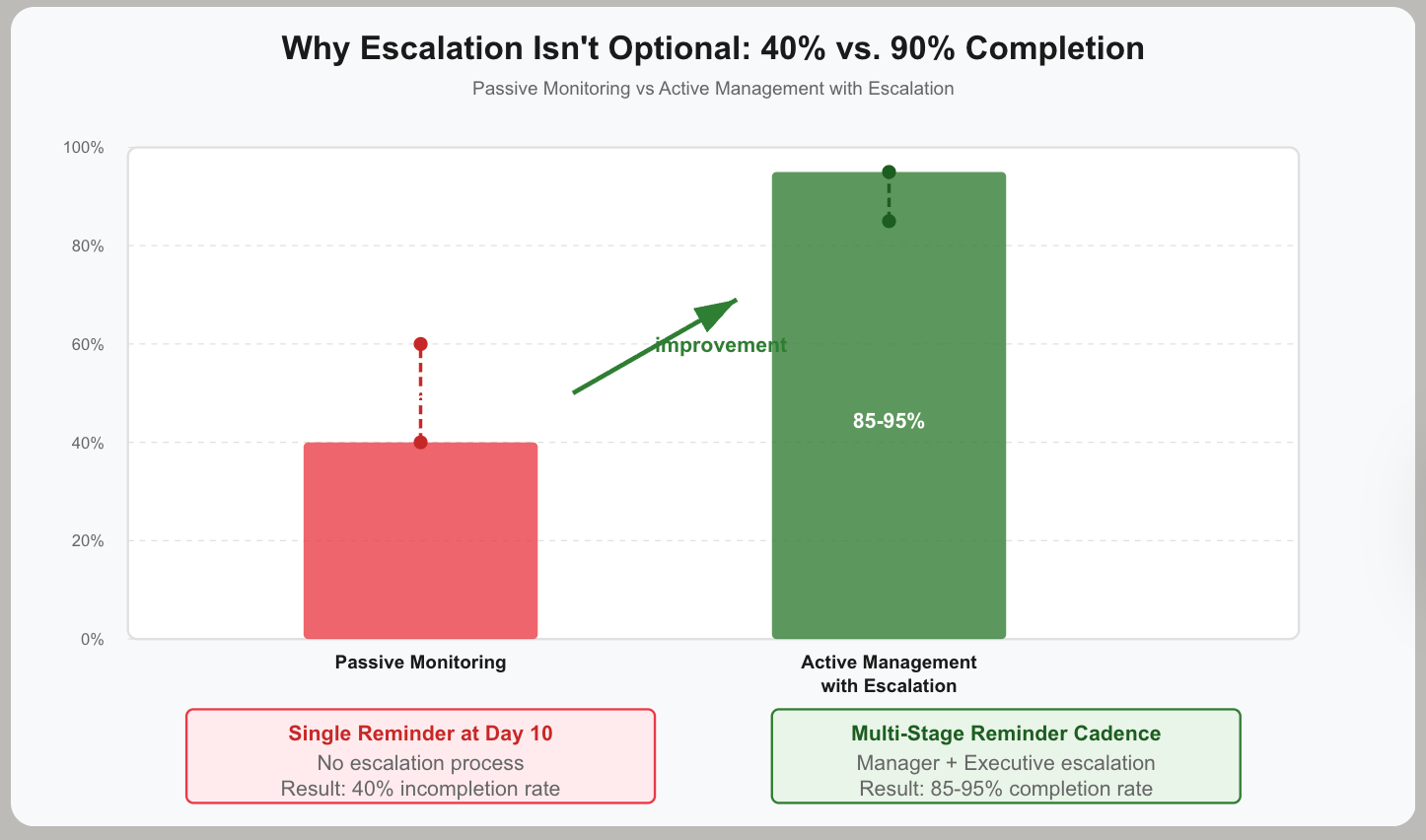

This phase separates 90% completion from 40% completion.

The Rubber-Stamping Problem

One healthcare company celebrated 93% completion rates. Then they analyzed decisions: 38 of 47 certification owners approved 100% of access with zero revocations.

Completion rate: 93%

Completion effectiveness: 19%

High completion masked that 81% of reviewers rubber-stamped everything without evaluation.

The forgotten checkpoint: Monitoring decision patterns during reviews, not just completion rates.

Critical in-flight monitoring:

✓ Track daily completion rates

✓ Flag reviewers approving 100% (possible rubber-stamping)

✓ Flag reviewers revoking >50% (possible over-correction)

✓ Review escalations requiring security team input

When you see someone approve 147 of 147 access grants in 4 minutes, that's not careful evaluation—that's "click approve until this goes away."

Automated platforms can flag suspicious patterns in real-time. Manual processes require daily spot-checking: export decisions, sort by timestamp, identify reviews completed impossibly fast.

Then intervene: "I noticed you completed Sarah's review in 3 minutes with zero changes. Can we walk through a few access grants together to confirm the evaluation process?"
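The daily spot-check can be reduced to a few rules over exported decisions. The sketch below is illustrative only: the `ReviewerStats` fields are hypothetical, and the 5-seconds-per-item threshold is an assumption you should tune to your own reviews.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReviewerStats:
    # Hypothetical per-reviewer summary built from an exported decision log.
    reviewer: str
    approved: int
    revoked: int
    minutes_spent: float


def flag_reviewer(stats: ReviewerStats, min_seconds_per_item: float = 5.0) -> List[str]:
    """Return the suspicious patterns found in one reviewer's decisions."""
    total = stats.approved + stats.revoked
    if total == 0:
        return []  # nothing decided yet, so nothing to judge
    flags = []
    if stats.revoked == 0:
        flags.append("approved_100_percent")
    if stats.revoked / total > 0.5:
        flags.append("revoked_over_50_percent")
    if stats.minutes_spent * 60 / total < min_seconds_per_item:
        flags.append("too_fast")
    return flags


# The example from the text: 147 of 147 approved in 4 minutes.
print(flag_reviewer(ReviewerStats("director", approved=147, revoked=0, minutes_spent=4)))
```

A flag is a prompt for a conversation, not proof of rubber-stamping; some teams genuinely have clean access.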

The Reminder Strategy That Actually Works

Teams typically send one reminder at day 10. People miss it or ignore it.

Effective reminder cadence:

- Day 3: Friendly nudge ("don't forget, 11 days remaining")

- Day 7: More urgent tone ("deadline approaching, support available")

- Day 10: Clear escalation warning ("will notify your manager tomorrow")

- Day 11: Manager escalation (notify certification owner's manager)

- Day 14: Executive escalation (notify department heads and CISO)

Multiple reminders at different intervals catch people when they're ready to act. A single reminder assumes everyone checks email consistently and acts immediately.
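To put the cadence on a calendar, you can compute the dates from the launch day. A minimal sketch, assuming launch counts as Day 1 (as in the phase overview) and that reminders fall on calendar days rather than business days; the labels are shorthand for the steps listed above:

```python
from datetime import date, timedelta

# (day number, action) pairs taken from the cadence in the text.
CADENCE = [
    (3, "friendly nudge"),
    (7, "urgent reminder"),
    (10, "escalation warning"),
    (11, "manager escalation"),
    (14, "executive escalation"),
]


def reminder_schedule(launch_day: date):
    """Return (date, action) pairs; launch is Day 1, so Day N is N - 1 days later."""
    return [(launch_day + timedelta(days=day - 1), action) for day, action in CADENCE]


for when, action in reminder_schedule(date(2025, 6, 2)):
    print(when.isoformat(), action)
```

Creating these as scheduled sends or calendar entries at launch removes the need to remember them mid-cycle.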

The escalation conversation: "We don't want to bother executives." Result: 40% completion.

Escalation isn't optional. It's how you enforce accountability. Escalate to the CTO once that an engineering director hasn't completed reviews, and you rarely need to do it again: next quarter, that director completes early.

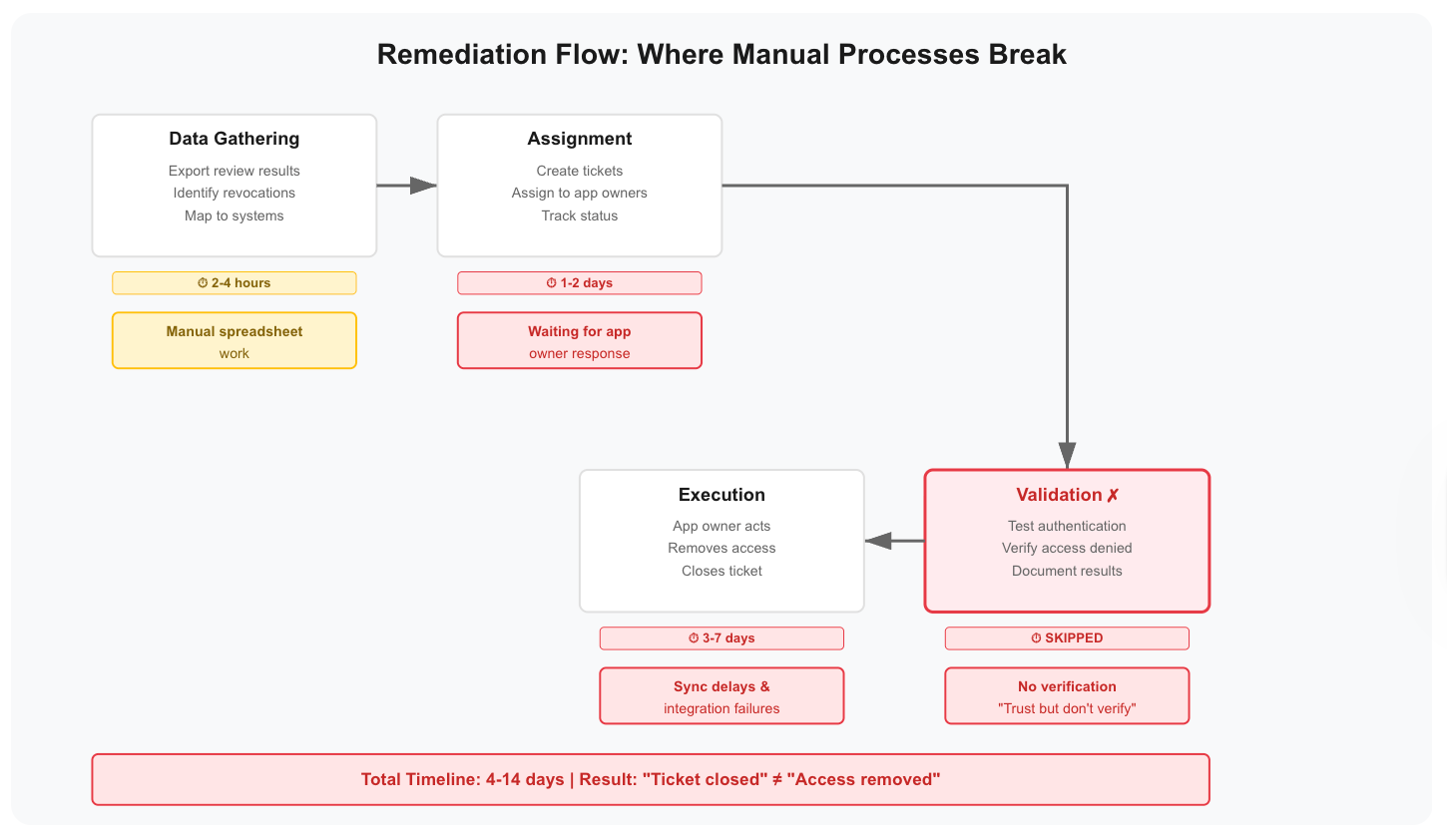

Phase 4: Remediation Execution

This is where reviews die.

The Manual Remediation Death Spiral

Review complete. 127 access grants marked for revocation.

Apps with SSO integration: IT revokes in Okta, access automatically propagates. Takes 2 hours for 60 users. Done.

Apps without SSO: Manual revocation required.

Log into Salesforce admin panel → find user → change profile → save

Log into GitHub → find user → remove from organization → confirm

Log into AWS Console → find IAM user → delete access keys → remove from groups

Log into NetSuite → find user → set inactive → confirm

Contact vendor support for apps without admin access

Document completion in Jira

For 67 users across 15 non-integrated apps: 8-12 hours of manual work.

If an app admin is on vacation, if credentials don't work, if the vendor takes 3 days to respond—remediation stretches to weeks.

Six weeks after your review closed, 40% of revocations haven't executed. Not because IT is lazy. Because manual remediation at scale is impossible.

Critical remediation checkpoints:

✓ Prioritize by risk (terminated employees, privileged access first)

✓ Set SLAs (critical: 24 hours, high: 5 days, medium: 10 days)

✓ Assign tickets to specific technical teams

✓ Track incomplete remediations with completion plans

Platforms with provisioning integrations execute revocations automatically across connected applications. Manual processes require systematic ticket management with actual enforcement of SLAs.

Without SLA enforcement, remediation never completes. "We'll get to it" becomes "we forgot about it" becomes an audit finding.
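Enforcement starts with knowing which tickets have breached. The sketch below uses the SLA windows from the checklist; the `RevocationTicket` shape is hypothetical, and treating the windows as calendar time rather than business time is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# SLA windows from the checklist above.
SLA = {
    "critical": timedelta(hours=24),
    "high": timedelta(days=5),
    "medium": timedelta(days=10),
}


@dataclass
class RevocationTicket:
    # Hypothetical ticket shape; map these fields from your tracker's export.
    key: str
    priority: str  # "critical", "high", or "medium"
    opened: datetime
    closed: Optional[datetime] = None


def breached(ticket: RevocationTicket, now: datetime) -> bool:
    """True if the ticket closed after its due time, or is still open past it."""
    due = ticket.opened + SLA[ticket.priority]
    finished = ticket.closed if ticket.closed is not None else now
    return finished > due


def sla_compliance(tickets: List[RevocationTicket], now: datetime) -> float:
    """Share of tickets that have not breached their SLA (0.0 to 1.0)."""
    if not tickets:
        return 1.0
    return sum(not breached(t, now) for t in tickets) / len(tickets)
```

Running this daily during the remediation window gives you both the escalation list (breached tickets) and the SLA compliance figure for your metrics.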

The Validation Gap That Failed Your Audit

Your Jira tickets show "Status: Complete" for all 127 revocations.

The auditor asks: "How did you validate that revocations were actually executed?"

You explain: "IT marked the tickets complete."

"Did anyone test that users can no longer authenticate?"

No.

Finding: No evidence that access revocations were validated.

The forgotten checkpoint: Testing that revocations worked, not trusting that tickets closed.

Integration failures happen. Sync delays occur. Nested groups create backdoor access. Clicking "revoke" doesn't guarantee that access was removed.

Critical validation checkpoints:

✓ Verify group removal propagated to downstream apps

✓ Test sample accounts (attempt authentication)

✓ Confirm access denied messages appear

✓ Check for backdoor access paths

✓ Document validation results with screenshots

A 10% sample is typically acceptable to auditors. For 127 revocations, that means testing 13: attempt to authenticate as each sampled user. If access still works, your remediation failed.

Platforms with real-time validation testing can verify revocations executed successfully. Manual processes require systematic sampling: pick random revoked users, test authentication, document results.

Evidence not documented = evidence doesn't exist for audit purposes.
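For manual sampling, picking the accounts with a seeded random generator makes the selection both unbiased and reproducible for the auditor. A minimal sketch using Python's standard library; the function name and ticket IDs are illustrative:

```python
import random
from typing import List, Optional


def sample_revocations(
    revocation_ids: List[str], sample_percent: int = 10, seed: Optional[int] = None
) -> List[str]:
    """Pick a random sample, rounding the sample size up to a whole account."""
    # Integer ceiling division avoids floating-point surprises in the sample size.
    size = -(-len(revocation_ids) * sample_percent // 100)
    return random.Random(seed).sample(revocation_ids, size)


ids = [f"REV-{n}" for n in range(1, 128)]  # 127 revocations, as in the example
picked = sample_revocations(ids, seed=2025)
print(len(picked))  # 13
```

Record the seed and the resulting list with your validation screenshots so the sample can be regenerated on request.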

Phase 5: Post-Review Activities

The Scattered Evidence Nightmare

Your SOC 2 auditor asks: "Show me evidence that Q2 access reviews completed per policy."

You compile:

- Excel spreadsheet with certification decisions (SharePoint)

- Email thread notifying certification owners (sent folder)

- Jira tickets showing remediation (custom query required)

- Slack messages confirming validation (search #security channel)

- Screenshots of completed reviews (somewhere in Downloads folder)

Six hours reconstructing evidence from scattered sources.

The auditor reviews your package: "This evidence exists, but it's not systematically collected. How do I know this is complete? Where's the tamper-evident audit trail?"

Your evidence is real. Your collection process isn't audit-ready.

The forgotten checkpoint: Configuring automatic evidence collection before the review, not manually compiling during the audit.

Critical evidence checkpoints:

✓ Export all certification decisions with timestamps

✓ Include remediation tickets with completion dates

✓ Attach validation testing results

✓ Capture audit trail logs

✓ Store in retention system (7 years for SOX)

Automated platforms generate complete evidence packages with tamper-evident audit trails. Manual processes require systematic collection: define evidence requirements upfront, capture as execution happens, organize for audit access.

Generate the complete package immediately post-review while everything's fresh. Don't wait for the auditor to request it.

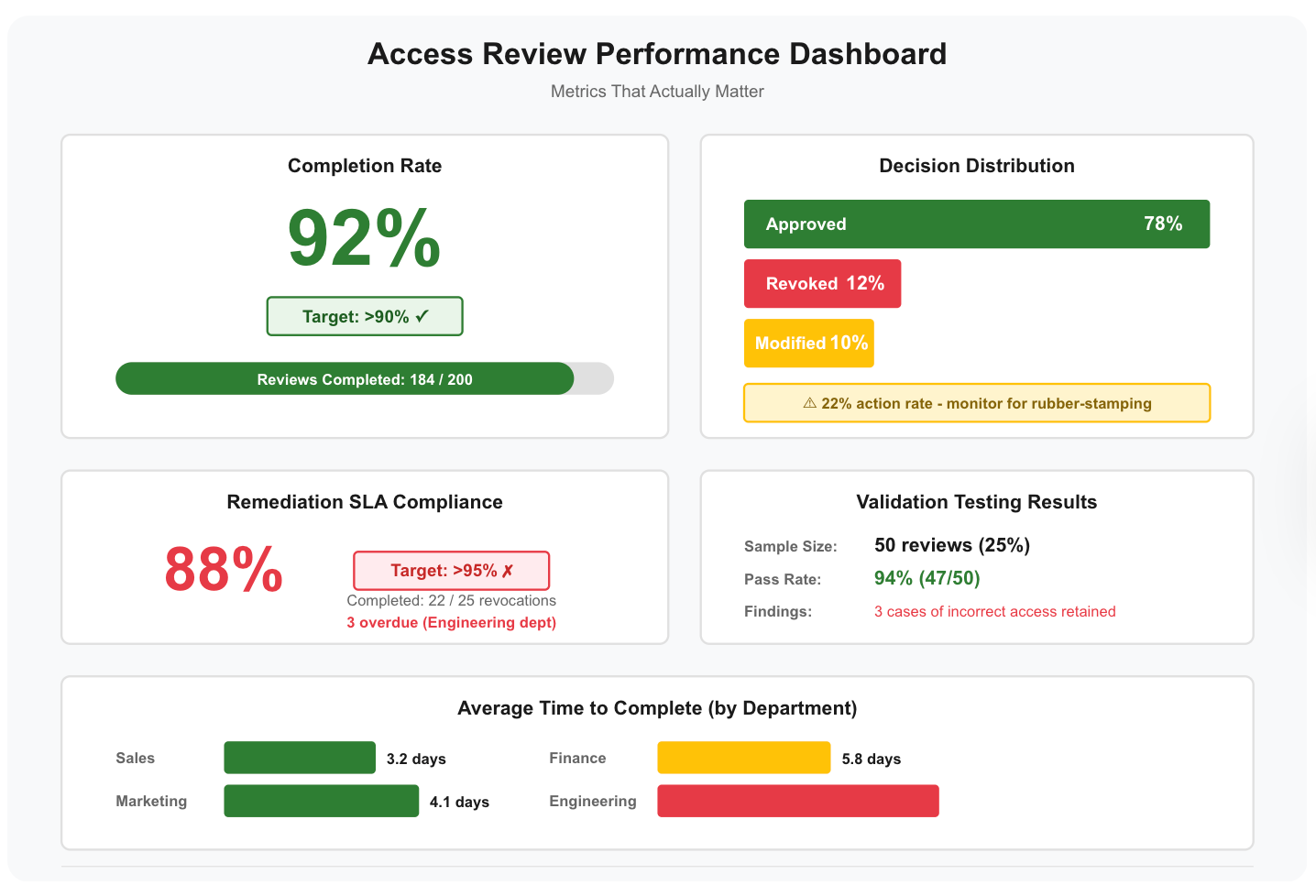

The Metrics That Actually Matter

Most teams celebrate completion rate and stop.

Metrics to track:

- Completion rate (target: >90%)

- Decision distribution (approve/revoke/modify percentages)

- Remediation SLA compliance (target: >95%)

- Validation testing results (sample size and pass rate)

- Time to complete (by department, to identify struggling areas)

Completion rate measures participation. Decision distribution reveals rubber-stamping. Remediation SLA reveals execution effectiveness. Validation results prove controls work.

90% completion with 60% remediation = 54% actual effectiveness.

Measure both halves.
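The arithmetic behind that figure, as a sketch: the 54% is 90% completion multiplied by 60% remediation, taking the 60% from the earlier scenario in which 40% of revocations had not executed six weeks after close. The function name is illustrative, and multiplying the two rates is a simplification that treats them as independent.

```python
def effective_coverage(completion_rate: float, remediation_rate: float) -> float:
    """Share of in-scope access that was both reviewed and actually remediated."""
    for rate in (completion_rate, remediation_rate):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rates must be between 0 and 1")
    return completion_rate * remediation_rate


print(f"{effective_coverage(0.90, 0.60):.0%}")  # 54%
```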

What Actually Prevents Audit Findings

Access reviews fail at three predictable points:

1. Stale data at launch → Reviews are assigned to the wrong people and certify outdated access

2. No decision quality monitoring → Rubber-stamped reviews pass without adding value

3. Missing validation → Revocations don't execute, but tickets are marked complete

The difference between passing and failing audits comes down to validation steps that seem optional:

- Did you verify manager hierarchies were current before launch?

- Did you monitor decision patterns to catch rubber-stamping?

- Did you test that revocations actually executed?

Your review was 90% complete. The missing 10% failed your audit.

Systematic Execution vs. Heroic Effort

This article describes 47 critical checkpoints across five phases. No one remembers all of them.

You need systematic execution: a checklist your team follows every cycle, with assigned owners, due dates, and completion tracking.

Put the checklist in your workflow tool. If you run reviews in Jira, create tickets for each checkpoint. If you use Asana, build a template. Track progress daily during active phases.

After your first cycle, review what you missed. Add forgotten steps while the pain is fresh. Each cycle should expose fewer surprises.

The automation question: How many hours does your team spend on manual execution each quarter?

Data extraction: 40 hours

Manager hierarchy validation: 6 hours

Review monitoring and reminders: 20 hours

Remediation coordination: 30 hours

Validation testing: 12 hours

Evidence compilation: 8 hours

Total: 116 hours per quarter = 464 hours per year

That's 12 weeks of full-time work annually for one person.
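To run the same calculation with your own numbers, a short sketch. The hour values below are the ones from the list above; the 40-hour work week is an assumption.

```python
# Hours per quarter from the breakdown above; replace with your own estimates.
HOURS_PER_QUARTER = {
    "data extraction": 40,
    "manager hierarchy validation": 6,
    "review monitoring and reminders": 20,
    "remediation coordination": 30,
    "validation testing": 12,
    "evidence compilation": 8,
}


def annual_effort(hours_per_quarter, hours_per_week=40):
    """Return (hours per quarter, hours per year, full-time weeks per year)."""
    quarterly = sum(hours_per_quarter.values())
    annual = quarterly * 4
    return quarterly, annual, annual / hours_per_week


quarterly, annual, weeks = annual_effort(HOURS_PER_QUARTER)
print(quarterly, annual, round(weeks, 1))  # 116 464 11.6
```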

Platforms like Zluri automate data extraction (continuous sync), manager hierarchy validation (HR integration), notification management (workflow engine), remediation execution (provisioning integration), validation testing (real-time verification), and evidence generation (automatic audit trail).

Automation doesn't eliminate the checkpoints—it ensures they happen systematically without human memory or manual coordination.

Calculate your hours. Then decide if manual execution makes sense at your scale.

See how automated platforms enforce checklist compliance → Book Demo
