Your former sales manager left four months ago. She still has admin access to Salesforce, full read/write to your customer database, and owner permissions on 12 Slack channels containing deal negotiations. Your quarterly access review is in two weeks.

Sounds like a problem? It is.

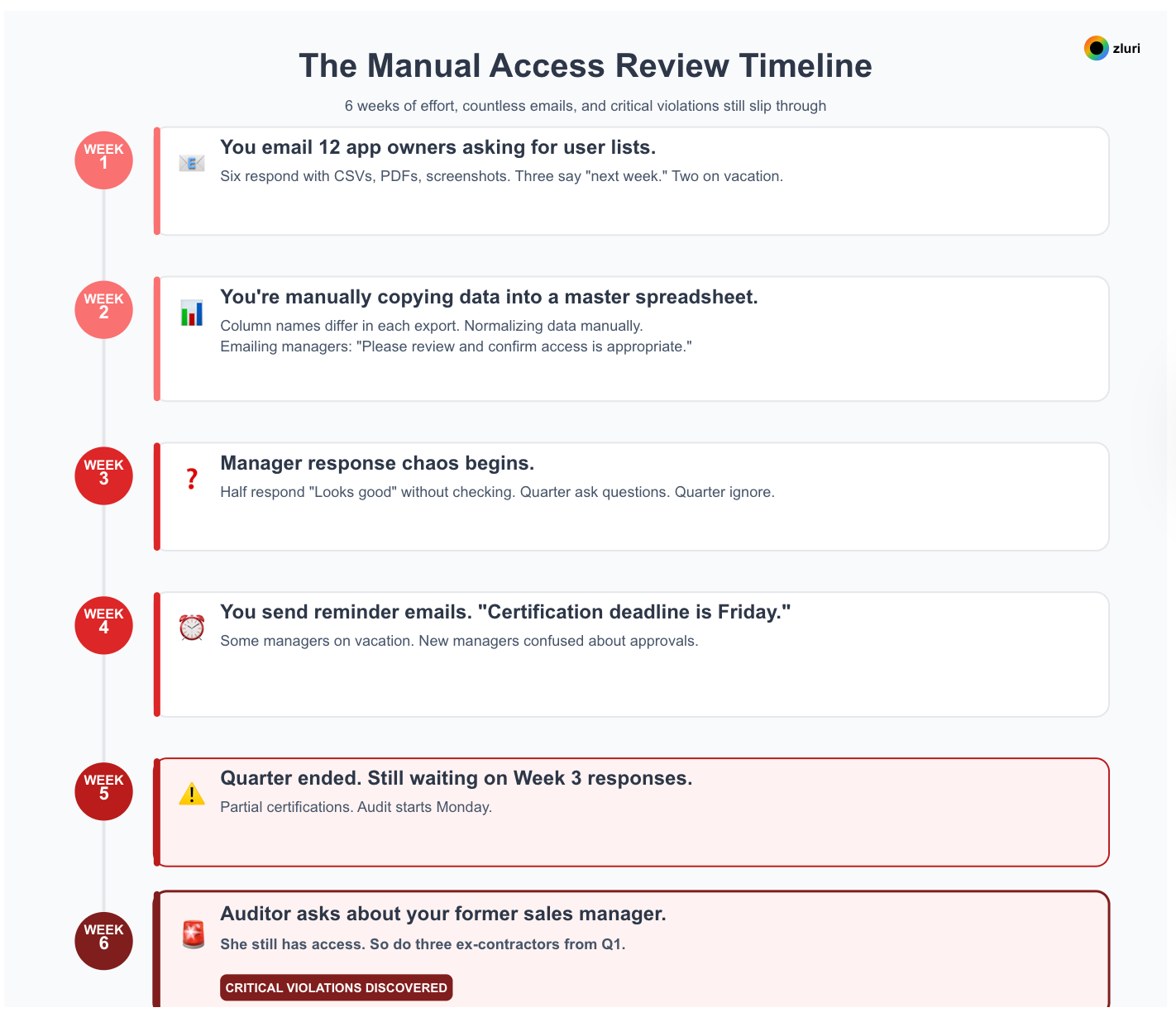

Here's what happens when you run that review:

Week 1: You email 12 app owners asking for user lists. Six respond immediately with CSVs and screenshots (one sends a PDF). Three say they'll "get to it next week." Two are on vacation. One replies: "What format do you need?"

Week 2: You're copying data into a master spreadsheet by hand. Column names differ in every export, so each one has to be normalized manually. You start emailing managers: "Please review these users and confirm access is appropriate."

Week 3: Half the managers respond with "Looks good" without actually checking. A quarter ask clarifying questions you can't answer. A quarter ignore it entirely.

Week 4: You send reminder emails. "Certification deadline is Friday." Some managers are now on vacation. New managers don't know what their predecessors approved. You're tracking responses in another spreadsheet.

Week 5: The quarter is over. You're still waiting on responses from Week 3. You have partial certifications. The audit starts Monday.

Week 6: The auditor asks about your former sales manager. You discover she still has access. So do three contractors whose projects ended in Q1.

We've watched this exact pattern play out at hundreds of companies. Weeks of effort, dozens of people involved, and critical violations still slip through. The reviews happened. The violations persisted.

This isn't about compliance checkboxes. It's about whether your access reviews actually make you more secure or just create the illusion of control.

What is a user access review?

Before we explain what access reviews should be, let's acknowledge what they are at most companies: a quarterly event where IT emails managers asking, "Are these people still on your team?" and waits for responses that may or may not arrive before the deadline.

When managers do respond, they're looking at a spreadsheet with no context. Is this access new (granted last week) or old (been there for years)? They don't know. So they approve everything to avoid blocking someone's work.

A user access review (also called access certification or entitlement review) is the process of periodically validating that users have appropriate access to applications and data based on their current role, responsibilities, and employment status.

What you're actually reviewing

User-to-app relationships

Who has access to which applications? What level of permissions do they have? Is this access still necessary?

Role-based entitlements

Does their access match their current job function? Have they accumulated permissions from previous roles (privilege creep)? Are there excessive admin rights?

Group memberships

Many organizations grant access through SSO groups—not individual app assignments. When someone joins "Engineering-FullStack," they automatically get access to 15-20 apps. Group-based access reviews let you certify at the group level: "Should this person be in this group?" rather than reviewing 2,000 users across 200 apps (400,000 data points).
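To make the scale difference concrete, here is a back-of-the-envelope sketch in Python. It compares the worst case, where every user is certified against every app, with certifying group memberships plus each group's app entitlements. The 2,000 users and 200 apps come from the text. The group structure (50 groups, two memberships per user, 15 apps per group) is a hypothetical assumption for illustration only.

```python
def user_level_decisions(users: int, apps: int) -> int:
    """Worst case: every user-to-app pair is its own certification decision."""
    return users * apps


def group_level_decisions(users: int, groups_per_user: int,
                          groups: int, apps_per_group: int) -> int:
    """One 'should this person be in this group?' decision per membership,
    plus one decision per group-to-app entitlement."""
    memberships = users * groups_per_user
    entitlements = groups * apps_per_group
    return memberships + entitlements


# Figures from the text: 2,000 users across 200 apps.
per_user = user_level_decisions(users=2_000, apps=200)

# Hypothetical group structure (assumption, for illustration only):
# 50 groups, 2 memberships per user, 15 apps granted per group.
per_group = group_level_decisions(users=2_000, groups_per_user=2,
                                  groups=50, apps_per_group=15)

print(per_user, per_group)  # 400000 4750
```

The ratio depends entirely on how clean your group structure is. The point is that group-level decisions grow with memberships and entitlements, not with users multiplied by apps.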

What's not an access review

- Initial access provisioning (that's onboarding)

- Real-time access monitoring (that's continuous governance)

- Reviewing audit or access logs (that's activity monitoring)

The cadence

Most organizations conduct access reviews quarterly or semi-annually. Compliance frameworks set expectations, although most leave the exact interval to your risk assessment and your auditor. Commonly expected cadences:

- SOC 2: At least annually, quarterly recommended

- PCI DSS: At least every six months for user accounts and access privileges (v4.0, Requirement 7.2.4)

- HIPAA: Annually minimum

- ISO 27001: Annually minimum

- SOX: Quarterly for financial systems

Types of user access review

Access reviews aren't one-size-fits-all. Organizations typically use a combination of approaches based on their size, maturity, and risk profile.

- User-based reviews take the most granular approach, reviewing individual users across all their applications. This method catches everything—every user, every app, every permission level. But it's also the most time-intensive.

A manager reviewing 50 users across 10 apps faces 500 individual decisions. When you scale this to 2,000 users across 100 apps, you're looking at 200,000 data points to certify.

This approach works well for small teams (under 100 people) or for high-risk applications where you need complete granularity, but it becomes overwhelming quickly as the organization grows.

- Group-based reviews represent the modern approach to access certification. Instead of reviewing individual user-to-app assignments, you review SSO groups and their entitlements.

When someone joins "Engineering-FullStack," they automatically get access to 15-20 apps. Group-based reviews let you certify at the group level rather than reviewing each app individually—this approach is 10x faster and aligns with how access is actually granted.

The tradeoff: it requires a mature SSO group structure. If your groups are messy or inconsistently applied, group-based reviews won't work well. This method is best for organizations with SSO adoption and well-defined group taxonomies.

- Application-based reviews flip the approach entirely. Instead of asking "what does this user have access to?", you ask "who has access to this critical application?" This focuses the review on specific high-risk systems rather than trying to certify everything at once. You might review all users with access to your financial systems, customer database, or admin tools.

The benefit of this approach is you can prioritize compliance-critical apps (SOX, HIPAA scope) and complete those reviews first.

The limitation: you may miss cross-app privilege creep, where someone accumulates excessive access across multiple less-critical systems.

This approach works well for compliance-driven reviews where specific applications need quarterly certification.

- Role-based reviews organize certifications by job function rather than by application or individual user. You review all engineers, all sales reps, all finance team members.

This method catches role-based anomalies—cases where someone's access doesn't match their job function, or where they've retained permissions from previous roles.

The challenge: it requires a well-defined role taxonomy applied consistently across your organization. If roles are vague or inconsistently assigned, the reviews become difficult to execute.

Organizations with mature RBAC (role-based access control) implementation get the most value from this approach.

Most organizations use a combination of these methods. Start with application-based reviews for SOX/HIPAA-scoped apps that need quarterly certification, then expand to group-based reviews for everything else.

User-based reviews can supplement in specific cases—perhaps for executives or for applications with unusually sensitive data. Role-based reviews work well as an annual exercise to catch systemic issues that individual app reviews might miss.
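As a rough illustration of that mix, the sketch below picks review types for a single app. The function name and the two input sets are hypothetical. The rules simply encode the guidance above, and "per review cycle" stands in for whatever cadence you choose, since the text does not fix one for group-based or user-based reviews.

```python
def plan_reviews(app: str, compliance_scoped: set[str],
                 sensitive: set[str]) -> list[tuple[str, str]]:
    """Return (review type, cadence) pairs for one app, following the mix above."""
    plan = []
    if app in compliance_scoped:
        # SOX/HIPAA-scoped apps: application-based, certified quarterly
        plan.append(("application-based", "quarterly"))
    else:
        # Everything else: group-based reviews
        plan.append(("group-based", "per review cycle"))
    if app in sensitive:
        # Unusually sensitive data: supplement with a user-based review
        plan.append(("user-based", "per review cycle"))
    # Role-based review as an annual sweep for systemic issues
    plan.append(("role-based", "annual"))
    return plan


print(plan_reviews("netsuite", compliance_scoped={"netsuite"}, sensitive=set()))
# [('application-based', 'quarterly'), ('role-based', 'annual')]
```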

Why manual user access reviews fail

Most organizations know access reviews are important. The question is: why do IT teams spend weeks and involve dozens of people every quarter on something that still leaves critical violations unaddressed? Why does the process feel like a compliance checklist instead of actual security?

IT emails 15 managers asking them to review their team's access. Six respond within a week. Four respond with "looks fine" without checking. Three forward to someone else who never responds. Two are on vacation. By the time everyone's input arrives, the quarter is over.

IT teams spend days on this. Managers spend hours. GRC teams are already preparing for the next cycle. And the contractor from last year's project? Still has admin access.

Reason 1. Time drain: Manual reviews consume 149 person-days per quarterly cycle

Manual access reviews aren't just slow—they're resource black holes. We tracked the actual time investment across 50 quarterly review cycles: multiple people across IT, Security, and GRC involved for days at a time. Not once. Every quarter.

A typical company spends 149 person-days per quarterly review, and the cycle repeats four times a year. The cumulative time investment is equivalent to multiple full-time employees doing nothing but access reviews.

Reviewers aren't looking at 20 decisions. They're looking at hundreds or thousands. A manager reviewing 50 users across 10 apps faces 500 individual decisions with no context about whether access is new or old, whether it's been used or dormant, or whether it's appropriate for that role.

So "Approve All" becomes the default. Not because managers don't care, but because they're drowning in data they can't evaluate.

Reason 2. Manual processes have significantly lower effectiveness in enforcing access policies

We compared organizations with manual processes to those with automation. Roughly half of organizations running manual reviews report effective enforcement of access policies during reviews; among those that automated, the figure is more than two-thirds.

Why?

Because manual reviews optimize for completion, not accuracy. The goal becomes "finish the review" not "catch the violations."

Reason 3. Sixty percent of IT leaders say their manual reviews regularly miss deadlines because of remediation delays

We surveyed 215 IT leaders. Sixty percent said their manual reviews regularly miss deadlines. The culprit? Remediation.

The review itself might complete on time. But then comes the remediation phase: actually removing access. That happens manually, app by app, often via tickets that sit in IT queues for weeks.

Reason 4. Security incidents trace back to issues access reviews should have caught

Many security incidents can be traced back to the exact issues access reviews are supposed to catch: over-permissive access that existed for months, ex-employees who retained access, third-party vendors with persistent elevated permissions.

In many cases, these violations had already surfaced in a review. They persisted anyway.

Reason 5. Manual processes mean you're always behind when auditors ask for evidence

When auditors ask for evidence of access reviews, they want proof reviews happened on schedule, evidence violations were remediated, and audit trails showing WHO approved/revoked WHAT and WHEN.

"We're working on remediation" isn't acceptable. Manual processes mean you're always behind, always exposed.

Reason 6. Your best security talent spends weeks per quarter on reviews instead of strategic work

The weeks per quarter your best IT and security talent spends on access reviews are weeks NOT spent on strategic security initiatives, innovation projects, actual threat response, or system improvements.

The root cause of manual user access review failures: the visibility problem

Most access review failures trace back to a single architectural flaw: they're built on incomplete visibility.

The industry—both traditional IGA vendors and those calling themselves "modern"—optimizes for governance. They assume you already know what exists in your environment, then help you manage it. But that assumption is wrong.

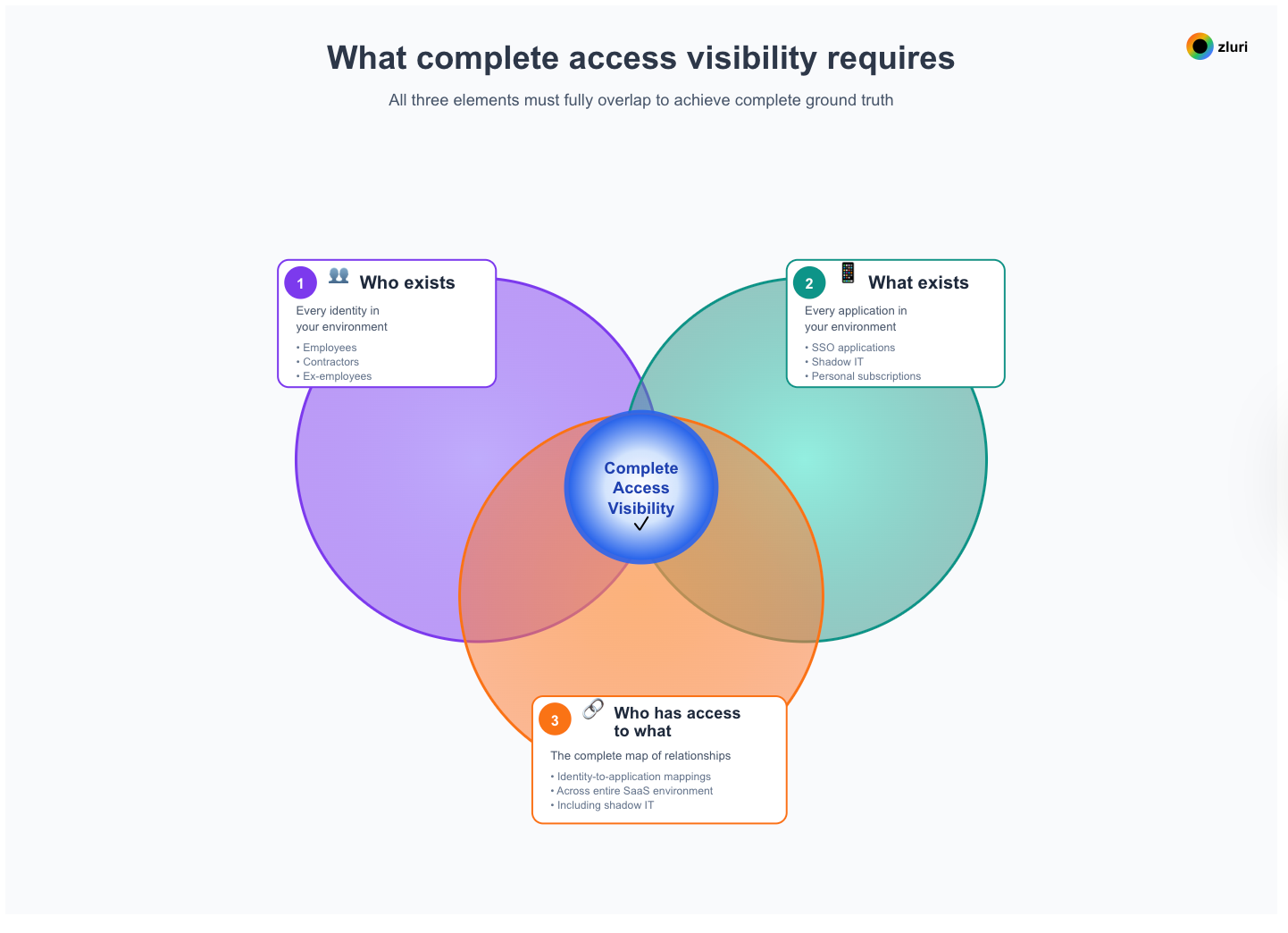

What complete access visibility requires

If you're going to govern access, you need to know three things with complete accuracy:

- Who exists - Every identity in your environment (employees, contractors, ex-employees, service accounts, external users)

- What exists - Every application in your environment (SSO apps, shadow IT, personal subscriptions, legacy tools)

- Who has access to what - The complete map of relationships between identities and applications

We call this the Discovery Triad: three interdependent discoveries that together give you ground truth about your access landscape.

Most access review solutions—traditional and modern—rely on IdP/SSO-based visibility:

- Identities: Pulled from IdP, HRMS, or employee directory

- Applications: Only apps integrated with IdP/SSO

- Access mapping: Only relationships visible through your identity provider or single sign-on

That gives you partial truth. These tools see only the access landscape visible to your IdP/SSO. Everything else (identities that bypass your directory, applications purchased outside IT approval, access relationships that never touch SSO) remains invisible.

The visibility gap

We measured this gap across 215 companies. The typical organization has 94 apps visible in their IdP. Comprehensive discovery finds 187. That gap—93 applications with associated identities and access relationships—is invisible to IdP-based access reviews.

The math gets worse when you account for the full Discovery Triad:

- Identities not in your directory: Contractor accounts created directly in apps, service accounts, external collaborators, orphaned accounts from acquisitions

- Applications outside SSO: Personal ChatGPT subscriptions, department Airtable purchases, legacy tools predating SSO adoption, vendor portals accessed via personal email

- Access relationships outside IdP: Direct app logins, shared credentials, API keys, OAuth grants that bypass SSO

You can't govern what you don't know exists. Access reviews built on IdP or SSO based visibility aren't reviewing your complete access landscape—they're reviewing the portion visible to your identity provider.
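One way to picture the visibility gap is as a set difference: merge every discovery feed into a single app inventory, then list the apps the IdP never reported. The sketch below assumes each feed has already been reduced to a set of normalized app names. The feed names and app names are made up for illustration, and real connectors would need name normalization before this step.

```python
from collections import defaultdict


def build_inventory(sources: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each discovered app to the set of discovery sources that reported it."""
    inventory: dict[str, set[str]] = defaultdict(set)
    for source, apps in sources.items():
        for app in apps:
            inventory[app].add(source)
    return dict(inventory)


def visibility_gap(inventory: dict[str, set[str]], idp_source: str = "idp") -> list[str]:
    """Apps found by some discovery method but never reported by the IdP."""
    return sorted(app for app, seen_by in inventory.items() if idp_source not in seen_by)


# Hypothetical discovery feeds; real ones would come from connectors.
sources = {
    "idp": {"salesforce", "github", "slack"},
    "finance": {"salesforce", "airtable", "chatgpt"},
    "browser": {"github", "chatgpt", "notion"},
    "desktop": {"legacy-crm"},
}

inventory = build_inventory(sources)
gap = visibility_gap(inventory)
print(gap)  # ['airtable', 'chatgpt', 'legacy-crm', 'notion']
print(f"{len(inventory) - len(gap)} of {len(inventory)} apps visible to the IdP")
```

The same merge-and-diff approach applies to the other two legs of the Discovery Triad: identities and access relationships.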

Even vendors positioning themselves as "modern IGA" or "autonomous" primarily depend on IdP/SSO integration

These vendors understand that visibility matters. They just solve for the wrong definition of visibility.

They're optimizing for governance efficiency once you've connected your IdP/SSO. The assumption: if it's not in your IdP or SSO, it shouldn't exist. But that's not how companies actually work. Departments buy tools. Developers spin up services. Employees use personal accounts for work. None of that touches your IdP—but all of it creates access risk.

The industry treats IdP and SSO coverage as the goal. Complete visibility should be the goal, with IdP/SSO integration as one input among many.

What access reviews should look like

Here's what changes when you start with complete visibility:

Ground truth requires discovery methods beyond SSO/IdP integration

Access reviews should be built on complete discovery of the full Discovery Triad—who exists, what exists, and who has access to what. Not just what's visible to your IdP/SSO.

That requires discovery methods beyond IdP/SSO integration. For example, finance system data shows application purchases IT never approved. Browser activity reveals which SaaS tools employees actually use. Desktop agents identify installed applications. CASB logs capture cloud traffic. Each discovery method reveals identities, applications, or access relationships invisible to your IdP.

The goal is to establish ground truth about your access landscape before attempting governance.

Identification and remediation should happen in the same system, not across scattered tools

Most access review platforms identify violations, then stop. You get dashboards showing who has inappropriate access. Then you get CSV exports and Jira tickets.

Someone still has to:

- Log into Salesforce, find each user, remove their license

- Log into GitHub with different credentials, navigate different UI, remove organization access

- Create NetSuite support tickets, wait 3 business days for confirmation

- Email engineering about custom tools, follow up when they don't respond

We've tracked this: six weeks after access reviews "complete," a substantial portion of identified violations still exist.

Access reviews should close the loop. Identify violations AND remediate them—directly from the platform where the review happened. The action should be as automated as the identification.

For apps with APIs: one-click remediation. For apps without APIs: guided workflows that still capture audit trails. The key difference: you're not exporting problems to other systems. You're solving them where you found them.
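A minimal sketch of closed-loop remediation, assuming a hypothetical registry that maps each app to a revoke function backed by its API. Apps without a registered function fall back to a manual task, but both paths write to the same audit log. The class and field names are illustrative, not any product's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Violation:
    user: str
    app: str


@dataclass
class Remediator:
    # Hypothetical registry: app name -> function that revokes a user via that app's API.
    api_revokers: dict[str, Callable[[str], None]]
    audit_log: list[dict] = field(default_factory=list)
    manual_tasks: list[Violation] = field(default_factory=list)

    def remediate(self, violation: Violation, actor: str) -> str:
        revoke = self.api_revokers.get(violation.app)
        if revoke is not None:
            revoke(violation.user)               # app has an API: revoke directly
            status = "revoked_via_api"
        else:
            self.manual_tasks.append(violation)  # no API: open a guided manual task
            status = "manual_task_opened"
        # Both paths write to the same audit trail, so nothing leaves the system.
        self.audit_log.append({
            "actor": actor,
            "user": violation.user,
            "app": violation.app,
            "status": status,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return status


# Usage with a stand-in revoker that just records what it was asked to do.
revoked: list[tuple[str, str]] = []
remediator = Remediator(api_revokers={"github": lambda u: revoked.append(("github", u))})
remediator.remediate(Violation("former-sales-manager", "github"), actor="it-admin")
remediator.remediate(Violation("former-sales-manager", "custom-tool"), actor="it-admin")
print([entry["status"] for entry in remediator.audit_log])
# ['revoked_via_api', 'manual_task_opened']
```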

Continuous validation catches violations as they emerge, not months later

Quarterly access reviews are backward-looking by design. You're certifying access that's been in place for 3-6 months. By the time you identify a violation, damage may already be done.

Access reviews shouldn't be events; they should be continuous validation. Between quarterly certifications, the system should flag anomalies in real time:

- Dormant accounts suddenly active

- External users gaining admin privileges

- New applications bypassing SSO

- Contractors accessing systems months after projects end

This isn't replacing quarterly reviews—it's making them more effective by catching violations as they emerge, not months later.
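The four triggers above can be expressed as simple rules evaluated on each access event as it arrives, rather than once a quarter. This is a sketch under stated assumptions: the event shape, the event types and the 90-day dormancy threshold are all hypothetical and would need tuning to your own data and policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

DORMANCY = timedelta(days=90)  # assumption: tune to your own policy


@dataclass
class AccessEvent:
    user: str
    app: str
    kind: str                           # hypothetical event types: "login" or "role_change"
    on: date
    user_type: str = "employee"         # "employee", "contractor" or "external"
    last_login: Optional[date] = None   # previous login before this event
    new_role: Optional[str] = None      # set for role_change events
    via_sso: bool = True                # False if the access did not go through SSO
    project_end: Optional[date] = None  # contractors only


def flags(e: AccessEvent) -> list[str]:
    """Evaluate one incoming event against the four rules listed above."""
    found = []
    if e.kind == "login" and e.last_login and e.on - e.last_login > DORMANCY:
        found.append("dormant account suddenly active")
    if e.kind == "role_change" and e.user_type == "external" and e.new_role == "admin":
        found.append("external user gained admin")
    if not e.via_sso:
        found.append("access outside SSO")
    if e.user_type == "contractor" and e.project_end and e.on > e.project_end:
        found.append("contractor active after project end")
    return found


event = AccessEvent("contractor-042", "netsuite", "login", date(2024, 6, 1),
                    user_type="contractor", last_login=date(2023, 12, 1),
                    project_end=date(2024, 3, 31))
print(flags(event))
# ['dormant account suddenly active', 'contractor active after project end']
```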

Context and intelligence prevent "approve all" behavior during reviews

Showing managers a spreadsheet of 500 access decisions without context guarantees "Approve All" behavior. Access reviews should provide intelligence:

- Usage patterns: This access was granted 18 months ago and never used

- Risk scoring: This user has admin access to 12 financial systems—role requires 2

- Anomaly detection: This contractor account was dormant for 6 months, logged in yesterday

- Compliance mapping: This SOX-scoped app has 8 users with excessive permissions

The goal is to turn raw access data into risk-weighted insights that make decisions obvious, not overwhelming.
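To show how context can change what a reviewer sees, the sketch below attaches plain-language labels to each review item and sorts the most-flagged items to the top. The data model, the per-user admin counts and the labels are hypothetical; a real risk score would weigh far more signals.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ReviewItem:
    user: str
    app: str
    granted_on: date
    last_used: Optional[date]  # None means the access was never used
    is_admin: bool
    sox_scope: bool


def months_between(start: date, end: date) -> int:
    return (end.year - start.year) * 12 + (end.month - start.month)


def context_labels(item: ReviewItem, today: date,
                   admin_held: int, admin_needed: int) -> list[str]:
    labels = []
    if item.last_used is None:
        labels.append(f"granted {months_between(item.granted_on, today)} months ago, never used")
    if item.is_admin and admin_held > admin_needed:
        labels.append(f"admin on {admin_held} systems, role requires {admin_needed}")
    if item.is_admin and item.sox_scope:
        labels.append("admin access to a SOX-scoped app")
    return labels


def prioritize(items, today, admin_held, admin_needed):
    """Attach labels and sort so the most-flagged items reach the reviewer first."""
    labelled = [(i, context_labels(i, today, admin_held[i.user], admin_needed[i.user]))
                for i in items]
    return sorted(labelled, key=lambda pair: len(pair[1]), reverse=True)


items = [
    ReviewItem("bob", "slack", date(2024, 5, 1), date(2024, 7, 10), False, False),
    ReviewItem("alice", "netsuite", date(2023, 1, 15), None, True, True),
]
ranked = prioritize(items, date(2024, 7, 15), {"alice": 12, "bob": 0}, {"alice": 2, "bob": 0})
for item, labels in ranked:
    print(item.user, labels)
```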

How to implement user access reviews: building on ground truth

The principles above require specific architectural choices. Here's what that looks like in practice, using Zluri as an example of a platform built around complete Discovery Triad coverage.

Establishing ground truth requires nine discovery methods, not one

Beyond SSO/IdP integration, Zluri runs nine discovery methods simultaneously:

- SSO/IdP (Okta, Google Workspace, Entra ID) → Login events, authorized apps

- Finance/Expense (NetSuite, QuickBooks) → Transaction data, employee purchases

- Direct Integrations (300+ apps) → User access, licenses, audit logs

- Desktop Agents → Installed apps, background processes

- Browser Extensions → Web-based SaaS usage

- MDMs → Mobile device apps

- CASBs → Cloud traffic monitoring

- HRMS → Employee data, department mapping

- Directories (Azure AD, now Microsoft Entra ID) → User identities, group memberships

Each method reveals different aspects of the Discovery Triad. SSO/IdP is one input—not the complete picture. Finance data shows applications purchased outside IT approval. Browser extensions reveal personal subscriptions used for work. Desktop agents identify legacy tools that predate SSO adoption.

Discovery should run continuously, not as periodic scans or manual imports. In Zluri's implementation, new app purchases, new user sign-ups, and new group memberships become visible within 24 hours—establishing continuous ground truth.

Automatic remediation eliminates weeks of manual work across multiple systems

When access reviews identify violations, the system should remediate directly—not export to other tools. This is also called closed-loop remediation.

Here's the difference:

IdP-based approach:

- Identify violations → Export CSV → Create Jira tickets → IT manually logs into each app → Weeks later, maybe some are remediated

Ground truth approach (Zluri example):

- Identify violations → Click "Revoke Access" → Done

For apps with API integrations, remediation is automatic. For apps without APIs, Zluri provides SDKs or guided manual workflows—but the audit trail is still captured in the system. No scattered Jira tickets. No "we're still working on it" when auditors ask six weeks later.

The system should also power automated remediation playbooks:

- When someone leaves → access removed across all apps automatically

- When someone changes roles → entitlements adjust based on new group membership

- When high-risk violations are detected → immediate remediation or escalation
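The leaver and mover playbooks reduce to set operations, assuming all access is granted through groups. The sketch below computes what to revoke for a leaver, and what to grant and revoke for a mover by diffing current access against what the new groups grant. Function names and the group-to-app mapping are hypothetical.

```python
def leaver_plan(current_access: dict[str, set[str]], user: str) -> set[str]:
    """Leaver: every app the user can currently reach is scheduled for revocation."""
    return set(current_access.get(user, set()))


def mover_plan(current_apps: set[str], new_groups: set[str],
               group_apps: dict[str, set[str]]) -> tuple[set[str], set[str]]:
    """Mover: target access is whatever the new groups grant; diff it against today."""
    target = set().union(*(group_apps.get(g, set()) for g in new_groups))
    to_grant = target - current_apps
    to_revoke = current_apps - target
    return to_grant, to_revoke


# Hypothetical group-to-app mapping.
group_apps = {
    "Sales": {"salesforce", "slack", "gong"},
    "Finance": {"netsuite", "slack"},
}
grant, revoke = mover_plan({"salesforce", "slack", "gong"}, {"Finance"}, group_apps)
print(sorted(grant), sorted(revoke))  # ['netsuite'] ['gong', 'salesforce']
```

If some access was granted individually and separately approved, you would exclude it from `to_revoke` rather than removing it automatically.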

Intelligence transforms spreadsheets into risk-weighted decisions

Access review platforms shouldn't just show who has access—they should provide context that makes decisions obvious. Zluri's intelligence layer includes:

- Usage intelligence: Who's licensed but never logged in, who hasn't accessed in 90+ days, who logged in yesterday after 6 months inactive

- Risk scoring: Which apps have access to sensitive data, which users have excessive admin privileges, which contractors still have access after projects ended

- Compliance mapping: SOX-critical apps with over-provisioned access, HIPAA-covered apps with external user access, SOC 2 scope apps with inactive users

- AI-driven anomalies: Access patterns that don't match department norms, dormant accounts suddenly active, privilege creep across years

Group-based reviews reduce decision fatigue significantly. Instead of reviewing 2,000 users across 100 apps (200,000 data points), review at the group level—certify 50 SSO groups and their entitlements for 10x faster reviews with the same security outcome.

Indefinite audit log retention provides evidence without retention paywalls

Access review platforms should maintain indefinite audit logs—not limited retention windows with paid upgrades for longer history. When auditors ask for evidence from 18 months ago, you should have complete trails showing who reviewed, what decisions were made, when remediation occurred, and proof of completion.

Zluri stores all audit logs indefinitely with no paywall—unlike solutions like Okta that charge for retention beyond 90 days.
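The audit evidence described above boils down to an append-only record of who did what, to which access, and when, plus a way to pull everything for an audited period. This in-memory sketch uses hypothetical names; a production system would persist events to durable, write-once storage.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AuditEvent:
    at: datetime
    actor: str   # who reviewed or remediated
    action: str  # e.g. "certified", "revoked", "campaign_completed"
    user: str
    app: str


class AuditTrail:
    """Append-only: events are only ever added, and nothing is pruned by age."""

    def __init__(self) -> None:
        self._events: list[AuditEvent] = []

    def record(self, event: AuditEvent) -> None:
        self._events.append(event)

    def evidence(self, start: datetime, end: datetime) -> list[AuditEvent]:
        """Everything that happened in [start, end), e.g. one audited quarter."""
        return [e for e in self._events if start <= e.at < end]


trail = AuditTrail()
trail.record(AuditEvent(datetime(2023, 1, 10), "manager-a", "certified", "ana", "netsuite"))
trail.record(AuditEvent(datetime(2023, 1, 12), "it-admin", "revoked", "contractor-7", "netsuite"))
trail.record(AuditEvent(datetime(2024, 6, 1), "manager-a", "certified", "ana", "netsuite"))
q1_2023 = trail.evidence(datetime(2023, 1, 1), datetime(2023, 4, 1))
print(len(q1_2023))  # 2
```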

The impact of complete visibility on access reviews

Organizations that moved from IdP-based to comprehensive Discovery Triad coverage saw substantial improvements. Based on data we tracked across automated access review implementations:

Policy enforcement: Organizations went from roughly half reporting effective policy enforcement to more than two-thirds with that level of confidence.

Resource efficiency:

- Fewer people managing the process: down from 20+ to mid-teens

- Faster completion: down from 7 days to 4 days per cycle

- Dramatic reduction in total person-days: from 149 person-days to 55

Time to value

Unlike traditional IGA implementations that take 6-12 months, platforms built on ground truth principles can launch their first automated reviews within weeks. The discovery engine starts finding apps on day one, and the first review campaign can launch by week 2-3.

The ROI math of moving from manual to automated user access review

For a company running quarterly reviews:

- Manual: 149 person-days × 4 quarters = 596 person-days/year

- Automated: 55 person-days × 4 quarters = 220 person-days/year

- Savings: 376 person-days/year, or about 1.9 FTEs (~2 full-time employees) at roughly 200 working days per FTE

At an average IT salary of $100K, that's $190K in labor savings annually. Plus reduced security risk, faster compliance, and better audit outcomes.
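The figures above can be reproduced in a few lines. The source does not say how many working days make up one FTE; the 1.9 figure is consistent with roughly 200 working days per year, which is an assumption here.

```python
REVIEWS_PER_YEAR = 4
MANUAL_PERSON_DAYS = 149
AUTOMATED_PERSON_DAYS = 55
WORKING_DAYS_PER_FTE = 200   # assumption; not stated in the source
AVG_IT_SALARY = 100_000      # from the text

manual_per_year = MANUAL_PERSON_DAYS * REVIEWS_PER_YEAR        # 596
automated_per_year = AUTOMATED_PERSON_DAYS * REVIEWS_PER_YEAR  # 220
saved_days = manual_per_year - automated_per_year              # 376
fte_saved = round(saved_days / WORKING_DAYS_PER_FTE, 1)        # 1.9
labor_savings = round(fte_saved * AVG_IT_SALARY)               # 190000

print(saved_days, fte_saved, labor_savings)  # 376 1.9 190000
```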

Getting started with your first user access review

Week 1: Enable discovery

Turn on comprehensive discovery across all available methods to establish ground truth. Let it run for 7 days to complete the Discovery Triad—identities, applications, and access mappings. Most organizations discover 60-80 apps they didn't know existed.

Week 2: Define scope

Don't try to review everything at once. Start with:

- High-risk apps (finance, customer data, admin tools)

- Compliance-critical apps (SOX, PCI DSS, HIPAA, SOC 2, ISO 27001 scope)

- Apps with external user access

Week 3: Launch first campaign

Use group-based reviews where possible. Configure:

- Who reviews (manager, app owner, or IT)

- Approval workflows (single-level for most, multi-level for critical)

- Remediation playbooks (auto-revoke or manual)
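A campaign configuration covering those three choices might look like the following. The `Campaign`, `Reviewer` and `Remediation` types are hypothetical, not a product API; the `validate` check simply encodes the "multi-level for critical" guidance.

```python
from dataclasses import dataclass, field
from enum import Enum


class Reviewer(Enum):
    MANAGER = "manager"
    APP_OWNER = "app_owner"
    IT = "it"


class Remediation(Enum):
    AUTO_REVOKE = "auto_revoke"
    MANUAL = "manual"


@dataclass
class Campaign:
    name: str
    apps: list[str]
    # One entry = single-level approval; several entries = multi-level, in order.
    approval_chain: list[Reviewer] = field(default_factory=lambda: [Reviewer.MANAGER])
    remediation: Remediation = Remediation.MANUAL
    group_based: bool = True

    def validate(self, critical_apps: set[str]) -> list[str]:
        """Flag settings that contradict the guidance above."""
        problems = []
        if set(self.apps) & critical_apps and len(self.approval_chain) < 2:
            problems.append("critical apps should use multi-level approval")
        if not self.approval_chain:
            problems.append("campaign has no reviewer")
        return problems


critical = {"netsuite", "salesforce"}
q3 = Campaign("Q3 finance review", ["netsuite"], remediation=Remediation.AUTO_REVOKE)
print(q3.validate(critical))  # ['critical apps should use multi-level approval']
```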

Week 4: Review & remediate

Reviewers certify access directly in the platform. Violations are remediated immediately via closed-loop workflows. Reports are automatically generated for audit evidence.

Ongoing: Quarterly cadence

After the first review, set up recurring campaigns. Automation handles reminders, tracks completion, and ensures you never miss a deadline.
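Recurring campaigns and reminders are mostly date arithmetic. The sketch below assumes calendar quarters, a 14-day review window and reminders 7, 3 and 1 days before the due date; all three are hypothetical defaults, not values from the source.

```python
from datetime import date, timedelta


def campaign_schedule(year: int, review_days: int = 14,
                      reminder_offsets: tuple[int, ...] = (7, 3, 1)) -> list[dict]:
    """One campaign per quarter, with reminders N days before each due date."""
    schedule = []
    for month in (1, 4, 7, 10):
        start = date(year, month, 1)
        due = start + timedelta(days=review_days)
        reminders = [due - timedelta(days=d) for d in reminder_offsets]
        schedule.append({"start": start, "due": due, "reminders": reminders})
    return schedule


def who_to_remind(assigned: set[str], completed: set[str]) -> set[str]:
    """Only reviewers who have not finished yet get the next reminder."""
    return assigned - completed


q1 = campaign_schedule(2025)[0]
print(q1["due"], q1["reminders"])
print(sorted(who_to_remind({"ana", "raj", "lee"}, {"raj"})))  # ['ana', 'lee']
```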

Advanced: Continuous governance

Once comfortable with quarterly reviews, enable real-time risk detection across the complete Discovery Triad. Systems built on ground truth can flag anomalies between reviews—dormant accounts suddenly active, external users gaining excessive access, new applications bypassing SSO—allowing immediate action, not quarterly delays.

The future is continuous access governance

The future isn't quarterly access reviews built on IdP-based visibility—it's continuous access governance built on ground truth.

Access validated in real time across the complete Discovery Triad. When someone changes roles, entitlements adjust automatically. When an app is flagged as risky, access is reviewed immediately. Compliance audits become "pull this report," not "spend 2 weeks gathering evidence."

Organizations with complete Discovery Triad coverage are moving toward this model. Discovery is continuous. Intelligence is real-time. Actions are immediate. And governance decisions are based on what actually exists—not what your IdP/SSO thinks exists.

Access reviews shouldn't be a compliance checkbox that drains resources. They should be lightweight, continuous, and actually make your organization more secure.

In our experience, most organizations plan to automate access reviews within the year. The question isn't whether to automate; it's whether you'll build on ground truth or keep certifying partial visibility.

See who has access to what in your environment and prioritize access risks.

Start a discovery scan, or book a demo to understand your complete access landscape across all applications.
