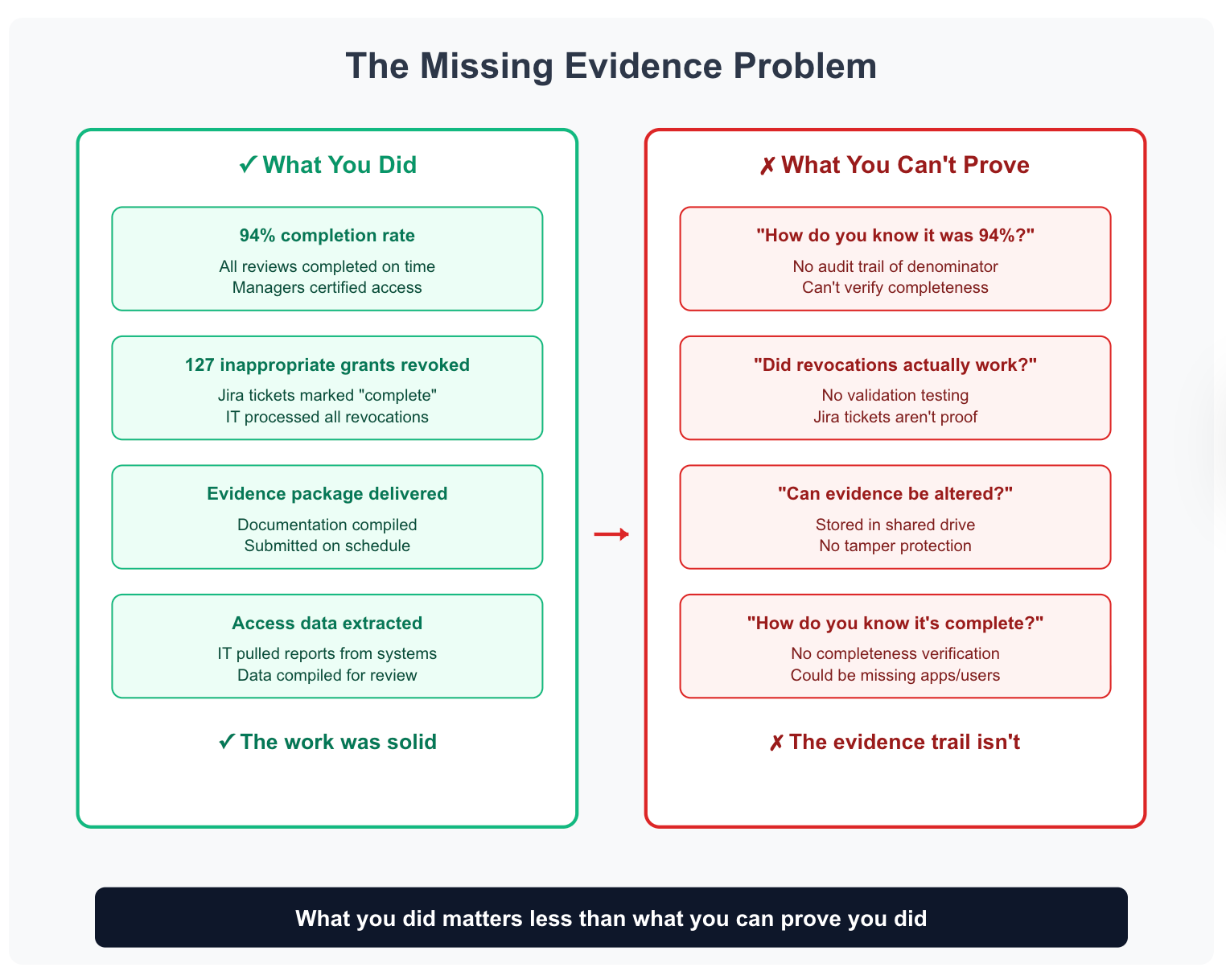

The Evidence That Wasn't There

Your external auditor reviews your Q3 access review documentation. She confirms: 94% completion rate, 127 inappropriate access grants revoked, evidence package delivered on time. Everything looks perfect.

Then she asks: "Can I see proof that the 127 revocations were actually executed?"

You show her Jira tickets marked "complete."

She asks: "Did anyone test that users can no longer access these systems?"

No validation testing occurred.

She continues: "Can I see the access data used for this review?"

You explain IT extracted it from systems.

She asks: "How do I verify the extract was complete—that no users or applications were missed?"

You have no completeness check.

Your access review executed flawlessly. But from the audit perspective, control effectiveness can't be proven. What you did matters less than what you can prove you did.

We've seen this pattern repeatedly: the underlying work is solid, but the evidence trail is incomplete.

A financial services company completed quarterly reviews religiously for one year, only to discover during their SOX audit that they'd been storing evidence in a shared drive where files could be modified. The auditor rejected everything.

Another company in tech had perfect completion metrics but couldn't demonstrate that certification owners actually understood what they were certifying—no training records, no qualification documentation.

In this guide, we explain:

- what auditors evaluate during access review audits,

- testing procedures for major compliance frameworks,

- evidence requirements that satisfy audit standards,

- common findings during the audit,

- which evidence matters and which documentation auditors reject, and

- how to build audit-ready processes that generate proof by default.

What Auditors Actually Test

Auditors check whether you completed access reviews. But they don't stop there. They evaluate whether access review controls operate effectively to achieve intended security outcomes.

Completion means nothing if the control can't prove it worked.

Testing focuses on two dimensions: control design and operating effectiveness.

Control design evaluates whether the control, if operating as designed, would effectively prevent or detect relevant risks. The question is: is this control logically capable of achieving its objective?

Design testing examines:

- Review frequency matching risk profile (quarterly for high-risk systems, annual for low-risk)

- Certification owner knowledge (managers for business need, technical leads for technical appropriateness)

- Complete scope coverage (all financial systems for SOX, all cardholder data systems for PCI)

- Appropriate remediation timelines (24 hours for privileged access, 5 days for standard)

- Escalation procedures for overdue reviews

Design deficiency means the control couldn't work even if executed perfectly. Annual access reviews for financial systems under SOX fail by design because SOX mandates quarterly reviews.

Operating effectiveness evaluates whether the control actually functioned as designed during the period. The question is: did this control work in practice?

Testing methods:

- Sample selection: Auditor picks users, applications, or transactions to test

- Evidence examination: Reviews audit trails, approval records, remediation tickets

- Reperformance: Auditor independently verifies findings (attempts authentication to validate revocation)

- Interview: Discusses process with control owners

- Recalculation: Independently computes metrics

Operating effectiveness deficiency means good design, bad execution. For example, policy requires quarterly reviews, but the Q2 review was delayed and completed in Q3.

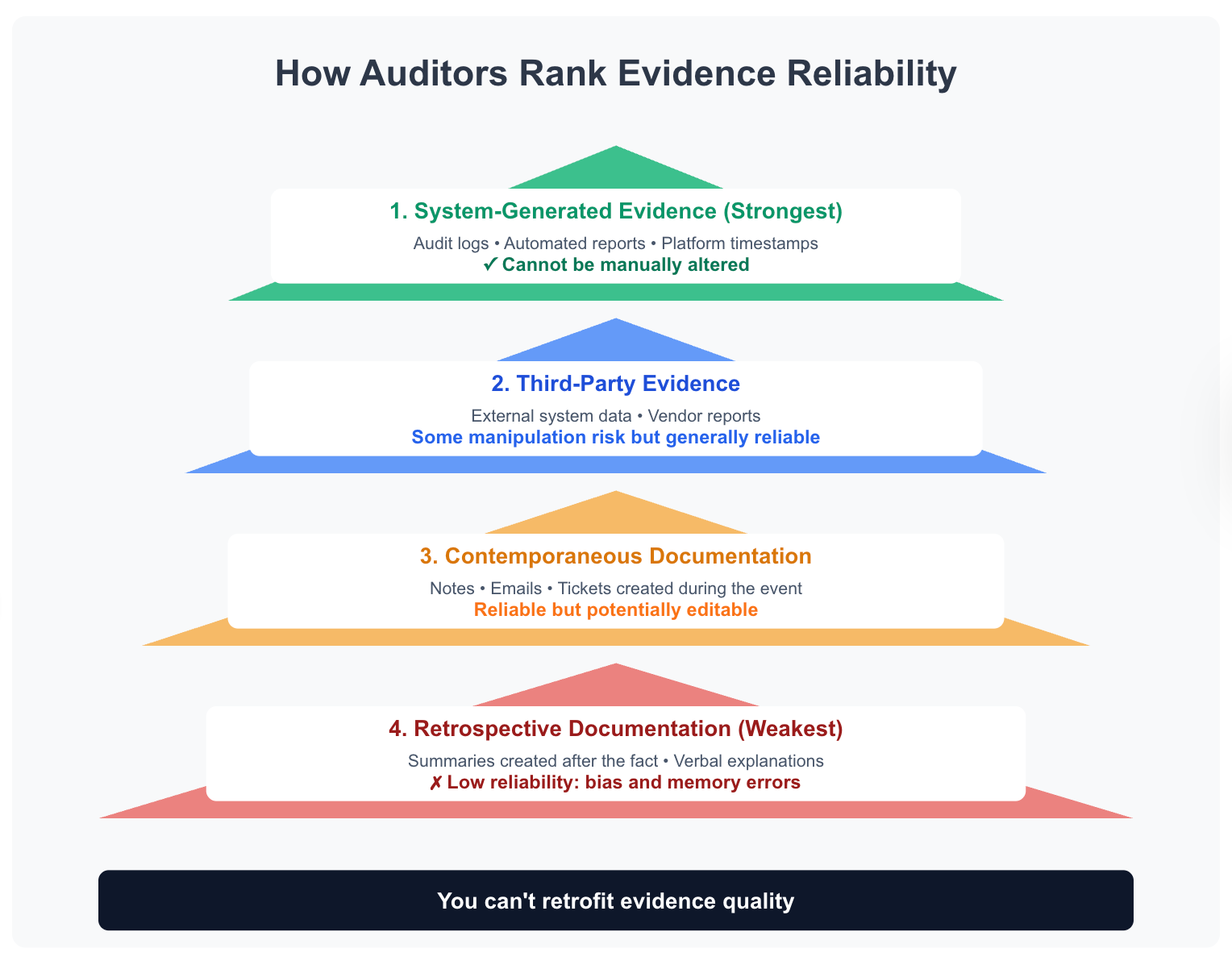

The Evidence Hierarchy

Auditors rank proof by reliability:

System-generated evidence (strongest): Audit logs, automated reports, platform timestamps. Can't be manually altered.

Third-party evidence: External system data, vendor reports. Some manipulation risk but generally reliable.

Contemporaneous documentation: Notes, emails, tickets created during the event. Reliable but potentially editable.

Retrospective documentation (weakest): Summaries created after the fact, verbal explanations. Low reliability due to bias and memory errors.

System-generated audit logs showing who certified what access with timestamps beat manager emails saying "I reviewed everything in October."

You can't retrofit evidence quality.

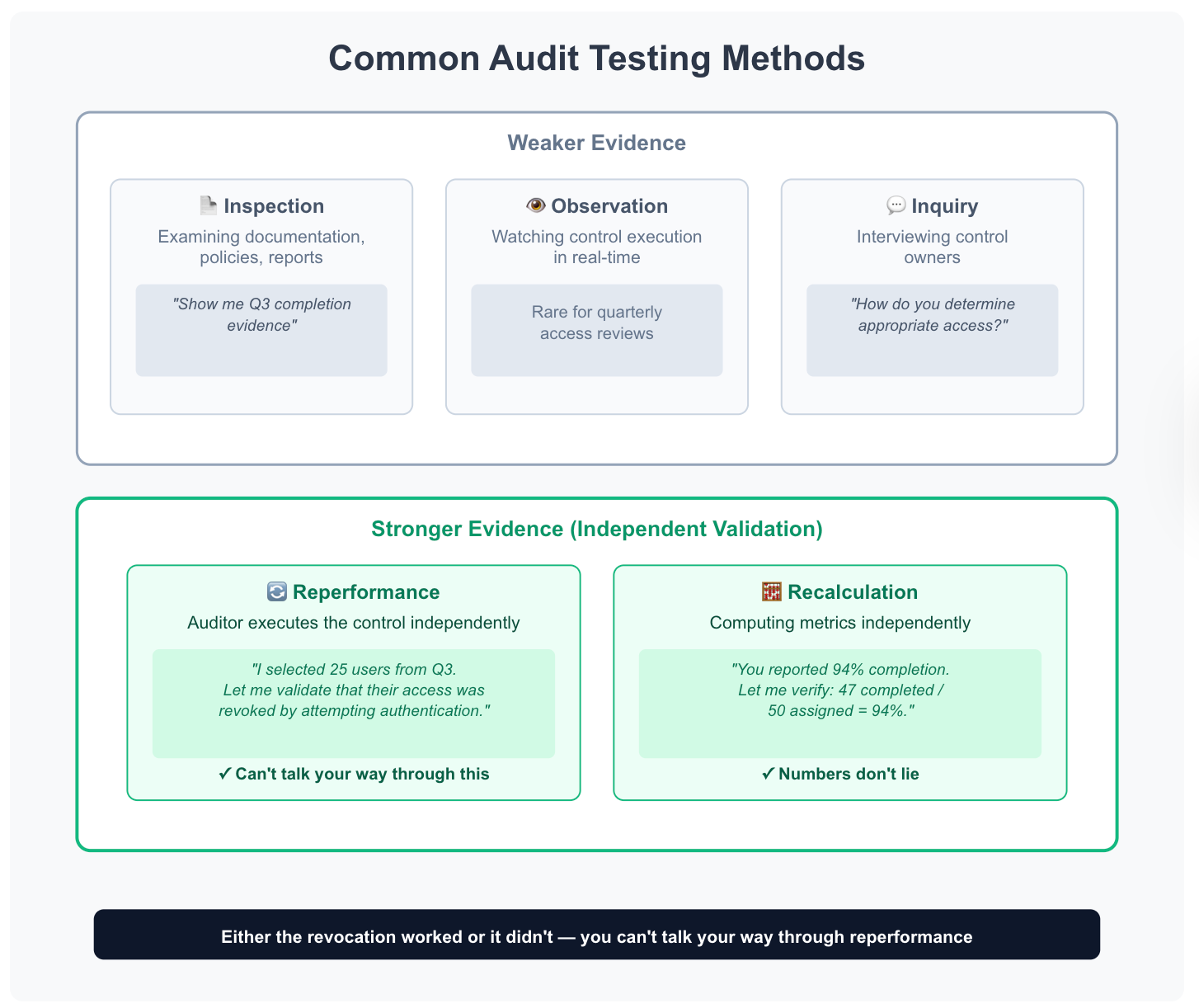

Common Testing Methods

Inspection: Examining documentation, policies, reports. "Show me Q3 completion evidence."

Observation: Watching control execution in real time. Rare for quarterly reviews.

Inquiry: Interviewing control owners. "How do you determine appropriate access?"

Reperformance: Auditor executes the control independently. "I selected 25 users from Q3. Let me validate that their access was revoked by attempting authentication."

Recalculation: Computing metrics independently. "You reported 94% completion. Let me verify: 47 completed / 50 assigned = 94%."

Reperformance and recalculation provide the strongest evidence because the auditor validates independently.

You can't talk your way through reperformance—either the revocation worked or it didn't.
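Recalculation is easy to rehearse internally before the auditor does it. The sketch below recomputes a reported completion rate from raw counts and checks it against the published figure. The function names and the half-point tolerance are our own illustrative assumptions, not part of any audit standard.

```python
from fractions import Fraction


def completion_rate(completed: int, assigned: int) -> Fraction:
    """Return completed/assigned as an exact fraction."""
    if assigned <= 0:
        raise ValueError("assigned must be positive")
    if not 0 <= completed <= assigned:
        raise ValueError("completed must be between 0 and assigned")
    return Fraction(completed, assigned)


def matches_reported(completed: int, assigned: int,
                     reported_percent: float,
                     tolerance: float = 0.5) -> bool:
    """Check a reported percentage against the recomputed one.

    The tolerance (in percentage points) is an assumed allowance for rounding.
    """
    actual_percent = float(completion_rate(completed, assigned) * 100)
    return abs(actual_percent - reported_percent) <= tolerance


# The example from the text: 47 completed out of 50 assigned, reported as 94%.
print(matches_reported(47, 50, 94))  # True
```

If the recomputed figure and the reported figure disagree, it is better to find out from your own script than from the auditor's workpapers.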

Framework-Specific Audit Procedures

Different compliance frameworks emphasize different aspects of access review controls.

SOX 404 ITGC Audit Procedures

SOX audits focus on IT General Controls supporting financial reporting accuracy. Access review controls prevent unauthorized or inappropriate access to financial systems and data.

Scope determination: Auditor identifies all applications supporting financial reporting—ERP systems, accounting software, payroll, consolidation tools, and reporting databases.

Only these in-scope systems require quarterly SOX reviews. Out-of-scope systems can use annual or semi-annual reviews.

Review frequency validation: SOX requires quarterly reviews for in-scope financial systems. Auditor verifies reviews occurred each quarter (Q1, Q2, Q3, Q4) with completion dates within fiscal quarter boundaries.

Delayed reviews—Q2 review completed in Q3—fail testing.
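The quarter-boundary test described above can be approximated with a few lines of code. This is a minimal sketch that assumes calendar-year fiscal quarters and a simple mapping of review period to completion date; both are assumptions, so adjust for your own fiscal calendar and data source.

```python
from datetime import date
from typing import Optional


def quarter_of(d: date) -> tuple[int, int]:
    """Return (year, quarter) for a date, assuming calendar-year quarters."""
    return d.year, (d.month - 1) // 3 + 1


def late_or_missing_reviews(
    completions: dict[tuple[int, int], Optional[date]]
) -> list[tuple[tuple[int, int], str]]:
    """Flag review periods with no completion date or a date outside the quarter.

    `completions` maps (year, quarter) to the review completion date,
    or None when no completion was recorded.
    """
    problems = []
    for period, completed_on in sorted(completions.items()):
        if completed_on is None:
            problems.append((period, "missing"))
        elif quarter_of(completed_on) != period:
            problems.append((period, "completed outside quarter"))
    return problems
```

A Q2 review with a July completion date would be flagged here, which matches the delayed-review failure described above.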

Certification owner qualification: Auditor evaluates whether certification owners possess appropriate knowledge to evaluate financial system access.

Finance managers reviewing accounting system access = appropriate. Engineering managers reviewing accounting system access = questionable.

Privileged access controls: Auditor samples privileged accounts and validates appropriate oversight. Who has admin access to financial systems? Were these accounts reviewed by qualified personnel?

Is privileged usage monitored?

We recently talked to an IT director in manufacturing. They ran meticulous quarterly reviews—high completion rates, thorough documentation, prompt remediation.

But during their SOX audit, the auditor found gaps in how they monitored privileged database access to their ERP system.

The review process never distinguished between standard user access and privileged accounts that could modify financial data directly. What seemed like a successful access review program required significant remediation.

Remediation verification: Auditor selects sample of revoked access and validates actual removal. Attempts authentication using test credentials. Reviews application logs confirming no post-revocation activity.

Insufficient remediation evidence = operating effectiveness failure.

Evidence retention: SOX requires 7-year evidence retention. Auditors request access review evidence from 2+ years prior.

Missing historical evidence = documentation deficiency, may require audit scope expansion or qualification.

Management certification: CFO and CEO certify internal controls under SOX 302 and 404. Access review controls factor into certification.

Auditors evaluate whether management assessment accurately reflects control effectiveness.

Common SOX findings you need to anticipate:

- Quarterly review frequency not maintained (reviews delayed or skipped)

- Incomplete scope (not all financial systems reviewed)

- Privileged access not sufficiently controlled or monitored

- Insufficient evidence of remediation execution

- Missing historical evidence beyond current year

SOC 2 Type II Audit Procedures

SOC 2 audits evaluate controls relevant to Trust Services Criteria: Security, Availability, Processing Integrity, Confidentiality, Privacy.

Access reviews address Security (CC6.2, CC6.3) and sometimes Confidentiality/Privacy.

Control description review: SOC 2 report includes detailed control descriptions. Auditor verifies access review control description accurately reflects actual implementation.

Description says "quarterly" but practice is "annual" = description exception.

Traditional GRC platforms generate control descriptions automatically based on policy settings, but actual practice often diverges from policy.

You configure quarterly reviews in the system, but operational reality means they happen every 5-6 months. The auditor compares your control description against what actually happened, and the mismatch becomes a finding.

We've seen this with multiple companies where the documented process looks perfect but execution tells a different story.
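One way to catch this drift yourself is to compare the gaps between actual review dates against the cadence your control description states. The sketch below is illustrative only: the function name and the idea of a maximum allowed gap in days are our assumptions, not a SOC 2 requirement.

```python
from datetime import date


def cadence_gaps(review_dates: list[date],
                 max_gap_days: int) -> list[tuple[date, date, int]]:
    """Return consecutive review pairs whose gap exceeds max_gap_days.

    Each result is (previous_review, next_review, gap_in_days).
    """
    ordered = sorted(review_dates)
    gaps = []
    for previous, current in zip(ordered, ordered[1:]):
        gap = (current - previous).days
        if gap > max_gap_days:
            gaps.append((previous, current, gap))
    return gaps
```

For a control described as "quarterly", a threshold somewhat above 90 days (to allow for scheduling slack) would surface the 5-6 month gaps mentioned above.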

Testing period coverage: Type II reports cover 6-12 month periods. Auditors sample 1-2 review cycles within the period.

Must demonstrate consistent execution across periods, not just single reviews.

System scope validation: SOC 2 defines specific systems in scope per trust services criteria. Access reviews must cover all in-scope systems. Out-of-scope systems don't require testing.

Auditor validates no scope gaps.

Complementary user entity controls (CUECs): Some access review responsibilities may fall to customers using your service. SOC 2 report specifies which controls require customer implementation.

Auditor validates appropriate CUEC documentation.

Testing sample sizing: SOC 2 uses risk-based sampling. Higher-risk controls (privileged access) get larger samples (25+ items).

Lower-risk controls (standard user access) get smaller samples (10-15 items).
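For internal pre-audit testing, you can mimic this kind of sampling with a seeded random draw so the selection is reproducible and can be documented. This is a sketch under our own assumptions: the helper name and the seeding approach are hypothetical, and your auditor's actual sampling methodology may differ.

```python
import random


def select_sample(population: list[str], sample_size: int, seed: int) -> list[str]:
    """Draw a reproducible random sample without replacement.

    The population is sorted first so the same seed always yields the
    same sample regardless of input order. If the population is smaller
    than the requested size, every item is returned.
    """
    rng = random.Random(seed)
    items = sorted(population)
    k = min(sample_size, len(items))
    return rng.sample(items, k)


# Sample sizes from the text: 25 for privileged access, 10-15 for standard access.
privileged_accounts = [f"admin{i:03d}" for i in range(60)]
sample = select_sample(privileged_accounts, 25, seed=2024)
```

Recording the seed alongside the sample lets anyone re-run the selection later and get the same items.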

Exception handling: SOC 2 reports include exceptions—deviations from control description. Access reviews that missed deadlines, had incomplete remediations, or skipped systems get documented as exceptions.

Multiple exceptions = qualified opinion.

Common SOC 2 findings:

- Control description doesn't match implementation (says quarterly, actually semi-annual)

- Inconsistent execution across testing period (Q1 thorough, Q3 rushed)

- Incomplete scope (some in-scope applications not reviewed)

- Excessive exceptions (>10% of sample failed testing)

- Missing evidence for specific testing period requested

ISO 27001 Audit Procedures

ISO 27001 certification audits evaluate Information Security Management System (ISMS) including access control processes.

Control A.9.2.5 specifically addresses access rights review.

Statement of Applicability (SoA) review: Auditors examine whether you claimed A.9.2.5 control applicable. If applicable, control implementation must be demonstrated.

If not applicable, justification required.

Control objective verification: A.9.2.5 objective: "Ensure authorized user access rights are reviewed at regular intervals."

Auditors validate the review process has achieved this objective.

Review frequency determination: ISO doesn't mandate specific frequency (unlike SOX quarterly requirement). You define appropriate frequency in ISMS documentation.

Auditor validates actual frequency matches documented policy.

Documented procedure examination: ISO requires documented procedures. Auditors review the access review procedure, evaluate its completeness, and validate that actual execution matches it.

Risk assessment alignment: ISO emphasizes risk-based approach. Auditor evaluates whether access review frequency and scope align with risk assessment findings.

High-risk systems should have more frequent reviews.
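A quick internal check for this alignment is to compare each system's documented review interval against the maximum interval your own policy allows for its risk tier. In the sketch below, the tier names and day limits are assumptions based on the "quarterly for high-risk, annual for low-risk" example earlier in this guide. ISO 27001 itself does not prescribe these numbers.

```python
# Maximum review interval per risk tier, in days (assumed policy values).
MAX_INTERVAL_DAYS = {"high": 92, "low": 366}


def misaligned_systems(systems: dict[str, tuple[str, int]]) -> list[str]:
    """Return systems whose review interval exceeds the limit for their tier.

    `systems` maps a system name to (risk_tier, review_interval_days).
    """
    flagged = []
    for name, (tier, interval_days) in systems.items():
        if interval_days > MAX_INTERVAL_DAYS[tier]:
            flagged.append(name)
    return sorted(flagged)
```

Any system this flags needs either a shorter review interval or a documented, risk-based justification for the current one.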

Continuous improvement evidence: ISO requires continual improvement. Auditors look for evidence that the access review process improves based on findings, metrics, and lessons learned.

Static process without improvement = ISMS weakness.

Traditional GRC approaches struggle here. They're designed for compliance checkbox completion, not continuous improvement.

You execute the same review process quarter after quarter without learning from findings or adapting to changing risk. The auditor sees no evidence of process refinement, no metrics analysis, no systematic improvement—just mechanical execution.

We've helped multiple clients transition from static review processes to adaptive frameworks that demonstrate the continuous improvement ISO requires.

Corrective action tracking: When access reviews identify issues, corrective actions are required.

Auditors validate corrective actions documented, assigned, tracked, and completed per ISO nonconformity process.

Common ISO 27001 findings:

- Documented procedure doesn't match actual practice

- Review frequency inappropriate for risk level

- No evidence of continuous improvement

- Corrective actions not tracked or completed

- Inconsistent execution across organization units

PCI DSS Audit Procedures

PCI DSS audits focus specifically on cardholder data environment (CDE) protection.

Access review controls fall under Requirements 7 (restrict access to cardholder data) and 8 (identify and authenticate access).

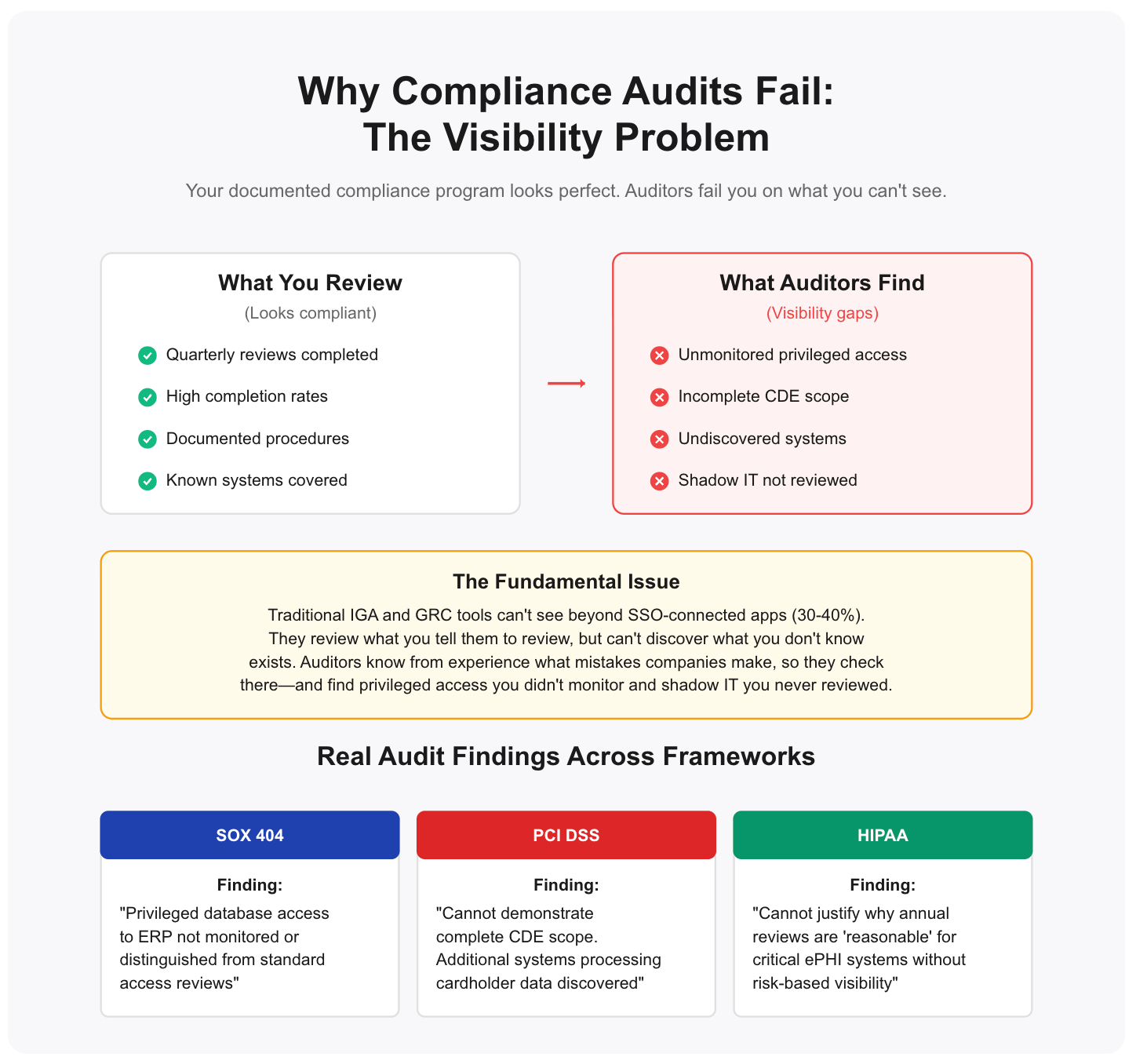

CDE scope boundary validation: Auditor validates clear definition of which systems, applications, networks fall within CDE scope. Access reviews must cover 100% of CDE scope.

Scope gaps = failing finding.

Visibility matters here. Most organizations define CDE scope through documentation—network diagrams, system inventories, data flow maps. But the auditor wants to know: How do you know this is complete?

We worked with a payments company that thought they had clear CDE boundaries until the auditor asked how they verified no additional systems processed cardholder data.

They couldn't demonstrate completeness, and the finding required expanding scope to include systems they'd missed.

Quarterly review requirement: PCI requires quarterly access review for CDE systems. Auditor validates four complete reviews within a 12-month assessment period.

Missing even a single quarter = compliance failure.

User access listing verification: Auditor requests current authorized user list for CDE systems. Samples users and validates access appropriate for job function.

Validates unnecessary access removed.

Privileged access focus: PCI emphasizes strong controls for privileged access (admins, root users).

Auditors pay particular attention to privileged account reviews, usage monitoring, and approval processes.

Vendor and third-party access: CDE often includes vendor access (payment processors, support vendors).

Auditors validate third-party access reviewed, time-limited, monitored, and removed when no longer needed.

Need-to-know principle validation: PCI requires access based on need-to-know and least privilege. Auditor samples users and evaluates whether access exceeds business need.

Over-permissioned users = finding.

Access removal verification: When employees change roles or leave the company, CDE access must be removed the same day.

Auditor samples terminated employees and role changes, validates immediate access revocation.
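You can run a similar check yourself by comparing the HR termination list against current account listings for each CDE system. This is a minimal sketch: the data shapes and names are hypothetical, and in practice the inputs would come from your HR system and each application's user export.

```python
def lingering_access(
    terminated_users: set[str],
    active_accounts: dict[str, set[str]],
) -> dict[str, set[str]]:
    """Find terminated users who still hold active accounts.

    `active_accounts` maps a system name to the set of user IDs
    that currently have access to it.
    """
    findings = {}
    for system, users in active_accounts.items():
        still_active = users & terminated_users
        if still_active:
            findings[system] = still_active
    return findings
```

An empty result is what you want to see. Anything else is a finding you can fix before the auditor samples it.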

Common PCI DSS findings:

- Quarterly review cadence not maintained (missing quarters)

- Incomplete CDE scope coverage (some CDE systems not reviewed)

- Privileged access not sufficiently controlled or monitored

- Vendor/third-party access not reviewed or time-limited

- Terminated employee access not removed within 24 hours

HIPAA Security Rule Audit Procedures

HIPAA Security Rule audits evaluate safeguards protecting electronic protected health information (ePHI).

Access review controls fall under Administrative Safeguards §164.308(a)(3) and (a)(4).

Access authorization review: §164.308(a)(4)(ii)(C) requires implementing procedures to review ePHI access. Unlike SOX or PCI, HIPAA doesn't mandate specific frequencies.

You determine appropriate review intervals based on risk analysis.

Workforce clearance procedures: §164.308(a)(3)(ii)(B) requires procedures for determining access authorizations.

Auditors validate that only workforce members with legitimate need access ePHI systems—no blanket access grants.

Termination procedures: §164.308(a)(3)(ii)(C) requires procedures for terminating access when workforce member employment ends.

OCR (Office for Civil Rights) auditors specifically test whether terminated employees lost ePHI access immediately.

Audit controls: §164.312(b) requires mechanisms to record and examine ePHI access activity.

Access review evidence must demonstrate you're actually examining audit logs, not just collecting them.

Risk-based approach validation: HIPAA emphasizes reasonable and appropriate security measures based on risk analysis.

Auditors evaluate whether review frequency and scope align with your documented risk assessment. High-risk ePHI systems (patient records databases, billing systems) should have more frequent reviews than low-risk administrative systems.

We worked with a healthcare provider running annual access reviews across all systems—EHRs, billing, scheduling, internal wikis.

During their OCR audit, the auditor questioned why systems containing thousands of patient records received the same review frequency as internal HR systems. The organization had no risk-based justification.

They couldn't demonstrate why annual reviews were "reasonable and appropriate" for critical ePHI systems. This triggered a broader investigation into their risk analysis process.

Business associate access: When business associates (vendors, contractors, consultants) access ePHI on your behalf, their access must be reviewed.

Auditors validate business associate agreements (BAAs) exist and access reviews include BA accounts.

Minimum necessary principle: §164.502(b) requires limiting ePHI access to minimum necessary for job function.

Auditors sample users and evaluate whether access exceeds clinical or operational need. Healthcare staff with unnecessary access to patient records = finding.

Sanction policy enforcement: §164.308(a)(1)(ii)(C) requires applying appropriate sanctions against workforce members who violate policies.

When access reviews identify violations (accessing own records, accessing celebrity patient records, accessing ex-spouse records), auditors verify sanctions were applied per policy.

Common HIPAA findings:

- Review frequency not justified by risk analysis

- Terminated workforce members retained ePHI access beyond separation

- No documentation of review execution (just policies)

- Business associate access not included in reviews

- Access exceeds minimum necessary without documented justification

- Policy violations identified but no sanctions applied

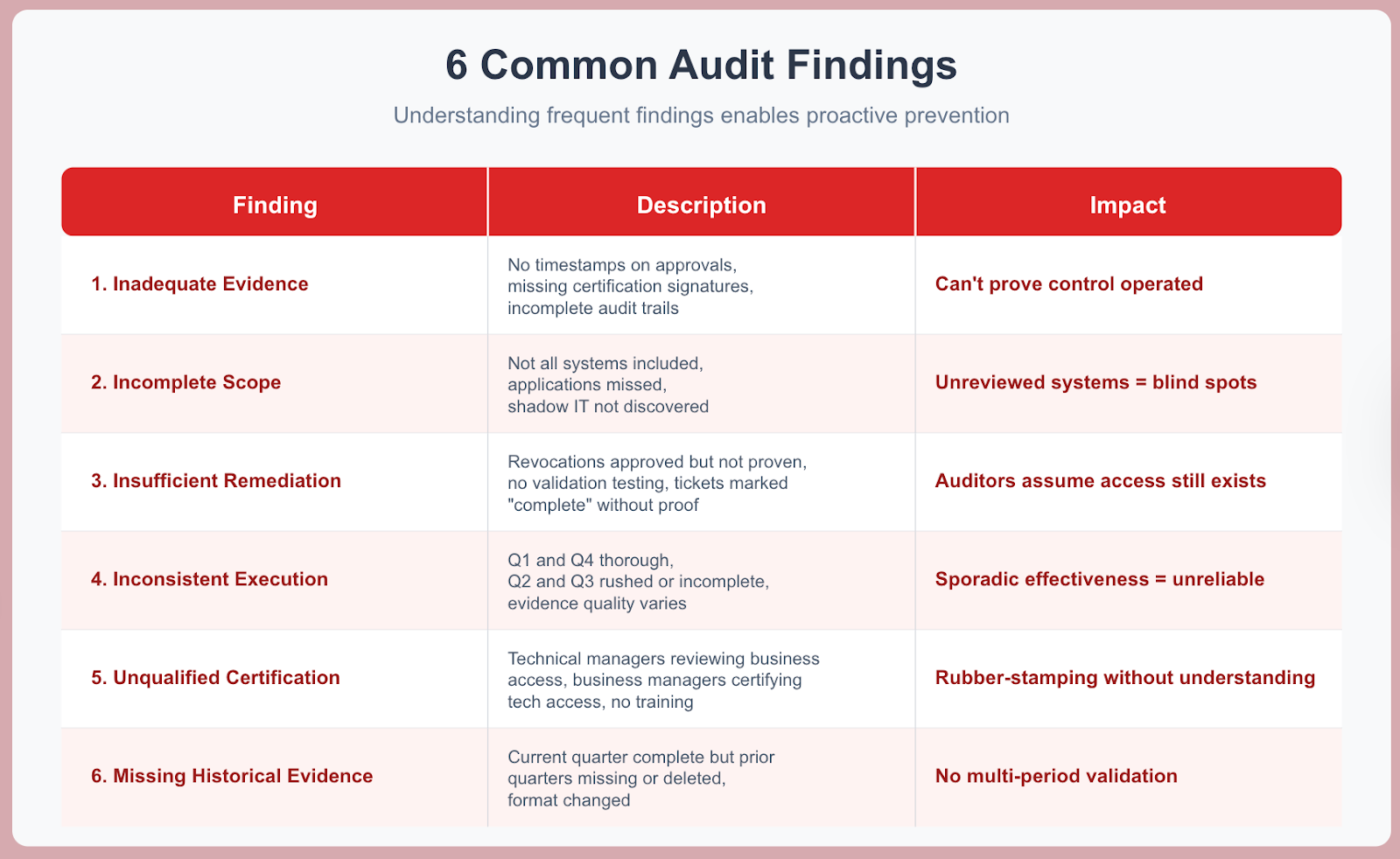

Common Audit Findings and Remediation

Understanding frequent audit findings enables proactive prevention.

Finding 1: Inadequate Evidence of Review Completion

No timestamps on approval decisions, missing certification owner signatures, incomplete audit trails, verbal confirmations without documentation. You can't prove the control operated even if it actually did. Insufficient evidence = failed test.

Implement platform capturing electronic signatures with timestamps. Configure audit logging for all certification decisions.

Retain system-generated reports, not manual summaries.

We've worked with hundreds of IT teams where the evidence problem isn't malicious—it's architectural. Manual processes, spreadsheet-based reviews, email-driven approvals don't generate audit-grade evidence by default.

You're trying to retrofit evidence onto a process not designed to produce it.

Finding 2: Incomplete Scope Coverage

Not all required systems included in review, applications missed, user populations excluded, privileged access not separately reviewed. Incomplete scope means control is only partially effective.

Unreviewed systems create security blind spots and compliance gaps.

Document complete inventory of in-scope systems. Validate inventory quarterly before review launch. Use discovery tools to identify shadow IT.

Create a checklist ensuring all system categories are covered.

Most user access review solutions assume you already know everything that needs reviewing. They're governance engines with no discovery element. You define scope manually, and any gaps in your knowledge become gaps in your review.

We've seen this repeatedly: one healthcare organization ran diligent quarterly reviews for three years, only to discover during an audit that they'd never included 15 SaaS apps that employees used and that contained PHI.
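The scope check itself is simple set arithmetic once you have three lists: the documented in-scope inventory, what discovery actually found, and what the review covered. The sketch below assumes those lists already exist as sets of system names. How you obtain the discovered list is outside this example.

```python
def scope_gaps(
    in_scope: set[str],
    discovered: set[str],
    reviewed: set[str],
) -> dict[str, set[str]]:
    """Compare inventory, discovery results, and review coverage.

    - not_reviewed: in-scope systems the review did not cover
    - not_in_inventory: discovered systems missing from the inventory,
      which need a scoping decision before the next review
    """
    return {
        "not_reviewed": in_scope - reviewed,
        "not_in_inventory": discovered - in_scope,
    }
```

Running this before each review launch gives you a dated completeness check to include in the evidence package.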

Finding 3: Insufficient Remediation Evidence

Revocations approved but no proof of execution, tickets marked complete without validation testing, revocations delayed beyond SLA without documented justification. Access review findings mean nothing if remediation didn't occur.

Auditors assume access is still inappropriate without proof of removal.

Implement validation testing attempting authentication post-revocation. Document test results with screenshots. Automate revocation through platform APIs (eliminates manual execution risk).

Track remediation metrics proving SLA compliance.
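As an illustration of SLA tracking, the sketch below flags revocations that were never executed or that took longer than the SLA for their access type. The SLA values reuse the example timelines from earlier in this guide (24 hours for privileged access, 5 days for standard). The record fields are hypothetical.

```python
from datetime import datetime, timedelta

# Example SLAs taken from the remediation timelines mentioned earlier.
SLA = {
    "privileged": timedelta(hours=24),
    "standard": timedelta(days=5),
}


def sla_breaches(items: list[dict]) -> list[str]:
    """Return IDs of revocations that missed their SLA.

    Each item needs: id, access_type, approved_at (datetime),
    and revoked_at (datetime, or None if not yet executed).
    """
    breaches = []
    for item in items:
        limit = SLA[item["access_type"]]
        revoked_at = item["revoked_at"]
        if revoked_at is None or revoked_at - item["approved_at"] > limit:
            breaches.append(item["id"])
    return breaches
```

Each breach should carry a documented justification, since delays without one are exactly what this finding describes.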

Finding 4: Inconsistent Execution Across Review Cycles

Q1 and Q4 thorough, Q2 and Q3 rushed or incomplete. Evidence quality varies. Some departments complete fully, others partially. Control must operate consistently to be reliable.

Sporadic effectiveness = unreliable control.

Standardize process across all departments and quarters. Use the same platform, templates, and evidence collection. Implement quality checks before considering a review complete.

Track consistency metrics to identify degradation early.

Finding 5: Unqualified Certification Owners

Technical managers reviewing business system access they don't understand. Business managers certifying developer privileged access without technical knowledge. Certification owners never trained on review criteria. Certification owners lacking appropriate knowledge make poor decisions.

Rubber-stamping occurs when reviewer doesn't understand access implications.

Match certification owner expertise to access type. Use multi-level reviews combining business and technical perspectives. Require certification owner training before first review.

Document reviewer qualifications.

Most organizations assign reviews based on org charts—manager reviews their direct reports' access. This seems logical until you consider what's being reviewed.

The sales VP has no basis to evaluate whether their team's Salesforce admin privileges are appropriate. The engineering manager can't determine if database read access violates compliance requirements.

The review structure assumes knowledge that doesn't exist.

Finding 6: Missing Historical Evidence

Current quarter evidence complete, but prior quarters missing. Evidence deleted after review instead of retained. Evidence format changed making historical comparison impossible. Auditors need multi-period evidence to validate consistent control operation.

Missing historical evidence = expanded audit scope, potential qualified opinion.

Implement evidence retention per regulatory requirements (SOX: 7 years, PCI: 1 year + 3 months online, SOC 2: report period + potential extension). Store evidence in a tamper-evident repository.

Never delete evidence, only archive.
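To make "tamper-evident" concrete, here is a minimal hash-chain sketch: each evidence record stores the hash of the previous one, so editing any earlier record breaks verification. This is an illustration of the general technique, not a description of how any particular platform stores evidence. A real repository would also need access controls and an externally anchored copy of the latest hash, because someone who can rewrite the whole chain can recompute it.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first record


def _digest(prev_hash: str, record: dict) -> str:
    """Hash the previous hash together with a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(chain: list[dict], record: dict) -> None:
    """Append a record, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, record),
    })


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash and confirm the links are intact."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Contrast this with the shared-drive example at the start of this guide: files there could be modified with no way to detect the change.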

Evidence Requirements: What Satisfies Auditors

Comprehensive evidence package prevents audit findings.

Pre-Review Evidence:

- Access review policy (effective date, approval, version)

- Review scope definition (systems, users, dates)

- Certification owner assignments (who reviews whom)

- Launch communication (notification emails with delivery confirmation)

- Training records (certification owner training dates, materials)

Review Execution Evidence:

- Certification decisions (who certified what, when, decision, justification)

- Complete audit trail (system-generated logs, immutable, timestamped)

- Escalations (overdue items, who escalated when)

- Exceptions (approvals granted despite policy, documented justification)

- Support tickets (questions asked, answers provided, timestamps)

Remediation Evidence:

- Approved revocations (what access, who approved revocation, when)

- Remediation tickets (what action, who executed, completion date)

- Validation testing (authentication attempt results, screenshots, test dates)

- SLA compliance (remediation time per item, SLA met/missed)

- Incomplete remediations (what couldn't complete, why, compensating controls)

Post-Review Evidence:

- Executive summary (completion metrics, findings, trends)

- Detailed report (by department, application, finding type)

- Metrics dashboard (completion rate, revocation rate, remediation time)

- Trend analysis (quarter-over-quarter comparison)

- Evidence archive (complete package stored per retention requirements)

Control Testing Evidence:

- Design documentation (how control should work)

- Operating effectiveness (proof control worked as designed)

- Sample results (auditor can select sample and validate same results)

- Exception documentation (why control deviated, approval, compensating controls)

Evidence must be:

- Complete: All required components present

- Accurate: Data correct and verifiable

- Contemporaneous: Created during activity, not retroactively

- Accessible: Can be retrieved within hours when auditor requests

- Immutable: Can't be altered after creation

- Retained: Stored per regulatory requirements

Missing any evidence component creates audit findings regardless of actual control effectiveness.

You can execute perfect access reviews and still fail the audit if you can't prove what happened.
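The evidence lists above translate naturally into an automated completeness check. The sketch below encodes the pre-review, execution, remediation, and post-review components as required items and reports whatever a given package is missing. The key names are our own shorthand for the items listed above, so rename them to match your repository.

```python
# Required evidence components per phase, using shorthand keys
# for the items listed in this section (names are illustrative).
REQUIRED_EVIDENCE = {
    "pre_review": {"policy", "scope_definition", "owner_assignments",
                   "launch_communication", "training_records"},
    "execution": {"certification_decisions", "audit_trail", "escalations",
                  "exceptions", "support_tickets"},
    "remediation": {"approved_revocations", "remediation_tickets",
                    "validation_testing", "sla_compliance",
                    "incomplete_remediations"},
    "post_review": {"executive_summary", "detailed_report",
                    "metrics_dashboard", "trend_analysis",
                    "evidence_archive"},
}


def missing_evidence(package: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return the required components absent from a package, by phase.

    `package` maps a phase name to the set of components present.
    """
    gaps = {}
    for phase, required in REQUIRED_EVIDENCE.items():
        absent = required - package.get(phase, set())
        if absent:
            gaps[phase] = absent
    return gaps
```

A check like this only confirms that each component exists. It says nothing about whether the content is accurate or contemporaneous, which still needs human review.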

Preparing for Successful Audits

90 days before audit:

- Conduct internal control testing using auditor testing procedures

- Identify evidence gaps and remediate before external audit

- Validate evidence retention complete for all required periods

- Update control documentation to reflect current state

30 days before audit:

- Prepare evidence package organized by control tested

- Create index mapping controls to evidence locations

- Validate all evidence accessible and retrievable

- Brief control owners on audit process and expected questions

During audit:

- Provide complete evidence packages promptly (within 24-48 hours of request)

- Answer auditor questions clearly and directly

- Document all auditor inquiries and responses

- Flag potential issues immediately rather than hoping auditor doesn't notice

Post-audit:

- Address all findings with documented remediation plans

- Implement preventive measures to avoid recurrence

- Update control documentation based on audit findings

- Track remediation completion with target dates

Internal testing before external audit catches most potential findings, enabling proactive remediation before formal audit opinion.

When you test internally first, you identify evidence gaps while there's still time to generate proper documentation. You discover scope issues before the auditor does.

You validate your remediation process actually works—that revoked access really is revoked, that tickets marked complete actually reflect completed work.

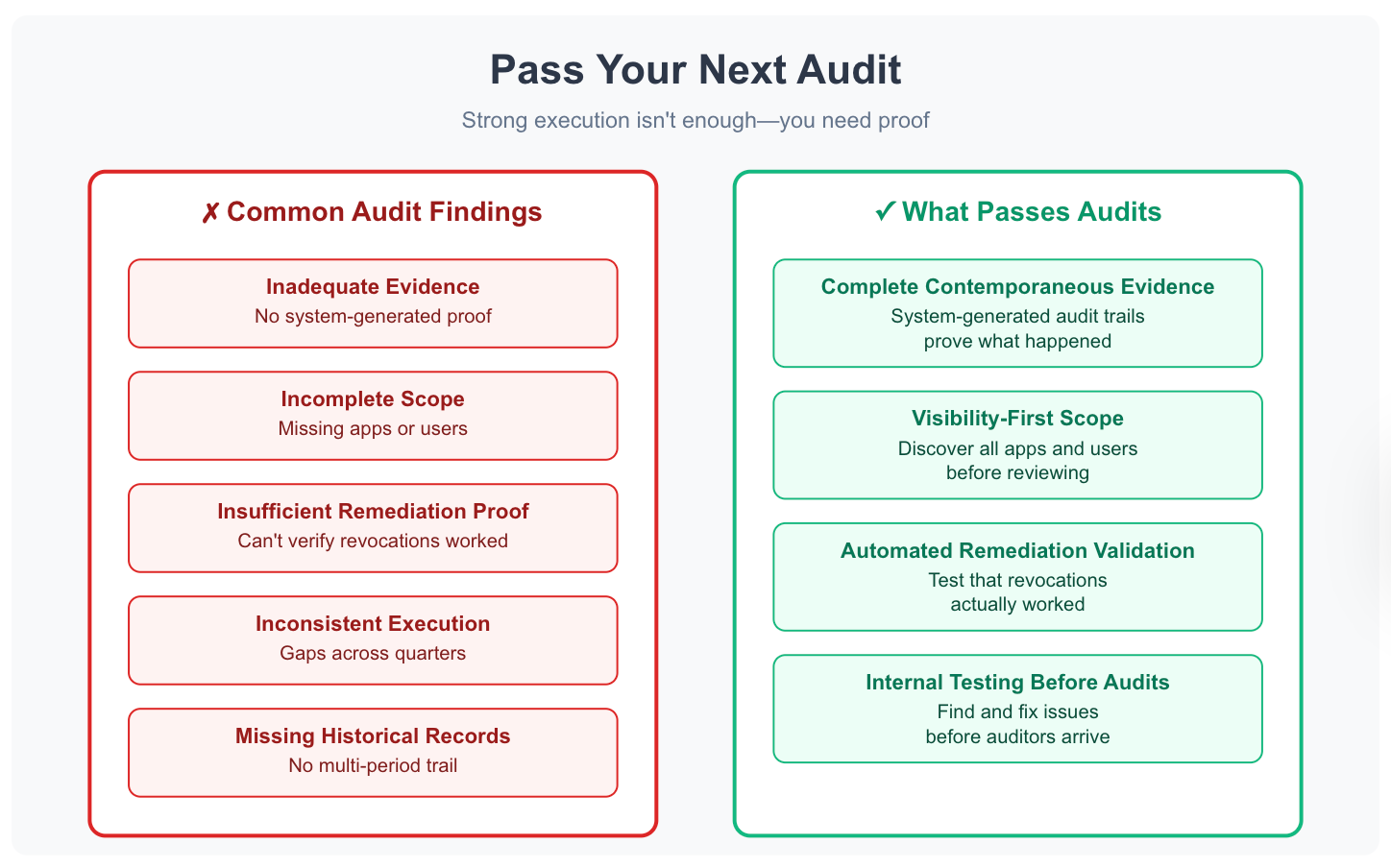

Pass Your Next Audit

Access review audit success requires demonstrating control effectiveness through verifiable evidence.

Strong execution isn't enough—you need proof.

Auditors evaluate both control design and operating effectiveness. They test using system-generated evidence, reperformance validation, and multi-period consistency.

Framework requirements vary: SOX demands quarterly financial system reviews, SOC 2 requires consistent execution across testing periods, ISO 27001 evaluates continuous improvement, PCI DSS mandates quarterly CDE reviews with immediate remediation, HIPAA requires risk-based review frequencies justified by documented analysis.

Common findings are preventable: inadequate evidence, incomplete scope, insufficient remediation proof, inconsistent execution, unqualified reviewers, missing historical records.

The solution is proactive evidence management and control documentation.

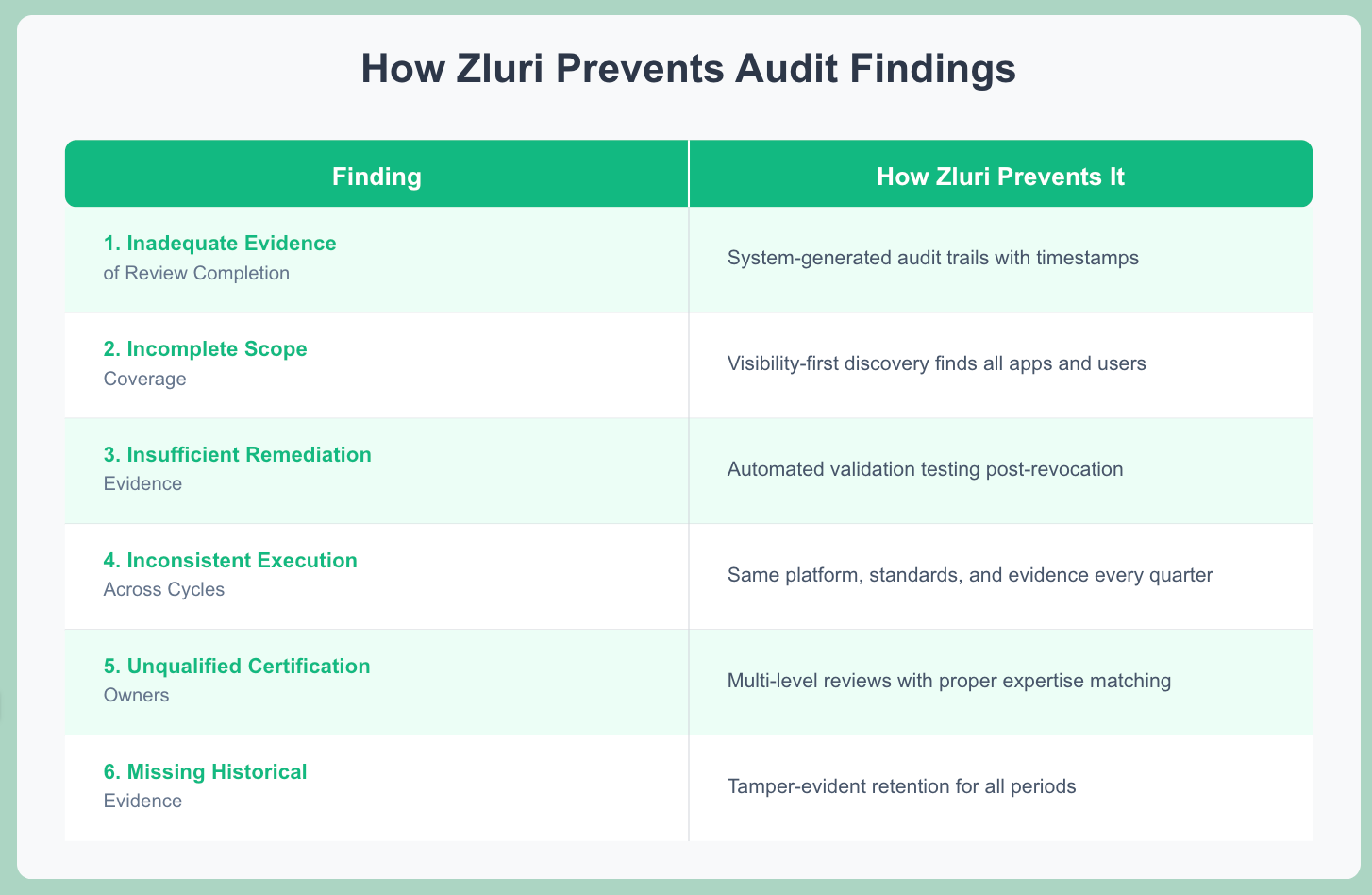

We built Zluri around this reality. Visibility-first access reviews ensure complete scope. Automated evidence collection generates audit-grade documentation by default.

System-generated audit trails prove what happened. Built-in compliance controls identify issues during review, not during audit.

Organizations passing audits consistently do three things: maintain complete contemporaneous evidence, conduct internal testing before external audits, remediate findings immediately.

Audit preparation isn't a last-minute scramble—it's continuous evidence management.

Execute effectively. Document clearly. Collect evidence comprehensively. Test internally. Pass audits.

Get Started

See automated audit evidence collection → Book a Demo
