Your CISO just announced: "We're implementing quarterly access reviews. The first one launches in 3 weeks."

You're the IT manager tasked with making it happen. You open a spreadsheet. Then you realize:

- You don't know all the apps your company uses (your IDP shows 80, but Finance's expense reports show 150+)

- You don't know who should review what (managers? app owners? security?)

- You don't know what "good" looks like (approve all active users? flag dormant accounts?)

- You definitely don't know what happens AFTER the review (who removes access? how?)

This is why most organizations haven't fully automated their access reviews. The path from "we should do this" to "we're actually doing this" isn't obvious.

The problem isn't understanding WHY you need access reviews. It's understanding HOW to launch one without drowning in complexity.

Here's the reality: Your first access review doesn't need to be perfect. It needs to be done. Organizations that wait for the "perfect process" never start. Organizations that start with a simple process improve over time.

In this article, we discuss how to launch your first access review in 3-8 weeks, depending on your approach.

We've helped 200+ mid-market companies (200-5,000 employees) implement their first automated access reviews.

We've sat with IT teams discovering 150+ shadow IT apps they didn't know existed, watched security leaders debate whether managers or app owners should review what, and seen compliance teams scramble to generate audit evidence after reviews were "done" but nothing was documented.

The process we'll walk you through works regardless of whether you're doing this manually, with basic automation, or with a user access review platform. The sequence stays the same—only the timeline and effort change.

As an IT manager, you'll learn how to drive this forward while partnering with Security and Compliance teams to make it successful.

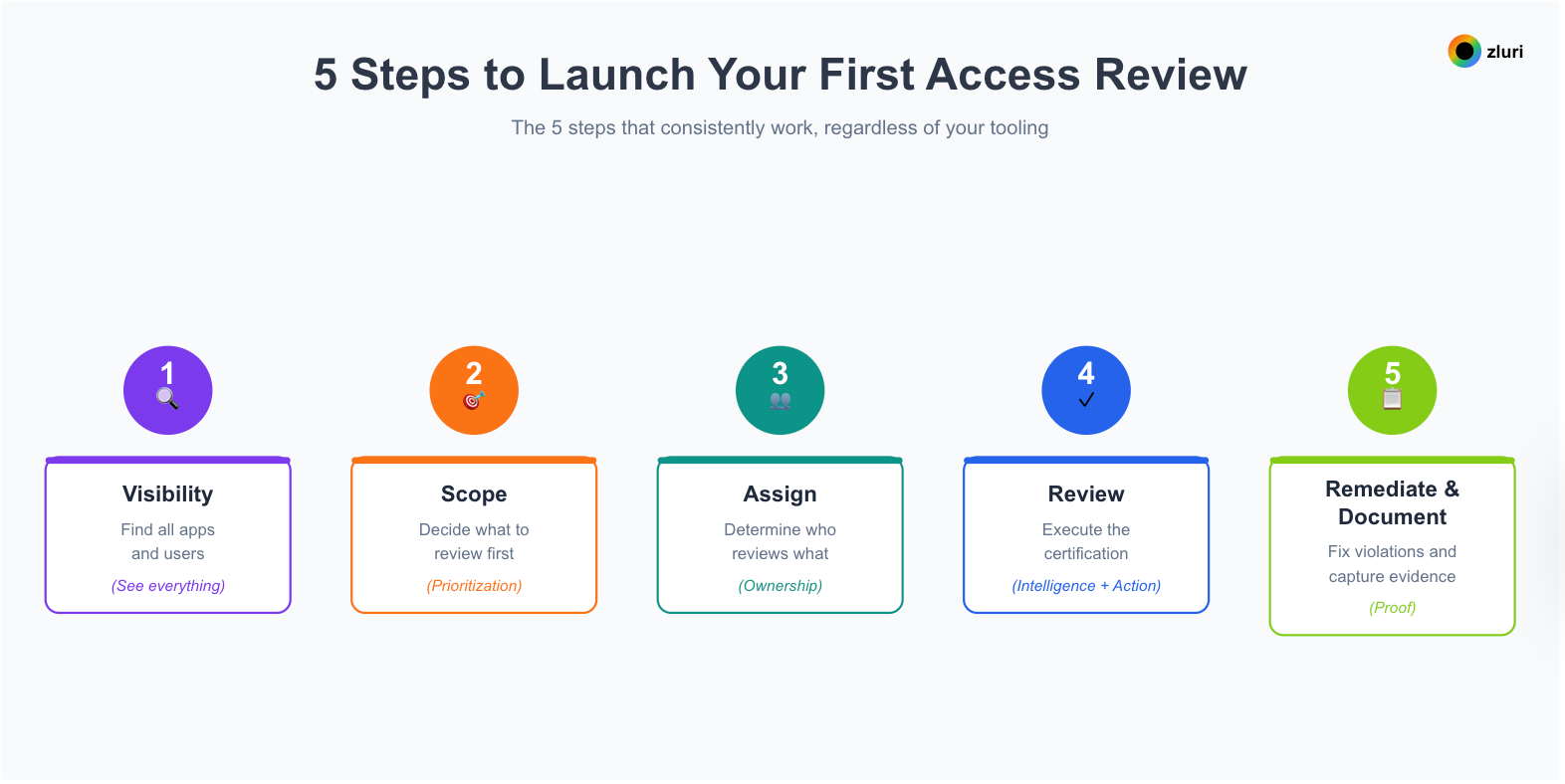

5 Steps to Launch Your First Access Review

The 5 steps that consistently work, regardless of your tooling:

- Visibility → Find all apps and users (See everything)

- Scope → Decide what to review first (Prioritization)

- Assign → Determine who reviews what (Ownership)

- Review → Execute the certification (Intelligence + Action)

- Remediate & Document → Fix violations and capture evidence (Proof)

Each step builds on the previous one. You can't review (Step 4) without knowing what to review (Step 2). You can't scope (Step 2) without visibility into what exists (Step 1). This sequence matters.

Timeline expectations:

- Manual approach: 6-8 weeks for first review

- Basic automation (IDP + spreadsheets): 4-5 weeks

- Modern platform (Zluri, SailPoint, Saviynt): 3 weeks

The framework is the same. The tools determine speed and completeness.

Step 1: Visibility (see everything)

The foundation: You can't review what you can't see

Your IDP (Okta, Azure AD, Google Workspace) says you have 80 apps. Your finance team's expense reports show 156 SaaS subscriptions. A combination of discovery methods (if you have them) detects 200+ apps in use.

Which number is right? All of them—depending on what you're measuring.

Visibility reveals the full picture: SSO-integrated apps (the 80 your IDP knows about), shadow IT (apps purchased with personal/corporate cards outside procurement), embedded apps (tools within tools—Salesforce has 5,000+ integrations), free trials (that became paid subscriptions without IT approval), developer tools (GitHub, AWS, cloud services accessed via personal accounts), and department purchases (Marketing bought HubSpot, Sales bought Gong, Support bought Zendesk).

Discovery approaches: manual vs automated

Manual discovery relies on three sources: your IDP's app catalog, Finance expense reports showing SaaS subscriptions, and department surveys asking "What apps does your team use?"

Expected coverage: 40-60% of actual apps. Time investment: 3-5 days of manual compilation.

Basic automation adds integration with finance systems and CASB logs to catch cloud app usage your IDP doesn't see.

Expected coverage: 50-75% of actual apps. Time investment: 1-2 days of setup plus 48 hours of data collection.

Comprehensive automation uses multiple discovery methods simultaneously. Next Gen IGA platforms like Zluri use 9 discovery methods: SSO/IDP (login events), Finance/Expense (transaction data), Direct Integrations (300+ pre-built connectors), Desktop Agents (apps installed on laptops), Browser Extensions (web-based SaaS usage), MDMs (mobile device apps), CASBs (cloud traffic monitoring), HRMS (employee data, department mapping), and Directories (user identities, group memberships).

Expected coverage: 99%+ of actual apps. Time investment: 4-6 hours of one-time setup, plus 72 hours of data collection.

If your first review only covers 80 apps but you actually have 200, you've reviewed 40% of your risk. The other 60%? Still exposed.

What you'll do in your first week: Building visibility and defining scope

If taking a manual approach: Export your app and users list from your IDP, request expense reports from Finance showing all SaaS subscriptions, and survey department heads asking "What apps does your team use?"

Compile everything into a master list—expect to find 2-3x what your IDP shows.
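If you're compiling the master list by hand, most of the work is reconciling the same app spelled three different ways. Here is a minimal Python sketch of that merge, assuming each source has already been exported to a plain list of app names. The function names and the sample data are hypothetical, not taken from any particular IDP or finance tool.

```python
import re

def normalize(name: str) -> str:
    """Lowercase and drop punctuation/spaces so 'Hub Spot' and 'hubspot' match."""
    return re.sub(r"[^a-z0-9]", "", name.lower())

def build_master_list(sources: dict[str, list[str]]) -> dict[str, dict]:
    """Merge app names from several sources into one de-duplicated inventory.

    Returns normalized name -> {"name": first spelling seen, "sources": set of sources}.
    """
    master: dict[str, dict] = {}
    for source, apps in sources.items():
        for app in apps:
            key = normalize(app)
            if not key:
                continue  # skip blank rows from messy exports
            entry = master.setdefault(key, {"name": app.strip(), "sources": set()})
            entry["sources"].add(source)
    return master

# Hypothetical sample data standing in for real exports.
sources = {
    "idp": ["Salesforce", "Slack", "GitHub"],
    "finance": ["salesforce", "HubSpot", "Gong"],
    "survey": ["Hub Spot", "Zendesk", "Slack "],
}
master = build_master_list(sources)

# Apps that Finance or a department reported but the IDP does not know about.
shadow = sorted(e["name"] for e in master.values() if "idp" not in e["sources"])
print(len(master), "apps total; not in IDP:", shadow)
```

Simple normalization like this will not catch renamed products or vendor-versus-product mismatches, so plan a quick manual pass over the merged list.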

If using automation: Enable your discovery engine (4-6 hours setup), connect your SSO, HRMS, and Finance systems, then deploy desktop agents if you want the most complete picture. Zluri's approach, for instance, lets this run for 72 hours while building your app inventory.

Most organizations discover 60-80 apps they didn't know existed. 20-30% of those have access to sensitive data.

Partner with Finance early—they often have the most complete picture of actual SaaS spending. Security and Compliance teams will want to see this full list for risk assessment.

Step 2: Scope (prioritization)

Don't review everything—start smart

Many teams make this mistake: trying to review 2,000 users across 200 apps in the first review, which leads to overwhelm, delays, and incomplete results.

The smart approach: Start with 20-30 high-risk apps in your first review. Expand scope in subsequent quarters.

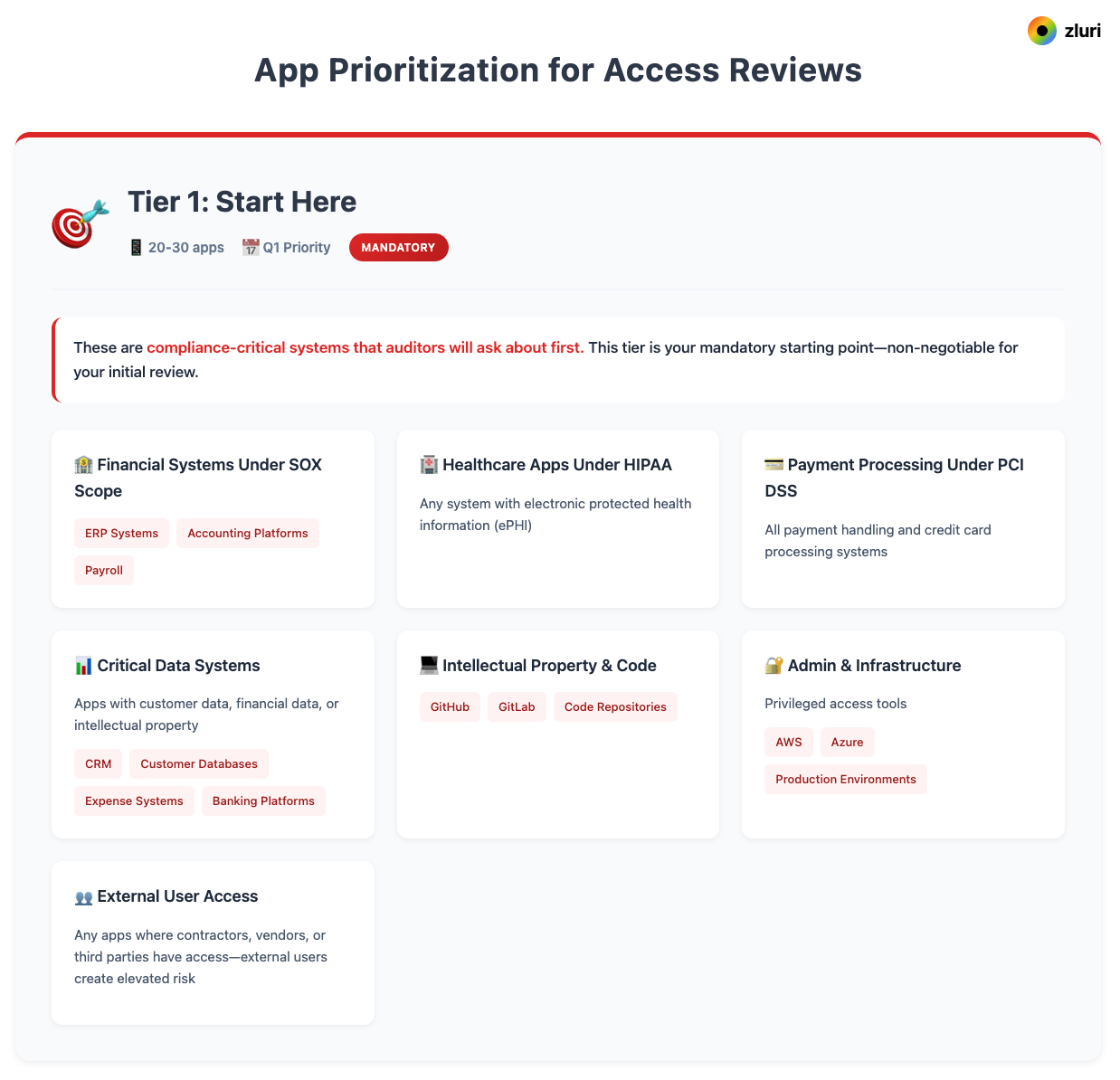

How to prioritize apps for first review

Tier 1 apps (20-30 apps) are your mandatory starting point. These are compliance-critical systems that auditors will ask about first: financial systems under SOX scope like your ERP, accounting platforms, and payroll; healthcare apps under HIPAA with electronic protected health information; and payment processing systems under PCI DSS.

Add any apps with customer data (your CRM, customer databases), financial data (expense systems, banking platforms), or intellectual property (GitHub, GitLab, code repositories).

Include admin and infrastructure tools where privileged access exists—AWS, Azure, production environments.

Finally, flag any apps where contractors, vendors, or third parties have access, since external users create elevated risk.

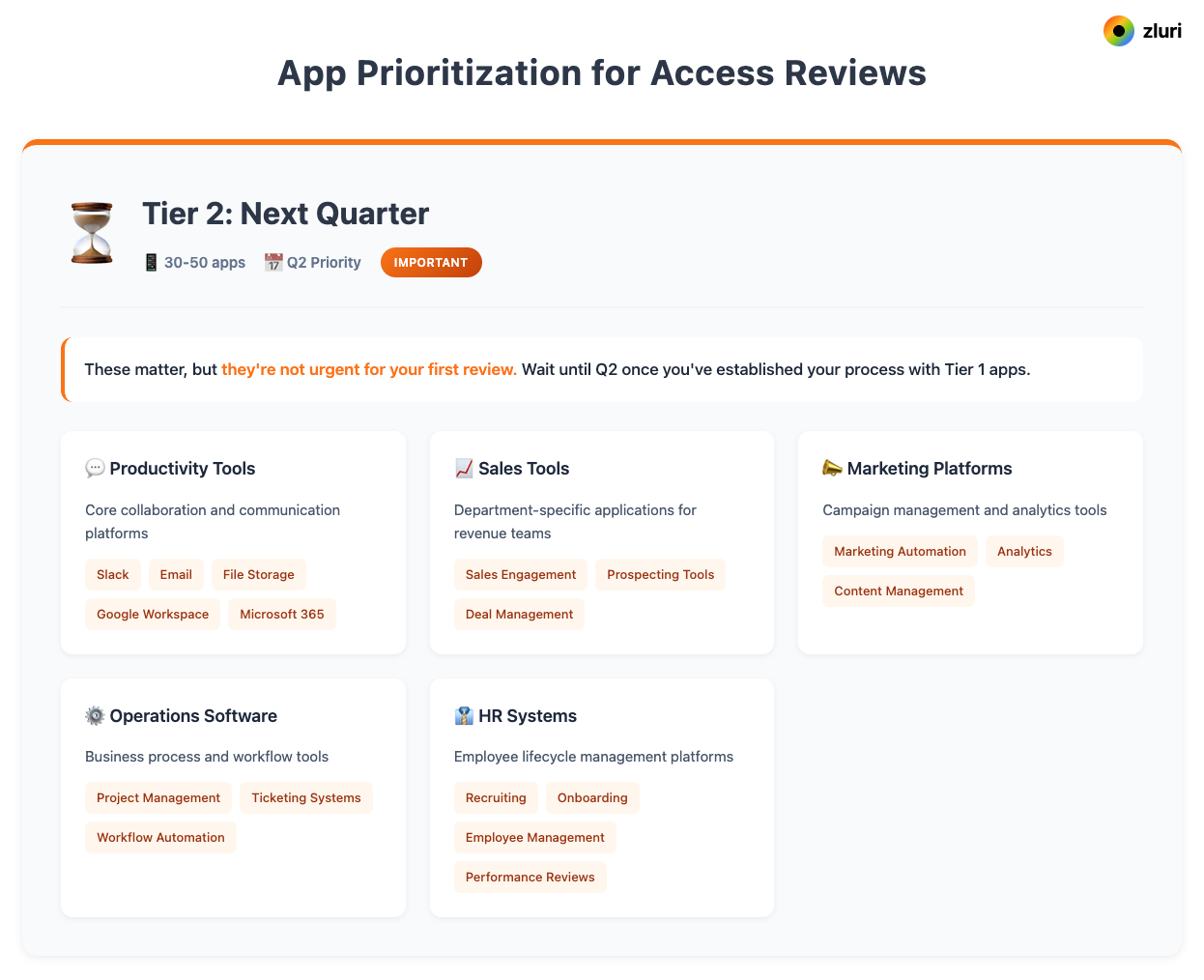

Tier 2 apps (30-50 apps) wait until Q2. These include productivity tools like Slack, email, and file storage; department-specific apps for sales, marketing, and operations; and HR systems for recruiting, onboarding, and employee management.

They matter, but they're not urgent for your first review.

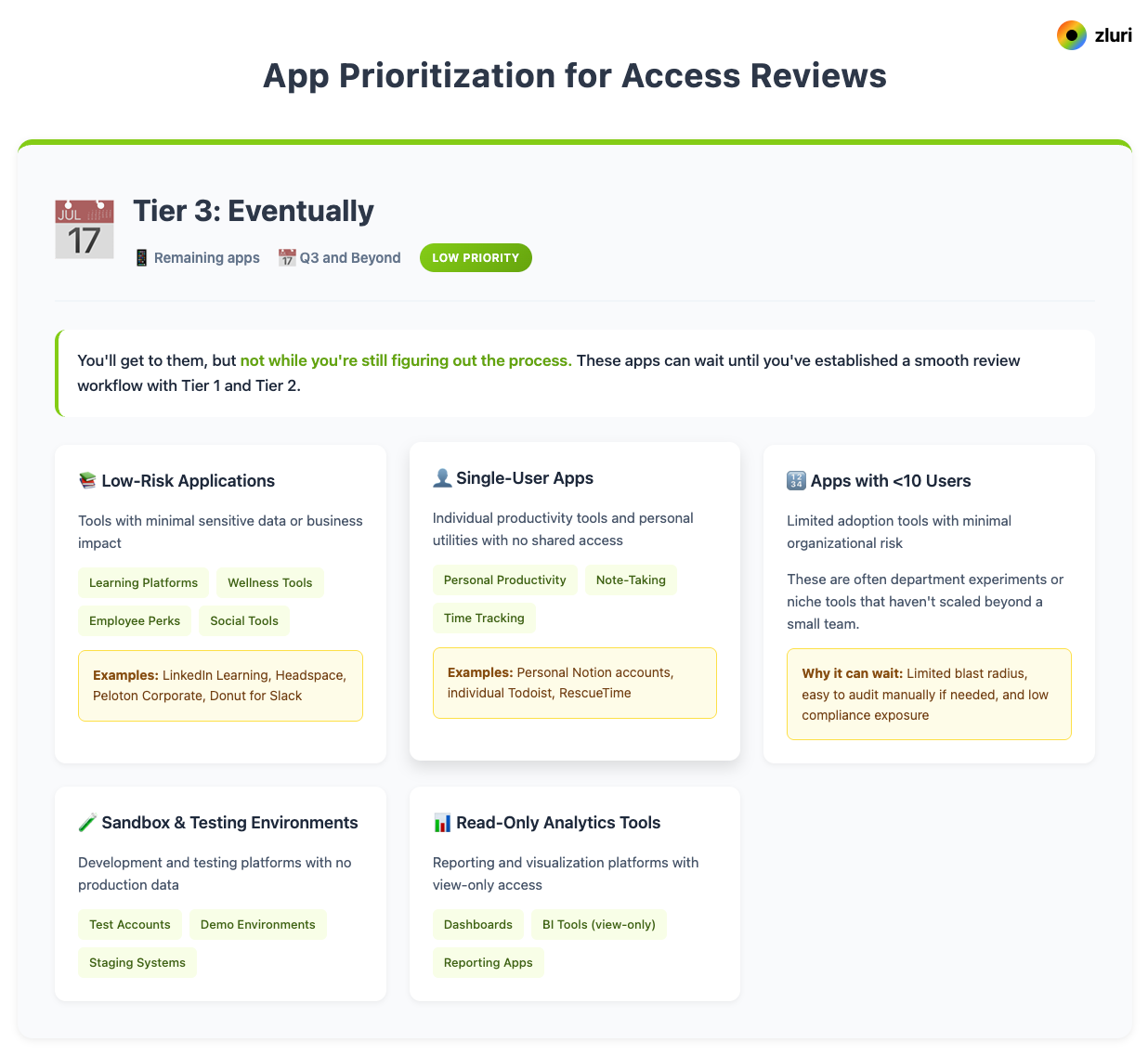

Tier 3 apps go to Q3 and beyond. Low-risk apps like learning platforms and wellness tools, single-user apps, and anything with fewer than 10 users can wait.

You'll get to them, but not while you're still figuring out the process.
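The tiering rules above can be written down as a small classifier, which helps when you're sorting a 150-row inventory. This is a rough sketch in Python: the field names are hypothetical, and it assumes that any Tier 1 trigger outranks the "fewer than 10 users" rule (the article doesn't say which wins when both apply).

```python
from dataclasses import dataclass

@dataclass
class App:
    name: str
    user_count: int
    compliance_scope: bool = False   # SOX, HIPAA, or PCI DSS
    sensitive_data: bool = False     # customer data, financial data, or IP
    privileged_access: bool = False  # admin or infrastructure tooling
    external_users: bool = False     # contractors, vendors, third parties
    low_risk: bool = False           # e.g. learning or wellness tools

def assign_tier(app: App) -> int:
    """Return 1, 2, or 3. Assumption: Tier 1 triggers take precedence."""
    if (app.compliance_scope or app.sensitive_data
            or app.privileged_access or app.external_users):
        return 1
    if app.low_risk or app.user_count < 10:
        return 3
    return 2

# Hypothetical inventory rows.
apps = [
    App("AWS", 40, privileged_access=True),
    App("Slack", 400),
    App("Wellness portal", 300, low_risk=True),
    App("Design plugin", 4),
    App("Vendor portal", 6, external_users=True),
]
for app in apps:
    print(app.name, "-> Tier", assign_tier(app))
```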

User-based vs group-based scoping

For your first review, you need to decide whether you're reviewing individual users or SSO groups.

Option A is user-based review—the traditional approach where you review individual users across your scoped apps. This works if you're a small company (under 100 people) or your first review absolutely needs to be comprehensive.

The effort is high: 500 users × 30 apps = 15,000 data points to review.

Option B is group-based review—the modern approach where you review SSO groups and their entitlements. Use this when you grant access via SSO groups (most organizations do).

The effort is dramatically lower: review 15-20 groups instead of 500 users, making it 10x faster.

Platform capability note: Not all access review platforms support group-based reviews. Some IGA platforms focus exclusively on user-level certification. Modern platforms like Zluri give multiple options, including group-based reviews, recognizing that most mid-market companies grant access through SSO groups, not individual provisioning.

The decision framework is straightforward. Do you grant access via SSO groups? Go group-based.

Is most access assigned individually? Go user-based.

Mix of both? Use group-based for standard users and user-based only for privileged accounts.
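If you want to make that call from data rather than gut feel, count how many access grants come through SSO groups versus individual assignment. The sketch below is one way to do it in Python. The 80% cutoff for "most" is an assumption chosen for illustration, not a number from this article, so tune it to your environment.

```python
def choose_review_mode(group_granted: int, individually_granted: int,
                       majority: float = 0.8) -> str:
    """Pick a scoping mode from grant counts. `majority` is a hypothetical cutoff."""
    total = group_granted + individually_granted
    if total == 0:
        raise ValueError("no access grants to classify")
    if group_granted / total >= majority:
        return "group-based"
    if individually_granted / total >= majority:
        return "user-based"
    return "hybrid"  # group-based for standard users, user-based for privileged accounts

print(choose_review_mode(950, 50))
print(choose_review_mode(100, 900))
print(choose_review_mode(600, 400))
```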

Finishing week 1: Identifying which apps to review and calculating effort

Identify your Tier 1 apps—aim for 20-30 high-risk apps. Pull user lists for those apps, then identify which SSO groups grant access to each.

Calculate your review scope using the formula: number of users (or groups) × number of apps = total data points.
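As a quick worked example of that formula in Python, using the numbers from this article (500 users, 30 apps, and the 25-app, 18-group scope statement):

```python
def review_data_points(subjects: int, apps: int) -> int:
    """Users (or groups) multiplied by apps gives total data points to review."""
    return subjects * apps

user_based = review_data_points(500, 30)    # 500 users x 30 apps
scoped_users = review_data_points(500, 25)  # 500 users x 25 apps
scoped_groups = review_data_points(18, 25)  # 18 SSO groups x 25 apps

print(user_based, scoped_users, scoped_groups)
```

Treat these as upper bounds: not every user or group has access to every app, so the real count is usually lower.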

Set a realistic target of 90% completion by Week 3 (automated) or Week 6 (manual).

A clear scope statement looks like this: "We're reviewing 25 apps, covering 500 users (or 18 SSO groups), focusing on finance, customer data, and admin access."

Work with Security and Compliance to validate your Tier 1 list. They'll have insight into regulatory requirements (SOX, PCI, HIPAA) that should drive prioritization.

Step 3: Assign (who reviews what and why it matters)

This is where IT teams often get stuck: Who should actually do the reviewing? You're the orchestrator, not the solo performer. You're coordinating, but you need the right people making access decisions.

Four certification models for distributing review responsibility

Manager certification puts direct managers in charge of reviewing their team members' access. This works well for standard user access and role-based permissions because managers know what their teams need day-to-day.

The limitation is that managers may not understand technical apps or security implications.

Application owner certification assigns app owners to review all users with access to their specific app. Use this for critical apps and compliance-sensitive systems where app owners understand app-specific permissions and data sensitivity.

The drawback is that app owners may not know all users' roles or business justification for access.

Security certification hands high-risk access reviews to your Security team—admin accounts, production access, and sensitive data. Security understands risk and compliance requirements, but they may not know the business justification for every access decision.

The hybrid model is recommended for first reviews. Managers review standard user access, app owners review admin or privileged access to their apps, and Security reviews external user access and policy violations.

This distributes the work appropriately while keeping oversight where it matters most.

Week 2: Mapping reviewers, configuring workflows, and briefing stakeholders

Map reviewers to your scope. For each app, determine who reviews what.

Example: Salesforce has 200 standard users (Sales Managers review), 5 admin users (Salesforce Admin + Security review), and 10 external partners (Security reviews).
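To see how the work lands on each reviewer type, you can encode the routing and tally the counts. The Python sketch below mirrors the Salesforce example; the routing table and key names are hypothetical labels for illustration.

```python
from collections import Counter

# Who reviews each kind of access, following the Salesforce example.
ROUTING = {
    "standard": ["manager"],
    "admin": ["app_owner", "security"],
    "external": ["security"],
}

def reviewer_workload(access_counts: dict[str, int]) -> Counter:
    """Tally how many access records each reviewer type must look at."""
    workload: Counter = Counter()
    for access_type, count in access_counts.items():
        for reviewer in ROUTING[access_type]:
            workload[reviewer] += count
    return workload

salesforce = {"standard": 200, "admin": 5, "external": 10}
workload = reviewer_workload(salesforce)
print(dict(workload))
```

A tally like this makes it obvious when one group, often Security, is about to be overloaded before the campaign launches.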

Configure your workflows. Most apps need single-level approval: Manager reviews → Approve/Deny → Remediation.

High-risk apps need multi-level approval: Manager reviews → Approve → Security validates → Final approval → Remediation.

Enable delegation so reviewers can reassign reviews if needed (manager on PTO, for example).
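The two approval paths and the delegation rule can be captured in a small configuration object. This is a minimal Python sketch with hypothetical stage names and reviewer IDs. It supports one hop of delegation only, which is an assumption made to keep the example short.

```python
from dataclasses import dataclass, field

@dataclass
class Workflow:
    app: str
    high_risk: bool
    delegates: dict[str, str] = field(default_factory=dict)  # reviewer -> stand-in

    def stages(self) -> list[str]:
        """Single-level for most apps, multi-level for high-risk apps."""
        stages = ["manager_review"]
        if self.high_risk:
            stages += ["security_validation", "final_approval"]
        return stages + ["remediation"]

    def reviewer_for(self, assigned: str) -> str:
        """Return the stand-in if the assigned reviewer has delegated."""
        return self.delegates.get(assigned, assigned)

slack = Workflow("Slack", high_risk=False)
aws = Workflow("AWS Production", high_risk=True, delegates={"maria": "deepak"})

print(slack.stages())
print(aws.stages())
print(aws.reviewer_for("maria"), aws.reviewer_for("li"))
```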

Brief your reviewers. Cover what they're reviewing (which apps, which users), what criteria to use (employment status, role fit, usage data), what decisions they can make (approve, deny, modify, escalate), and the deadline (end of Week 3 or Week 6).

Modern user access review tools can automate assignment—the system pulls reporting structure from HRMS, maps managers to their direct reports, assigns app owner reviews based on configured ownership, and sends automated briefing emails with reviewer dashboard links. Zluri handles this automatically, for example.

Manual approach: Create spreadsheet mapping apps → reviewers, send email briefs to each reviewer with their assigned apps, set up a shared folder for them to document decisions.

By the end of Week 2, every app in scope should have assigned reviewers who know what to do and when.

You're the orchestrator here, not necessarily the decision-maker. Partner with Security to define criteria for high-risk access. They'll appreciate being consulted and will be more engaged during the review.

Step 4: Review (executing certification with intelligence and action)

Now reviewers actually make decisions. As the IT lead, you're monitoring progress and handling escalations.

What reviewers need to see when making certification decisions

Reviewers need each user's access shown in context. For example, John Smith (Engineer) has access to:

- GitHub (Admin): daily usage, logged in today

- AWS Production (Admin): weekly usage, last login 2 days ago

- Salesforce (User): zero usage, no login in 90+ days

- Finance System (Viewer): never accessed, and flagged for external data access risk

The decision framework should be visual if possible. Green signals appropriate access that's actively used—approve these. Yellow flags anomalies like dormant accounts or unusual role fit—investigate before deciding.

Red marks high risk: external access, unused admin rights, or no business justification—deny these.

Context matters. Last login date makes denials obvious (never logged in? Easy denial).

Usage frequency shows the difference between daily access and never-accessed accounts. Risk scores combine factors like external user status plus admin access to flag elevated risk.

Employment status catches contractors, terminated employees, or role changes. Role fit analysis shows whether access matches job function.
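If your tooling doesn't color-code access for you, the green/yellow/red logic is simple enough to sketch yourself. The Python below is one possible reading of the framework, not a standard algorithm. The 90-day dormancy threshold, the field names, and treating the Finance System record as lacking a business justification are all assumptions made for illustration, and role fit is left out to keep it short.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

@dataclass
class AccessRecord:
    app: str
    role: str
    last_login: Optional[date]       # None means the user never logged in
    is_admin: bool = False
    is_external: bool = False
    has_justification: bool = True

def classify(rec: AccessRecord, today: date, dormant_days: int = 90) -> str:
    """Return 'green', 'yellow', or 'red' for one access record."""
    unused = rec.last_login is None or (today - rec.last_login).days >= dormant_days
    if rec.is_external or not rec.has_justification or (rec.is_admin and unused):
        return "red"      # high risk: lean toward deny
    if unused:
        return "yellow"   # dormant: investigate before deciding
    return "green"        # actively used: approve

today = date(2025, 1, 31)  # fixed date so the example is reproducible
records = [
    AccessRecord("GitHub", "Admin", today, is_admin=True),
    AccessRecord("AWS Production", "Admin", today - timedelta(days=2), is_admin=True),
    AccessRecord("Salesforce", "User", today - timedelta(days=120)),
    AccessRecord("Finance System", "Viewer", None, has_justification=False),
]
signals = [classify(r, today) for r in records]
print(list(zip((r.app for r in records), signals)))
```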

Manual approach: Export user-app assignments to spreadsheets, manually look up last login dates from each app, email reviewers the spreadsheets with columns for Approve/Deny/Notes.

Basic automation: Single dashboard showing user access across apps with login dates from IDP, reviewers click through assignments making decisions.

The four decisions

Reviewers make one of four choices.

- Approve means access is appropriate: it stays in place and is logged as certified.

- Deny means access isn't appropriate—this triggers remediation to remove access.

- Modify means the access level is wrong—change permission from admin to user, for example.

- Escalate means unclear business justification—send to app owner or security for review.
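Whatever tool records these decisions, each one should map to exactly one follow-up action. Here is a small Python sketch of that mapping. The action strings and the rule that a Modify decision must name the target permission level are assumptions added for illustration.

```python
from enum import Enum
from typing import Optional

class Decision(Enum):
    APPROVE = "approve"
    DENY = "deny"
    MODIFY = "modify"
    ESCALATE = "escalate"

def next_action(decision: Decision, new_role: Optional[str] = None) -> str:
    """Translate a reviewer decision into the follow-up step it triggers."""
    if decision is Decision.APPROVE:
        return "keep access; log as certified"
    if decision is Decision.DENY:
        return "queue access removal"
    if decision is Decision.MODIFY:
        if not new_role:
            raise ValueError("MODIFY needs the target permission level")
        return f"change permission to {new_role}"
    return "route to app owner or security"

print(next_action(Decision.DENY))
print(next_action(Decision.MODIFY, new_role="user"))
```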

Handling bulk reviews

The fatigue problem: Reviewing 500 users individually means clicking "Approve" 500 times, and reviewers stop reading after the first 50.

The solution is smart bulking. Modern platforms can auto-approve low-risk access: active employees who logged in within 30 days, whose role matches the access, with no AI-flagged anomalies.

Result: 70-80% auto-approved, reviewers focus on 20-30% anomalies.
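The auto-approve rule is a conjunction of those conditions, which you can sketch in a few lines of Python. This is an illustration under stated assumptions, not how any specific platform implements it. The field names and sample users are hypothetical, and the anomaly flag is assumed to come from whatever detection you already have.

```python
from typing import Optional

def can_auto_approve(active_employee: bool, days_since_login: Optional[int],
                     role_matches: bool, anomaly_flagged: bool,
                     max_idle_days: int = 30) -> bool:
    """True only when every low-risk condition holds."""
    return (active_employee
            and days_since_login is not None      # never logged in is not low-risk
            and days_since_login <= max_idle_days
            and role_matches
            and not anomaly_flagged)

# Hypothetical access records.
records = [
    {"user": "ana", "active_employee": True, "days_since_login": 2, "role_matches": True, "anomaly_flagged": False},
    {"user": "ben", "active_employee": True, "days_since_login": 45, "role_matches": True, "anomaly_flagged": False},
    {"user": "cho", "active_employee": True, "days_since_login": None, "role_matches": True, "anomaly_flagged": False},
    {"user": "dev", "active_employee": False, "days_since_login": 1, "role_matches": True, "anomaly_flagged": False},
    {"user": "eli", "active_employee": True, "days_since_login": 5, "role_matches": True, "anomaly_flagged": True},
]

auto, manual = [], []
for r in records:
    checks = {k: v for k, v in r.items() if k != "user"}
    (auto if can_auto_approve(**checks) else manual).append(r["user"])

print("auto-approved:", auto)
print("needs a human:", manual)
```

Even with auto-approval, log each auto-approved record along with the rule that approved it, so the decision is still auditable.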

Group-based review multiplies efficiency. Instead of reviewing 500 individual users, review 15 SSO groups where each group represents 20-50 users, then approve or deny at the group level.

Result: 10x faster, same security outcome.

Week 3: Launching the review campaign and driving to completion

Launch the review campaign—reviewers receive emails with dashboard links (automated) or spreadsheets (manual).

Reviewers process their assignments over the next few days while reminders go to incomplete reviewers (automated or manual), and IT and Security stay available for escalations.

Escalate incomplete reviews and push toward a target of 90%+ completion.

Expected outcome: 90%+ of access certified, 5-10% denied, 3-5% escalated for further review.

Don't let this become "IT makes all the decisions." When managers escalate unclear cases, loop in Security or the app owner. You're facilitating the process, not owning every judgment call.

Step 5: Remediate & Document (fixing violations and capturing audit-ready proof)

This is where IT teams traditionally get stuck with hundreds of tickets and manual work. Let's look at the options.

Why traditional remediation processes fail and what modern approaches offer

Traditional manual process: Review complete, violations identified. Export CSV of denied access. Create Jira tickets for each app. IT manually logs into apps to remove access.

Weeks later, 60% remediated, 40% "in progress."

Audit question arrives: "Prove all violations were fixed." Your answer: "We have tickets..." (not acceptable).

Basic automation (IDP-based): Review complete, violations identified. Platform triggers workflows to remove access from SSO-integrated apps. For non-SSO apps, still creating tickets.

The end result: SSO apps are remediated in days, while non-SSO apps (the majority of SaaS apps, given low SSO coverage) still take weeks.

Comprehensive automation: Review complete, violations identified. Click "Execute Remediation." Done.

Access removed automatically via API for 300+ integrated apps (Zluri supports this many), or guided manual actions for apps without APIs. Audit log captured with timestamp and proof.

Same audit question: "Prove all violations were fixed." Your answer: "Here's the complete report with timestamps" ✓

How different remediation approaches compare in time and completeness

For manual remediation: IT receives list of denials, logs into each app individually, navigates to user management, removes access, documents action in spreadsheet or ticket.

Time per app: 5-15 minutes. For 50 denials across 20 apps: 8-12 hours of manual work.

For API-based remediation: Platform makes API calls to remove access immediately, modify permission levels, or deprovision users entirely.

Every action creates proof—the audit log shows the exact timestamp. Time per app: Instant for integrated apps.

For apps without APIs: Even modern platforms can't automate everything. Platforms like Zluri provide guided manual actions—step-by-step workflows: Open app → Go to users → Find John Smith → Remove access → Mark complete.

Manual actions still get logged with timestamp and who performed it.

Creating an audit trail that satisfies compliance requirements

Every remediation action should create evidence:

- What access was removed (access to [App] for [User])

- Decision details (denied by [Reviewer] on [Date])

- Action taken (revoked by [System/IT] on [Date])

- Proof of removal (confirmation of action)

- Duration tracking (time from decision to remediation: instant vs. days)
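If you're tracking remediation manually, it helps to fix the shape of an evidence record up front so every row carries the same fields. A minimal Python sketch, assuming hypothetical field names and sample values:

```python
from dataclasses import dataclass, asdict
from datetime import datetime

@dataclass(frozen=True)  # frozen: evidence should not be edited after the fact
class RemediationEvidence:
    user: str
    app: str
    denied_by: str
    denied_at: datetime
    revoked_by: str
    revoked_at: datetime
    confirmation: str  # e.g. a ticket ID or an API response reference

    @property
    def hours_to_remediate(self) -> float:
        """Time from the deny decision to the actual removal, in hours."""
        return (self.revoked_at - self.denied_at).total_seconds() / 3600

# Hypothetical example row.
evidence = RemediationEvidence(
    user="john.smith",
    app="Salesforce",
    denied_by="sales.manager",
    denied_at=datetime(2025, 1, 20, 9, 0),
    revoked_by="it.admin",
    revoked_at=datetime(2025, 1, 21, 15, 0),
    confirmation="TICKET-EXAMPLE-1",
)
print(asdict(evidence)["app"], evidence.hours_to_remediate)
```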

What documentation auditors need and how to generate it efficiently

What you need for auditors:

The Certification summary shows totals: users reviewed, access points reviewed, approved count, denied count, modified count, completion rate.

The Remediation report tracks violations: identified count, remediated immediately, remediated within 48 hours, outstanding with reasons.

The Reviewer activity report documents who participated: managers, app owners, security team, completion rate, average time per decision, total time investment.

The Evidence package bundles everything: complete user list reviewed, all access certified or denied, timestamp of every decision, proof of remediation for denials.
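The certification summary is mostly counting, so it is easy to generate from a decision log even in a manual process. Here is a rough Python sketch, assuming each access point is recorded as one of the decision strings or as None when it has not been reviewed yet. The keys in the output are hypothetical.

```python
from collections import Counter
from typing import Optional

def certification_summary(decisions: list[Optional[str]]) -> dict:
    """Roll a list of per-access decisions up into summary totals."""
    counts = Counter(d for d in decisions if d is not None)
    total = len(decisions)
    reviewed = sum(counts.values())
    return {
        "access_points": total,
        "reviewed": reviewed,
        "approved": counts["approve"],
        "denied": counts["deny"],
        "modified": counts["modify"],
        "completion_pct": round(100 * reviewed / total, 1) if total else 0.0,
    }

# Hypothetical decision log: 20 access points, 2 still pending.
log = ["approve"] * 15 + ["deny"] * 2 + ["modify"] + [None] * 2
summary = certification_summary(log)
print(summary)
```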

Manual approach: You're building these reports from spreadsheets and ticket systems. Plan 1-2 days for report compilation.

Automated approach: Platforms generate these reports automatically. Some platforms store audit logs indefinitely, while others have 90-day limits—verify retention policies match your compliance needs.

Finishing week 3: Executing remediation, generating reports, and closing the review

Close the review campaign and execute remediation for all denials—verify everything completed.

Generate audit reports, review exceptions (any outstanding remediations), and document reasons for exceptions.

Archive the evidence package, brief leadership on results, and schedule the next quarterly review.

End result: 100% of denials remediated, complete audit trail captured, evidence package ready for auditors, first review DONE.

This is where automation pays for itself. If you're creating Jira tickets and manually removing access from 47 apps, you'll burn out before Q2. The time and audit-readiness case for platforms becomes obvious here.

Common first-time user access review mistakes

Trying to review everything is the first trap. The problem: 200 apps and 2,000 users in your first review creates overwhelm, delays, and incomplete results.

The fix: Start with 20-30 high-risk apps, expand in Q2.

No clear decision criteria leaves reviewers guessing. The problem: Reviewers don't know what "good" looks like, so they either approve everything or get paralyzed.

The fix: Provide clear guidance like "Approve if: active employee, logged in within 90 days, role fit matches access."

Manual remediation with no follow-through kills momentum. The problem: Reviews are done, but there's no system for removing access—tickets pile up, nothing happens.

The fix: Either commit to manual remediation with dedicated IT time, or implement automated remediation.

No reviewer training assumes everyone knows what to do. The problem: Managers don't understand what they're certifying or how their decisions impact security.

The fix: 15-minute briefing covering what you're reviewing, how to decide, and what happens after.

Unrealistic timelines set teams up to fail. The problem: "We'll review everything in 1 week" sounds ambitious but leads to rushed decisions and incomplete coverage.

The fix: 3-week timeline with automation, 6-8 weeks manual—both are realistic and achievable.

Forgetting documentation means you did the work but can't prove it. The problem: Review happened but no audit trail was captured—auditors ask for evidence, you have none.

The fix: Document everything as you go, whether manual or automated.

IT doing everything alone leads to burnout. The problem: IT owns discovery, review decisions, remediation, and documentation, which is an impossible workload for one team.

The fix: Partner with Security for criteria and risk assessment, with Compliance for evidence requirements, with managers for actual review decisions.

Your first review won't be perfect—and that's okay

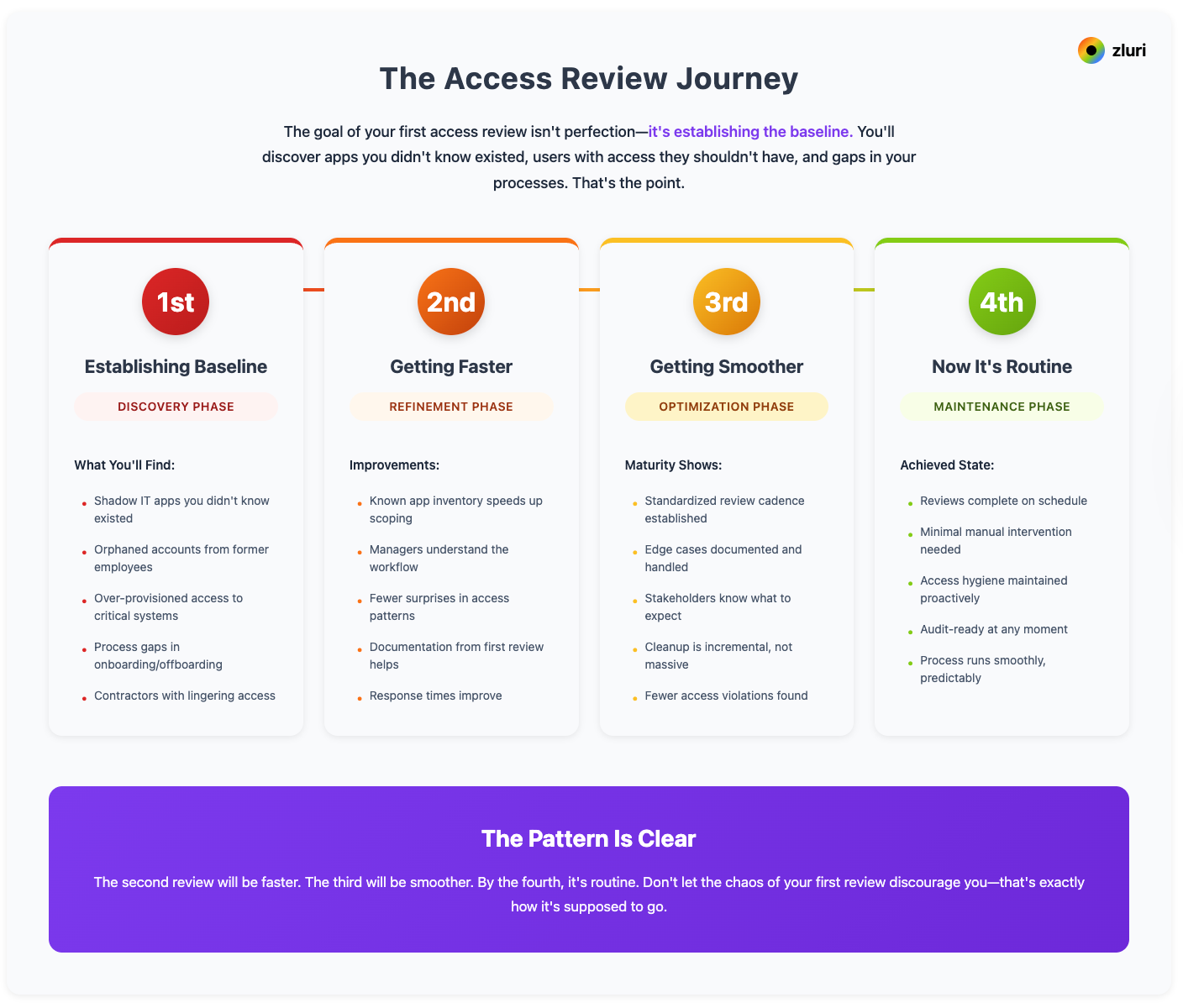

The goal of your first access review isn't perfection—it's establishing the baseline. You'll discover apps you didn't know existed, users with access they shouldn't have, and gaps in your processes.

That's the point. The second review will be faster. The third will be smoother. By the fourth, it's routine.

The 5 steps:

- Visibility → Find what exists (see everything)

- Scope → Prioritize high-risk (focus)

- Assign → Determine ownership (accountability)

- Review → Execute certification (intelligence + action)

- Remediate & Document → Fix and prove (compliance)

Time to start: Your first review can launch in 3 weeks with a modern platform, 4-5 weeks with basic automation, or 6-8 weeks manually.

The best time to start was last quarter. The second best time is now.

For IT teams: You're the orchestrator, not the solo performer. Partner with Security for risk assessment, with Compliance for requirements, with managers for decisions.

You enable the process—you don't have to own every piece.

Choosing your approach

You have three paths forward:

Manual approach works for first reviews, especially if you're under 200 employees and have fewer than 20 critical apps. Plan 6-8 weeks, accept 40-60% visibility, and commit IT resources for remediation.

Basic automation through your existing IDP (Okta Governance, Azure AD Access Reviews) covers SSO apps well but misses shadow IT. Plan 4-5 weeks, expect 50-75% visibility, most remediation is still manual.

Next Gen IGA platform (Zluri) provides comprehensive visibility across SSO and non-SSO apps, group-based reviews, automated remediation, and audit-ready documentation. Plan 3 weeks, expect 99%+ visibility, minimal manual work.

The framework works regardless of your choice. The tooling determines speed, completeness, and audit-readiness.

Next steps

If you want to see what full automation looks like → Explore Zluri's Access Review platform
