The numbers tell a story IT teams don't want to hear: Product and Engineering teams use 200+ unique AI applications, Sales and Marketing have adopted 170+, and Customer Success and Support operate with 140+.

These aren't our estimates. They're findings from Zluri's State of AI in the Workplace 2025 report, which analyzed 3,000+ AI applications across 160+ organizations and 400,000+ users.

What makes this more than just a big number is that each department adopts AI differently.

- Engineering gravitates toward code generation tools.

- Marketing wants content creation platforms.

- Support needs voice recognition and conversational intelligence.

- Design demands image and video generators.

You can't use a one-size-fits-all approach because what works for finding Engineering's tools will miss Marketing's. This isn't traditional shadow IT where a few rogue SaaS apps slip through procurement.

This is decentralized, department-specific adoption happening at a scale and speed that makes traditional discovery methods obsolete.

The question isn't whether AI sprawl exists in your organization. It's whether you have the right detection and control mechanisms to manage it.

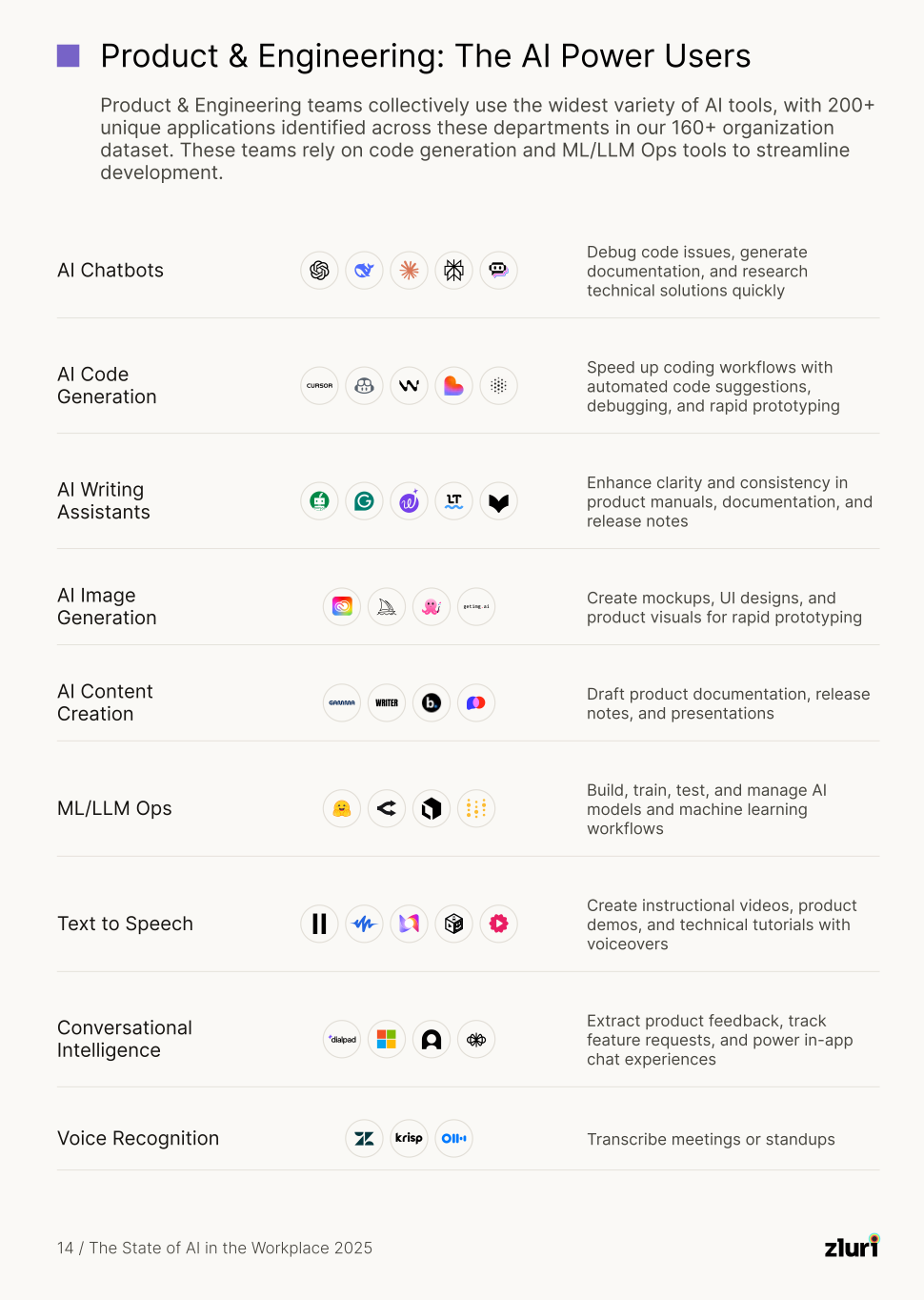

Why Engineering Uses 200+ AI Tools

Engineering teams aren't waiting for IT approval. They can deploy tools themselves, evaluate them technically, and integrate them into workflows before IT knows they exist. The culture drives adoption: move fast, ask forgiveness later.

Their top AI categories

- AI Code Generation (Cursor, GitHub Copilot, Windsurf, Lovable, Blackbox.ai)

- AI Chatbots (ChatGPT, Claude, DeepSeek)

- ML/LLM Ops platforms

- AI-powered documentation tools

Why they end up with 200+ tools

The reason is specialization. GitHub Copilot works well for Python, while Cursor is better for React. Windsurf excels at full-stack applications, and Lovable is optimized for rapid prototyping. Each tool has a niche.

The problem accelerates because Engineering isn't one team. It's frontend, backend, mobile, DevOps, data engineering, ML/AI, and QA across multiple locations. Each sub-team develops its own preferences. Multiply that across product lines and you can see how the count reaches 200+ tools.

How they bypass detection

Engineers deploy models directly on cloud platforms via API keys, bypassing identity systems entirely. They expense tools as "cloud services" or pay with personal credit cards. They use free tiers that never touch corporate systems.

The risk profile

IP exposure is the primary concern since engineers paste proprietary algorithms, internal APIs, and architectural designs into prompts. Security vulnerabilities emerge from AI-generated code that hasn't been reviewed. Custom model sprawl creates governance nightmares as each team spins up their own LLM with inconsistent security controls.

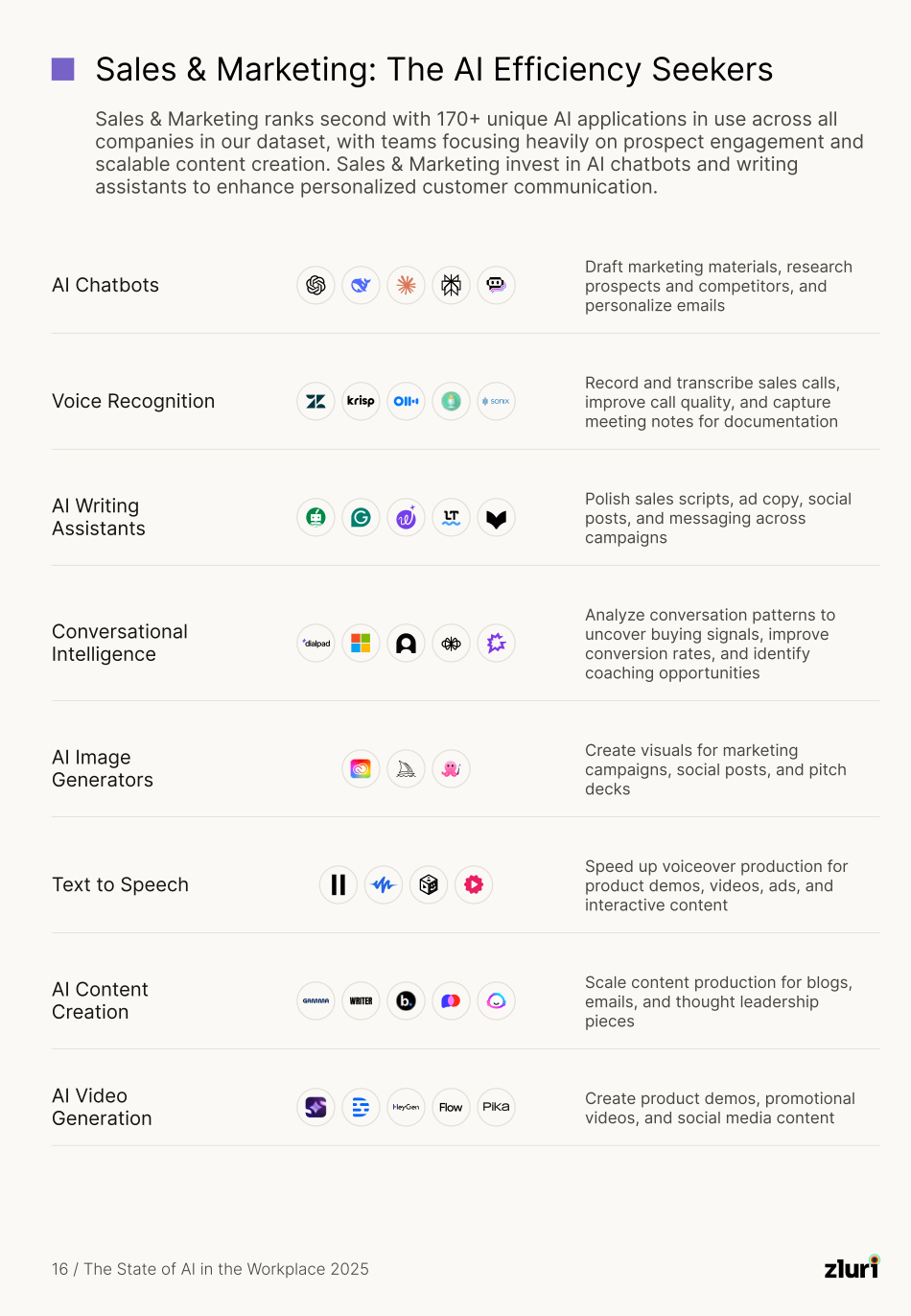

Why Marketing Uses 170+ AI Tools

Marketing and Sales face relentless pressure for personalization at scale. Budget autonomy accelerates adoption because Marketing often controls significant software budgets. When they find a tool that solves a problem, they buy it directly.

Their top AI categories

- AI Writing Assistants (QuillBot, Grammarly, Wordtune)

- AI Chatbots (ChatGPT, Claude, Perplexity)

- AI Content Creation (Gamma, Writer, Beautiful.ai)

- Conversational Intelligence (Dialpad, Gong.io)

- AI Agents for outreach (Clay)

The specialization problem

One VP of Business Systems at a major IT consulting firm captured it perfectly:

"Gemini and ChatGPT are great for generic tasks, but they falter for specific marketing content generation. When employees find specialized marketing platforms trained specifically for content creation, they gravitate to those tools that save them time, and we're right back where we were."

Even when IT provides approved general-purpose AI, marketing continues to adopt specialized alternatives. Generic chatbots don't cut it.

How they bypass detection

Marketing expenses AI as "software" or "marketing tools" in broad categories that hide specific purchases. They use personal Gmail accounts for quick content generation. They enable embedded AI features in existing tools (Notion AI, HubSpot AI) that don't trigger new authentication events.

The risk profile

Customer data leakage is the primary exposure. Sales reps paste prospect information, call transcripts, and strategic account plans into AI tools. Brand inconsistency emerges when different team members use different AI tools with different outputs. Compliance violations occur when AI tools process customer data without proper safeguards.

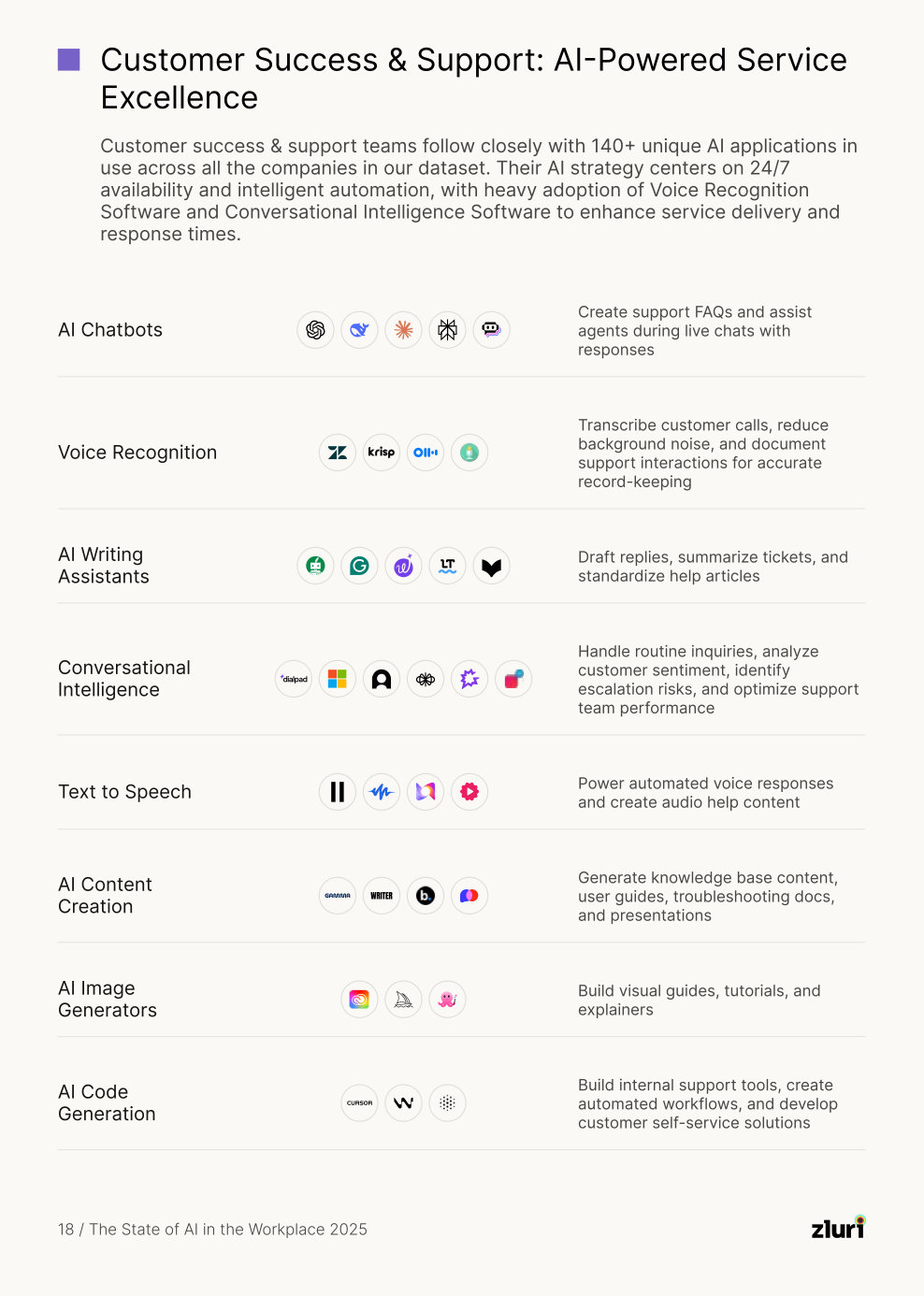

Why Support Uses 140+ AI Tools

Support teams face 24/7 availability requirements with finite headcount. They can't hire fast enough to meet scale, and response time metrics are unforgiving.

Their top AI categories

- Voice Recognition (Zendesk, Krisp, Otter.ai)

- Conversational Intelligence

- AI Chatbots

- AI Writing Assistants

Why they quietly exceed approved tools

Support teams use the approved helpdesk AI for 80% of queries, but when complex technical issues arise, agents open ChatGPT in another tab to draft clearer explanations. Each tool solves a specific pain point that the approved solution doesn't address.

How they bypass detection

Support agents use personal accounts (Gmail + ChatGPT) for quick questions, creating zero corporate trail. They install browser extensions that don't require IT approval. They access AI through mobile apps on personal devices.

The risk profile

PHI and PII exposure is critical because Support handles patient data, personal information, and financial details. When that data flows into unsanctioned AI tools, you risk violating HIPAA, GDPR, or industry-specific regulations. Quality control gaps emerge when AI-generated responses go directly to customers without review.

Why Business Operations Uses 100+ AI Tools

Business Operations teams handle cross-functional strategy, requiring tools that span market research, competitive analysis, financial modeling, and executive presentations.

Their top AI categories

- AI Chatbots for market research and strategic analysis

- AI Writing Assistants for polishing executive communications

- AI Content Creation for business proposals and presentations

- Voice Recognition for meeting documentation

Why they accumulate 100+ tools

Operations sits at the intersection of every department. They need Finance's modeling tools, Marketing's presentation platforms, Sales' competitive intelligence tools, and Engineering's data analysis capabilities. Each cross-functional project introduces new AI requirements.

How they bypass detection

Operations expenses AI tools under vague categories like "business software" or "strategic consulting tools." They use embedded AI features in existing platforms (Microsoft Copilot, Notion AI, Salesforce Einstein) that don't trigger new procurement approvals. They access AI through professional subscriptions expensed as "research services."

The risk profile

Competitive intelligence leakage is the critical exposure. A strategist researching market entry might paste your expansion plans into an AI tool. An executive preparing for a board meeting might upload confidential financial projections for summarization. Operations handles M&A discussions, strategic initiatives, and financial performance data, all of which create significant exposure when they flow into shadow AI.

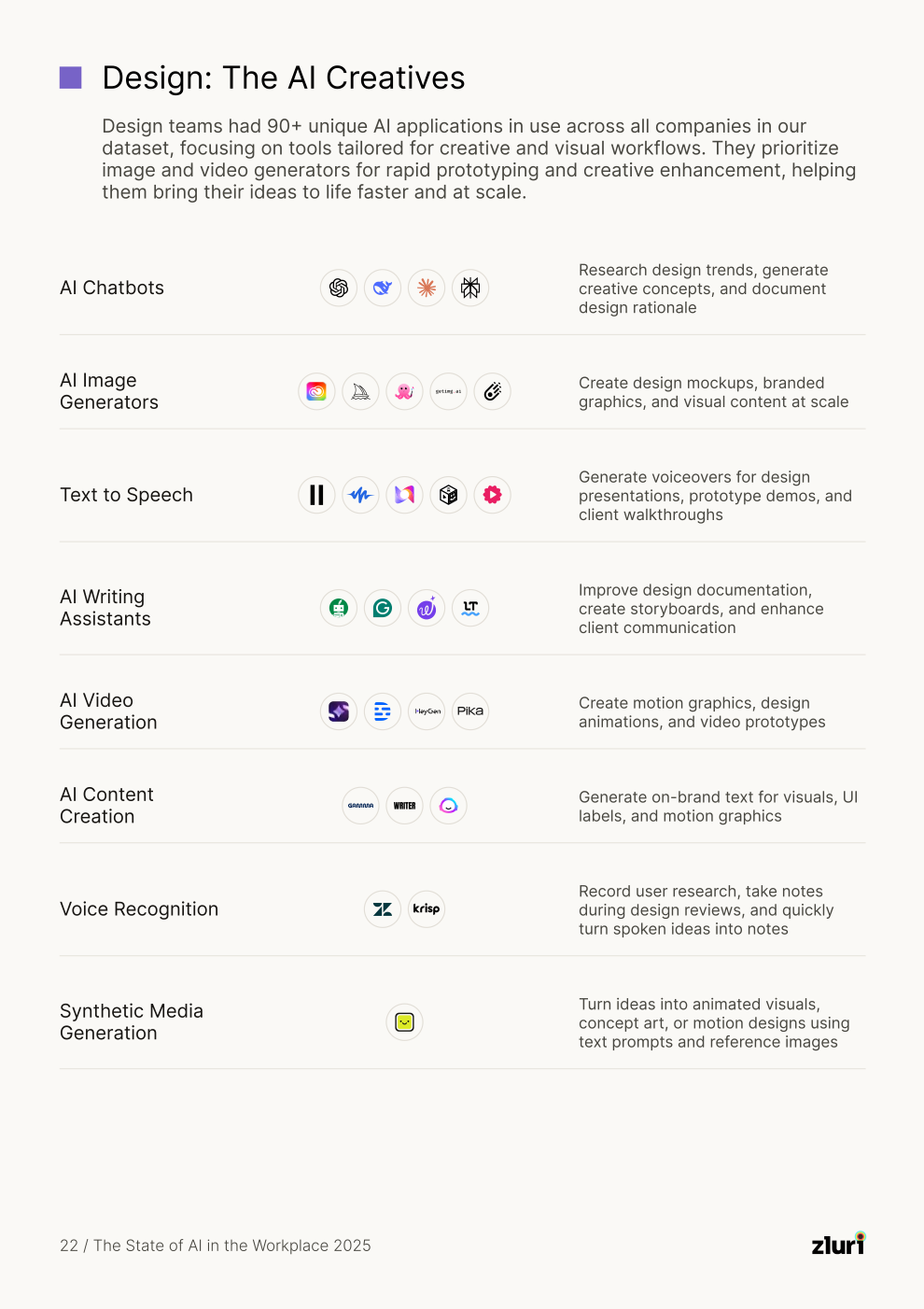

Why Design Uses 90+ AI Tools

Design teams focus on visual workflows and creative prototyping, requiring specialized tools for image generation, video editing, and rapid concept testing.

Their top AI categories

- AI Image Generators (Adobe Creative Cloud, Midjourney, Gencraft)

- AI Video Generators (Runway AI, Descript, HeyGen)

- AI Chatbots for design research and concept generation

- Text to Speech for prototype voiceovers

Why they accumulate 90+ tools

Creative work demands specialization. Midjourney excels at concept art while Adobe Firefly integrates with existing workflows. Runway AI handles video while Descript manages audio. Each designer develops preferences based on their specific creative style and project requirements.

How they bypass detection

Designers expense AI tools as "creative software" or "design resources." They use personal accounts for rapid prototyping before formal project approval. They access AI through free tiers or trials that never hit corporate procurement. Many design AI tools operate as plugins or extensions within existing creative suites.

The risk profile

Copyright infringement is the primary concern: it arises when AI-generated designs incorporate protected elements without proper attribution. Brand inconsistency occurs when different designers use different AI styles and outputs. IP exposure happens when proprietary design systems, brand guidelines, or unreleased product concepts are uploaded as training references or generation prompts.

The Scale of the Problem

Traditional discovery methods catch less than 20% of the AI tools in use. If you're managing 60-80 tools, another 240-320 are operating in the shadows.
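The arithmetic behind those figures is easy to check. The sketch below assumes the visible share is exactly 20% (the upper bound of "less than 20%"), so the hidden counts are a floor rather than a precise estimate. The function name is illustrative.

```python
def estimate_hidden_tools(visible_tools, visible_share_percent=20):
    """Return (estimated_total, estimated_hidden) for a given visible count.

    Assumes the visible tools are exactly `visible_share_percent` of all
    AI tools in use. Integer math avoids floating-point rounding.
    """
    total = visible_tools * 100 // visible_share_percent
    return total, total - visible_tools


for visible in (60, 80):
    total, hidden = estimate_hidden_tools(visible)
    print(f"{visible} visible -> about {total} total, {hidden} in the shadows")
```

If your real visibility is lower than 20%, the hidden number grows quickly: at 10% visibility, 60 known tools would imply 540 unknown ones.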

When departments don't coordinate, capabilities get purchased multiple times. Content generation appears in Marketing (Jasper), Product (Writer), and Support (ChatGPT). The result is no knowledge sharing, duplicate training costs, and fragmented data.

What IT Must Do: The Detection Playbook

Traditional discovery won't work. You need multiple detection methods running in parallel.

Method 1: Purpose-Built AI Governance Platforms

Automated discovery is specifically designed for AI sprawl. For example, Zluri detects sanctioned and shadow AI across 37+ categories through continuous scanning.

These platforms connect to your identity providers, expense systems, or browser/endpoint agents. They use pattern matching against known AI domains and APIs, analyze user behavior to detect AI usage patterns, and integrate with cloud platforms to find developer-deployed models.

Coverage: 70-80% of AI tools automatically

When to use: Essential for organizations with 50+ AI tools or current visibility below 50%. Beyond 500 employees, automation becomes necessary.

Method 2: Identity Provider & SSO Log Analysis

Export SSO logs weekly and filter for domains containing keywords: "ai", "gpt", "chat", "copilot", "assistant", plus specific vendor names.

Cross-reference discoveries with your approved app list, then investigate unknowns by visiting the domains, checking G2 reviews, and contacting the department that connected.

Coverage: 30-40% of AI tools

Best for: Finding AI tools that followed good integration practices but weren't formally approved.
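Here is a minimal sketch of the weekly SSO log filter described above. It assumes your identity provider can export a CSV with `user` and `app_domain` columns; those column names, the sample rows, and the vendor list are hypothetical, so adjust them to your own export. One design choice: "ai" is matched only as a whole domain label (as in `otter.ai`), because as a plain substring it would also flag domains like `mailchimp.com`.

```python
import csv
import io
import re

# Longer keywords are safe to match as substrings of the domain.
SUBSTRING_KEYWORDS = ("gpt", "chat", "copilot", "assistant")
# "ai" only counts when it is a full label, e.g. the TLD in "otter.ai".
LABEL_KEYWORDS = {"ai"}
# Illustrative vendor names; extend with the vendors you care about.
VENDOR_NAMES = ("cursor", "perplexity")


def looks_like_ai(domain):
    """Heuristic keyword check on a sign-in domain."""
    d = domain.lower()
    labels = set(re.split(r"[.\-]", d))
    if labels & LABEL_KEYWORDS:
        return True
    return any(k in d for k in SUBSTRING_KEYWORDS + VENDOR_NAMES)


def find_unapproved_ai(sso_rows, approved_domains):
    """Map each unapproved AI-looking domain to the users who signed in."""
    findings = {}
    for row in sso_rows:
        domain = row["app_domain"].strip().lower()
        if domain in approved_domains or not looks_like_ai(domain):
            continue
        findings.setdefault(domain, set()).add(row["user"])
    return findings


# Hypothetical export; in practice, open the CSV file from your IdP.
SAMPLE_EXPORT = """user,app_domain
ana@example.com,chatgpt.com
ben@example.com,otter.ai
ana@example.com,mailchimp.com
cy@example.com,github.com
cy@example.com,otter.ai
"""

approved = {"chatgpt.com"}
rows = csv.DictReader(io.StringIO(SAMPLE_EXPORT))
unknown = find_unapproved_ai(rows, approved)
for domain, users in sorted(unknown.items()):
    print(domain, sorted(users))
```

The user list per domain tells you which department to contact during the follow-up investigation.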

Method 3: Expense Report & Financial Analysis

Run monthly exports from your expense management system with keyword searches: "AI", "GPT", "intelligence", "copilot", "assistant", "chatbot", plus specific vendor names.

Investigate generic software charges over $50/month since these often hide AI subscriptions coded vaguely.

Coverage: 20-30% of AI tools

Best for: Finding paid subscriptions that bypassed procurement. Also useful for quantifying hidden AI spend.
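The monthly expense review can be sketched along these lines. The field names (`description`, `category`, `monthly_amount`), the list of "generic" categories, and the sample records are assumptions for illustration, not the schema of any particular expense system. "AI" is matched as a whole word so that descriptions like "maintenance" don't trigger it.

```python
import re

# Keywords from the search list; "AI" must be a standalone word.
AI_PATTERN = re.compile(
    r"\bAI\b|GPT|intelligence|copilot|assistant|chatbot", re.IGNORECASE
)
# Hypothetical vague categories that often hide AI subscriptions.
GENERIC_CATEGORIES = {"software", "cloud services", "marketing tools"}
GENERIC_THRESHOLD = 50.0  # dollars per month


def triage_expense(expense):
    """Return why an expense deserves a look, or None if it does not."""
    if AI_PATTERN.search(expense["description"]):
        return "ai_keyword"
    is_generic = expense["category"].lower() in GENERIC_CATEGORIES
    if is_generic and expense["monthly_amount"] > GENERIC_THRESHOLD:
        return "generic_over_threshold"
    return None


expenses = [
    {"description": "ChatGPT Plus subscription", "category": "software", "monthly_amount": 20.0},
    {"description": "Annual maintenance plan", "category": "facilities", "monthly_amount": 300.0},
    {"description": "SaaS subscription", "category": "software", "monthly_amount": 79.0},
    {"description": "SaaS subscription", "category": "software", "monthly_amount": 12.0},
    {"description": "Otter.ai Business", "category": "research services", "monthly_amount": 30.0},
]

flagged = [(e, triage_expense(e)) for e in expenses if triage_expense(e)]
for expense, reason in flagged:
    print(f"{reason}: {expense['description']} (${expense['monthly_amount']:.2f}/month)")

# Spend that is clearly AI-related by keyword alone.
known_ai_spend = sum(e["monthly_amount"] for e, r in flagged if r == "ai_keyword")
print(f"Keyword-matched AI spend: ${known_ai_spend:.2f}/month")
```

Charges flagged as `generic_over_threshold` are leads, not findings: they still need a human to ask what the subscription actually is.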

Method 4: Network & Browser Monitoring

Configure network monitoring tools with AI-specific domain lists and update monthly as new tools launch. Inventory browser extensions via endpoint management platforms since many AI tools operate as Chrome or Edge extensions.

Coverage: 40-50% of active usage

Best for: Detecting active usage even when you can't see exactly what data is being shared.
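As a rough sketch of the domain-list matching, the function below checks hostnames from a DNS or proxy log against a maintained set of AI domains, counting subdomains such as `api.openai.com` under their parent. The domain set and the sample hostnames are illustrative, and the log parsing itself is left out because it depends on your monitoring tool.

```python
from collections import Counter

# Illustrative list; maintain your own and refresh it monthly.
AI_DOMAINS = {"openai.com", "claude.ai", "perplexity.ai"}


def match_ai_domain(hostname, ai_domains=AI_DOMAINS):
    """Return the listed AI domain this hostname belongs to, or None.

    Matches on whole labels, so "api.openai.com" matches "openai.com"
    but "notopenai.com" does not.
    """
    labels = hostname.lower().rstrip(".").split(".")
    for i in range(len(labels) - 1):
        candidate = ".".join(labels[i:])
        if candidate in ai_domains:
            return candidate
    return None


def summarize_ai_traffic(hostnames):
    """Count requests per listed AI domain."""
    hits = Counter()
    for hostname in hostnames:
        matched = match_ai_domain(hostname)
        if matched is not None:
            hits[matched] += 1
    return hits


observed = [
    "api.openai.com",
    "chat.openai.com",
    "claude.ai",
    "notopenai.com",
    "intranet.example.com",
]
for domain, count in summarize_ai_traffic(observed).most_common():
    print(domain, count)
```

Matching on labels rather than raw substrings keeps lookalike domains from inflating the counts.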

What IT Must Do: Prevention Strategies

Discovery is the foundation, but prevention requires strategies that reduce shadow adoption while enabling legitimate innovation.

Strategy 1: Build the Approved Catalog

Create a curated list of 15-20 vetted AI tools covering major use cases:

- General chatbot: ChatGPT Enterprise or Claude Team

- Code generation: GitHub Copilot or Cursor

- Writing assistance: Grammarly Business

- Content creation: 2-3 specialized tools

- Voice/transcription: Otter.ai Business or Zoom AI

- Customer intelligence: Approved conversational intelligence platform

Make it accessible through single sign-on and a self-service portal with one-click access requests that auto-approve for standard tools.

Define clear criteria for "low-risk" (SOC 2 certified, no PHI/PII processing, standard SaaS terms, under $100/user/year) and track request status transparently.
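Writing the "low-risk" criteria down as code keeps auto-approval decisions consistent and explainable. The sketch below encodes the four criteria listed above. The `ToolProfile` fields and the example tool are hypothetical, and in practice the values would come from your vendor assessment.

```python
from dataclasses import dataclass

MAX_LOW_RISK_COST = 100.0  # dollars per user per year


@dataclass
class ToolProfile:
    name: str
    soc2_certified: bool
    processes_phi_or_pii: bool
    standard_saas_terms: bool
    cost_per_user_per_year: float


def failed_low_risk_checks(tool):
    """Return the criteria the tool fails; an empty list means low-risk."""
    failures = []
    if not tool.soc2_certified:
        failures.append("not SOC 2 certified")
    if tool.processes_phi_or_pii:
        failures.append("processes PHI/PII")
    if not tool.standard_saas_terms:
        failures.append("non-standard SaaS terms")
    if tool.cost_per_user_per_year >= MAX_LOW_RISK_COST:
        failures.append("costs $100/user/year or more")
    return failures


# Hypothetical request for illustration.
request = ToolProfile(
    name="ExampleWriter",
    soc2_certified=True,
    processes_phi_or_pii=False,
    standard_saas_terms=True,
    cost_per_user_per_year=96.0,
)
failures = failed_low_risk_checks(request)
print("auto-approve" if not failures else f"needs review: {', '.join(failures)}")
```

Returning the list of failed criteria, rather than a bare yes/no, gives requesters a transparent reason when a tool is routed to manual review.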

Strategy 2: Risk-Based Approval Tiers

Not everything should go through the same 6-12 week approval process regardless of risk.

- Tier 1 (No approval needed): Approved catalog tools

- Tier 2 (48-hour approval): Low-risk tools with standard features

- Tier 3 (1-2 week approval): Medium-risk tools needing vendor assessment

- Tier 4 (3-4 week approval): High-risk tools processing sensitive data

Risk-based tiers prevent bottlenecks while maintaining appropriate control over highly risky tools.
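The tiers translate naturally into a small routing table. This sketch assumes each request already carries a risk level of "low", "medium", or "high" from an earlier assessment. The day counts use the upper end of each range above (48 hours as 2 days, 1-2 weeks as 14 days, 3-4 weeks as 28 days).

```python
# Tier number -> (label, maximum days to an approval decision).
APPROVAL_TIERS = {
    1: ("No approval needed", 0),
    2: ("48-hour approval", 2),
    3: ("1-2 week approval", 14),
    4: ("3-4 week approval", 28),
}

RISK_TO_TIER = {"low": 2, "medium": 3, "high": 4}


def assign_tier(in_approved_catalog, risk_level):
    """Pick the approval tier for a tool request."""
    if in_approved_catalog:
        return 1
    if risk_level not in RISK_TO_TIER:
        raise ValueError(f"unknown risk level: {risk_level!r}")
    return RISK_TO_TIER[risk_level]


for in_catalog, risk in [(True, "low"), (False, "low"), (False, "medium"), (False, "high")]:
    tier = assign_tier(in_catalog, risk)
    label, max_days = APPROVAL_TIERS[tier]
    print(f"catalog={in_catalog}, risk={risk} -> Tier {tier}: {label} (max {max_days} days)")
```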

Strategy 3: Enable Departmental Innovation Zones

Give each department $5,000 per quarter for AI tool testing in sandbox environments with synthetic or anonymized data through 90-day pilot programs.

Post-pilot decision: adopt broadly, reject, or iterate. IT provides support and security guidance throughout.

Real implementation: Engineering gets $5K/quarter for code AI tools to test Cursor, Windsurf, and Lovable in sandboxes. After 90 days, they pick Cursor for broad adoption. You've reduced potential sprawl from 12 code tools to 2 while enabling the team to choose the best option.

Strategy 4: Automated Policy-Based Controls

Implement browser-level blocking for prohibited AI tools, real-time alerts when restricted domains are accessed, and DLP rules preventing sensitive data uploads to AI endpoints.

Block dangerous tools with known data breaches or no compliance certifications. Alert on medium-risk tools to educate users without blocking. Enable low-risk tools freely to reduce friction.
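The block / alert / enable logic can be expressed as a single decision function. The inputs below are hypothetical fields you would populate from your own risk assessment. Blocking high-risk or unclassified tools by default is an assumption of this sketch, not something the rules above prescribe.

```python
from enum import Enum


class Action(Enum):
    BLOCK = "block"
    ALERT = "alert"
    ALLOW = "allow"


def decide_action(known_breach, certifications, risk_level):
    """Map a tool's risk attributes to an enforcement action."""
    # Known breaches or no compliance certifications: always block.
    if known_breach or not certifications:
        return Action.BLOCK
    if risk_level == "medium":
        return Action.ALERT  # educate the user without blocking
    if risk_level == "low":
        return Action.ALLOW  # reduce friction for safe tools
    # Assumption: high-risk or unclassified tools are blocked by default.
    return Action.BLOCK


examples = [
    ("breached tool", True, ["SOC 2"], "low"),
    ("uncertified tool", False, [], "low"),
    ("medium-risk tool", False, ["SOC 2"], "medium"),
    ("low-risk tool", False, ["SOC 2", "ISO 27001"], "low"),
]
for name, breach, certs, risk in examples:
    print(f"{name}: {decide_action(breach, certs, risk).value}")
```

The resulting action would then be handed to whatever enforces it, such as a browser policy, a secure web gateway, or a DLP rule.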

One IT leader described how they use Zluri to implement this: "Zluri shares email notifications, identifying which new tools are being used and which ones are risky, so you can prioritize risks."

Two Control Mechanisms That Work

1. The Alternative Suggestion System

When an employee requests a blocked tool, they receive an automatic notification with the specific restriction reason, followed by 2-3 approved alternatives with one-click access links and brief descriptions of how they solve similar problems.

Example: An employee tries to access DeepSeek (flagged for data privacy concerns). The system displays: "DeepSeek is restricted due to questions about data handling and storage. Try these alternatives: ChatGPT Enterprise (same conversational AI with enterprise security), Claude Pro (advanced reasoning with compliance certifications), Google Gemini Business (integrated with Workspace)."

Impact: According to organizations using this approach, 60-70% of users adopt suggested alternatives rather than pursuing the blocked tool.
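A minimal sketch of how such a notification could be assembled is shown below. The `RESTRICTED_TOOLS` mapping is a hypothetical data structure filled with the example from this section. A real system would add the one-click access links and read the entries from your policy store.

```python
MAX_ALTERNATIVES = 3

# Hypothetical policy data: restricted tool -> reason and approved alternatives.
RESTRICTED_TOOLS = {
    "DeepSeek": {
        "reason": "questions about data handling and storage",
        "alternatives": [
            ("ChatGPT Enterprise", "same conversational AI with enterprise security"),
            ("Claude Pro", "advanced reasoning with compliance certifications"),
            ("Google Gemini Business", "integrated with Workspace"),
        ],
    },
}


def build_block_notice(tool_name, restricted=RESTRICTED_TOOLS):
    """Return the message shown to the user, or None if the tool is not restricted."""
    entry = restricted.get(tool_name)
    if entry is None:
        return None
    options = ", ".join(
        f"{name} ({why})" for name, why in entry["alternatives"][:MAX_ALTERNATIVES]
    )
    return (
        f"{tool_name} is restricted due to {entry['reason']}. "
        f"Try these alternatives: {options}."
    )


print(build_block_notice("DeepSeek"))
```

Keeping the reason and the alternatives in the same record makes it hard to block a tool without also telling people what to use instead.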

2. Quarterly Tool Consolidation Reviews

Every quarter, review all AI tools discovered, usage patterns across departments, redundant capabilities, cost versus value delivered, and employee feedback.

Retire unused tools, consolidate overlapping tools, promote high-value tools, and fast-track commonly requested tools (if 3+ departments request similar capabilities, evaluate adding an approved option).

Why quarterly matters: New AI tools launch constantly and needs evolve. Quarterly reviews ensure your approved catalog stays current.
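The "3+ departments" fast-track rule is simple to automate once requests are logged. This sketch assumes each request is recorded as a (department, capability) pair. The sample data is made up for illustration.

```python
from collections import defaultdict

FAST_TRACK_THRESHOLD = 3  # distinct departments asking for the same capability


def capabilities_to_fast_track(requests, threshold=FAST_TRACK_THRESHOLD):
    """Return capabilities requested by at least `threshold` distinct departments."""
    departments_by_capability = defaultdict(set)
    for department, capability in requests:
        departments_by_capability[capability].add(department)
    return sorted(
        capability
        for capability, departments in departments_by_capability.items()
        if len(departments) >= threshold
    )


quarter_requests = [
    ("Marketing", "content generation"),
    ("Product", "content generation"),
    ("Support", "content generation"),
    ("Marketing", "content generation"),  # repeat requests count once per department
    ("Engineering", "code generation"),
    ("Design", "image generation"),
    ("Marketing", "image generation"),
]
print(capabilities_to_fast_track(quarter_requests))
```

Counting distinct departments, not raw requests, stops one enthusiastic team from triggering a catalog evaluation on its own.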

Measuring Success

At this point, it's best to keep it simple. You can track three critical metrics:

1. Reduction in shadow AI: Month-over-month decrease in unapproved tools. Target: 70%+ reduction in 6 months.

2. Request fulfillment time: Average days from tool request to approval decision. Target: 2 days for low-risk, 7 days for medium-risk.

3. High-risk tool count: Should decrease steadily as you block dangerous tools and migrate users to safer alternatives. Target: approaching zero.
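The first two metrics reduce to a couple of small calculations. The sketch below uses invented numbers and dates purely to show the arithmetic. The function names are illustrative.

```python
from datetime import date
from statistics import mean


def shadow_reduction_percent(baseline_count, current_count):
    """Percent decrease in unapproved tools relative to the baseline."""
    return (baseline_count - current_count) * 100 / baseline_count


def average_fulfillment_days(requests):
    """Average days from request to approval decision.

    `requests` is a list of (requested_on, decided_on) date pairs.
    """
    return mean((decided - requested).days for requested, decided in requests)


# Hypothetical numbers: 200 unapproved tools at baseline, 60 six months later.
reduction = shadow_reduction_percent(200, 60)
print(f"Shadow AI reduction: {reduction:.0f}% (target: 70%+)")

# Hypothetical low-risk requests and their decision dates.
low_risk_requests = [
    (date(2025, 3, 3), date(2025, 3, 4)),
    (date(2025, 3, 10), date(2025, 3, 13)),
    (date(2025, 3, 17), date(2025, 3, 19)),
]
avg_days = average_fulfillment_days(low_risk_requests)
print(f"Average low-risk fulfillment: {avg_days} days (target: 2)")
```

Tracking fulfillment time separately for each risk level matters, because a fast low-risk average can otherwise hide a slow medium-risk queue.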

Sprawl Is Inevitable, Chaos Is Optional

AI sprawl isn't going away because the forces driving it are too strong: competitive pressure, legitimate productivity gains, ease of adoption, and specialization by use case.

As one Senior Cloud Infrastructure & Security Engineer explained: "We have to balance between enabling AI products today and managing security. If we don't use AI, our competitors will use it and increase their productivity."

The question isn't whether to stop AI sprawl. It's how to manage it.

What doesn't work?

- Blocking everything (employees find workarounds faster)

- Ignoring the problem (the 80% you can't see compounds daily)

- Traditional shadow IT approaches (too slow for AI's adoption speed)

What works?

- Multi-method detection catching 90%+ of tools

- Risk-based controls that block dangerous tools while enabling safe tools

- Fast approval for legitimate needs (days, not months)

- Approved alternatives that genuinely solve problems

- Continuous monitoring and quarterly optimization

The mindset shift: Stop thinking of AI sprawl as behavior to eliminate. Start thinking of it as adoption energy to redirect.

The next step: Start with detection using the four-method playbook to discover your true AI landscape. Based on the report's findings, IT typically sees fewer than 20% of tools.

Find the other 80%. Understand which departments drive adoption and why, then build detection and controls that match those patterns.

You can't control AI sprawl until you can see it.

For organizations managing sprawl across 100+ AI applications, Zluri's AI Governance platform provides automated discovery across 56+ categories, department-specific usage insights, and policy-based controls.
