The Compliance-Optimization Gap

You've been running quarterly access reviews for two years. Your auditors are satisfied. Every review gets completed on schedule, remediation happens, evidence gets filed.

But here's what the audit reports don't show:

Your IT team spent 149 person-days on your last review cycle. That's more than two full-time employees doing nothing but access reviews for an entire quarter.

Reviewers spent seven days clicking through spreadsheets with 25,000 data points (500 employees × 50 apps). Remediation dragged on for 18 days after reviews closed. You discovered 600 access violations, the same types you found last quarter and the quarter before that.

The auditor sees: "Access reviews completed quarterly ✓"

Your CFO sees: "Why does this cost so much if we're still finding the same violations every quarter?"

Here's the reality: most organizations do access reviews. Few do them well. There's a significant gap between passing an audit and actually optimizing the process. Compliant doesn't equal optimal. Detected doesn't equal prevented. Reviewed doesn't equal remediated. Completed doesn't equal effective.

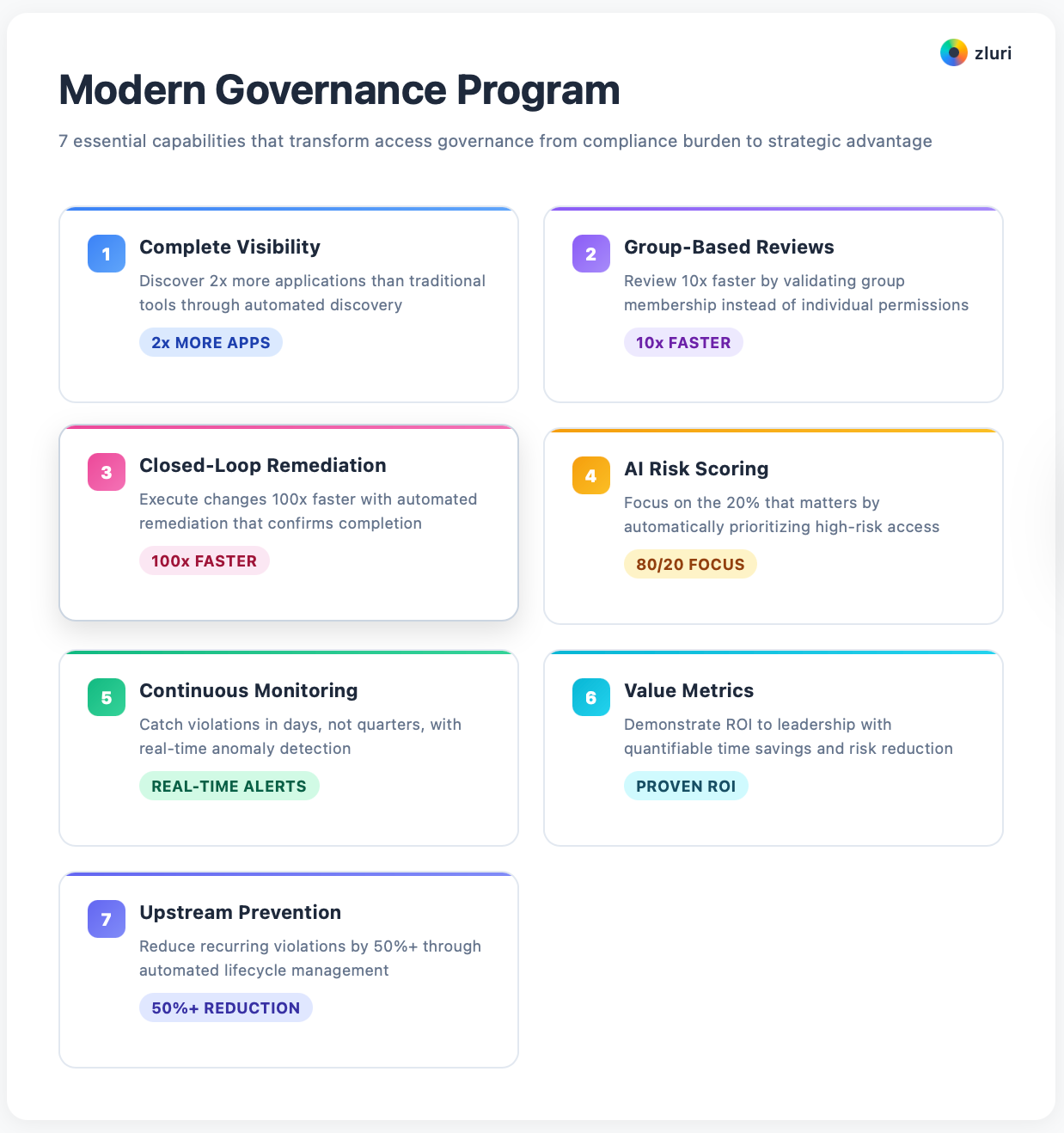

We'll cover seven best practices that transform access reviews from a compliance requirement into a strategic security capability that reduces risk, saves money, and requires minimal IT resources.

The 7 User Access Review Best Practices That Compound

These aren't incremental improvements—they're multiplicative. Each practice alone delivers value. Combined, they transform your entire access governance program.

Best Practice 1: Start with Complete Visibility

You can't secure what you can't see. This sounds obvious, but you're probably reviewing only 40-60% of your actual application landscape.

You pull user access data from your identity provider (IDP): Okta, Azure AD, or Google Workspace. Apps integrated with SSO are visible. Apps not integrated with SSO are invisible, and because of the SSO tax (vendors reserving SSO for their most expensive plans), that means the majority of your SaaS apps.

Your IDP shows the 50 applications your IT team formally approved, integrated with SSO, and manages centrally.

But your IDP doesn't show the marketing team's HubSpot instance they purchased last month, the developer tools purchased with personal credit cards, the free trials that converted to paid without IT knowing, the 100+ apps buried inside Salesforce (the Salesforce integration library lists 5,000+ apps), and the collaboration tools accessed with personal email addresses instead of SSO.

We've seen this pattern across hundreds of mid-market companies (500-5000 employees): you discover 2.3x more applications when using comprehensive discovery versus IDP/SSO-only visibility. If you only review IDP-visible apps, you're reviewing 40% of your risk while 60% remains unmanaged.

Traditional IGA platforms use one discovery method—direct API integrations. You govern users in the known applications. If it is unknown, it's invisible. The best platforms use multiple simultaneous discovery methods. For example, Zluri uses these 9 methods:

- SSO/IDP integration (Okta, Entra ID, Google Workspace)

- Finance system integration (NetSuite, QuickBooks—catches personal card purchases)

- Direct API connectors (300+ pre-built integrations)

- Desktop agents (apps installed on employee laptops)

- Browser extensions (web-based SaaS usage tracking)

- MDM integration (mobile device management for mobile apps)

- CASB integration (cloud access security broker traffic monitoring)

- HRMS integration (employee data and org structure)

- Directory integration (Google and Azure Active Directory)

The best access review platforms don't depend on unreliable methods like employee self-reporting (employees declare tools they use).

No single method provides complete visibility. SSO only sees SSO-enabled apps. Finance only sees apps with active spend. Browser extensions only see web apps. But nine methods running simultaneously? That's 99%+ coverage.

Every undiscovered application represents unmanaged access—former employees still logged in, contractors with access months after their contracts ended, dormant accounts creating security gaps. The apps you don't know about are the ones that become breach entry points.

Start by comparing your IDP app count against your finance system's software spend categories. The difference is your shadow IT gap.
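The comparison above amounts to a set difference. Here is a minimal sketch, assuming you can export application names from both systems as plain lists. The sample names and the `normalize` helper are hypothetical, and real vendor names in spend data usually need fuzzier matching than this.

```python
def normalize(name: str) -> str:
    """Lowercase and drop spaces/punctuation so 'Sales-Force' matches 'Salesforce'."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def shadow_it_gap(idp_apps: list[str], finance_vendors: list[str]) -> list[str]:
    """Return vendors that appear in spend data but not in the IDP app list."""
    known = {normalize(app) for app in idp_apps}
    gap = {vendor for vendor in finance_vendors if normalize(vendor) not in known}
    return sorted(gap)


# Hypothetical exports from the IDP and the finance system
idp_apps = ["Salesforce", "Slack", "GitHub"]
finance_vendors = ["salesforce", "Slack", "HubSpot", "Figma"]

print(shadow_it_gap(idp_apps, finance_vendors))  # ['Figma', 'HubSpot']
```

Anything the function returns is a candidate for follow-up, not proof of shadow IT: some spend lines are renewals of apps the IDP lists under a different name.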

Partner with Security to prioritize which discovery methods to implement first based on your biggest blind spots—usually it's finance integration and browser-based discovery that reveal the most hidden applications.

Best Practice 2: Review Groups, Not Individual Users

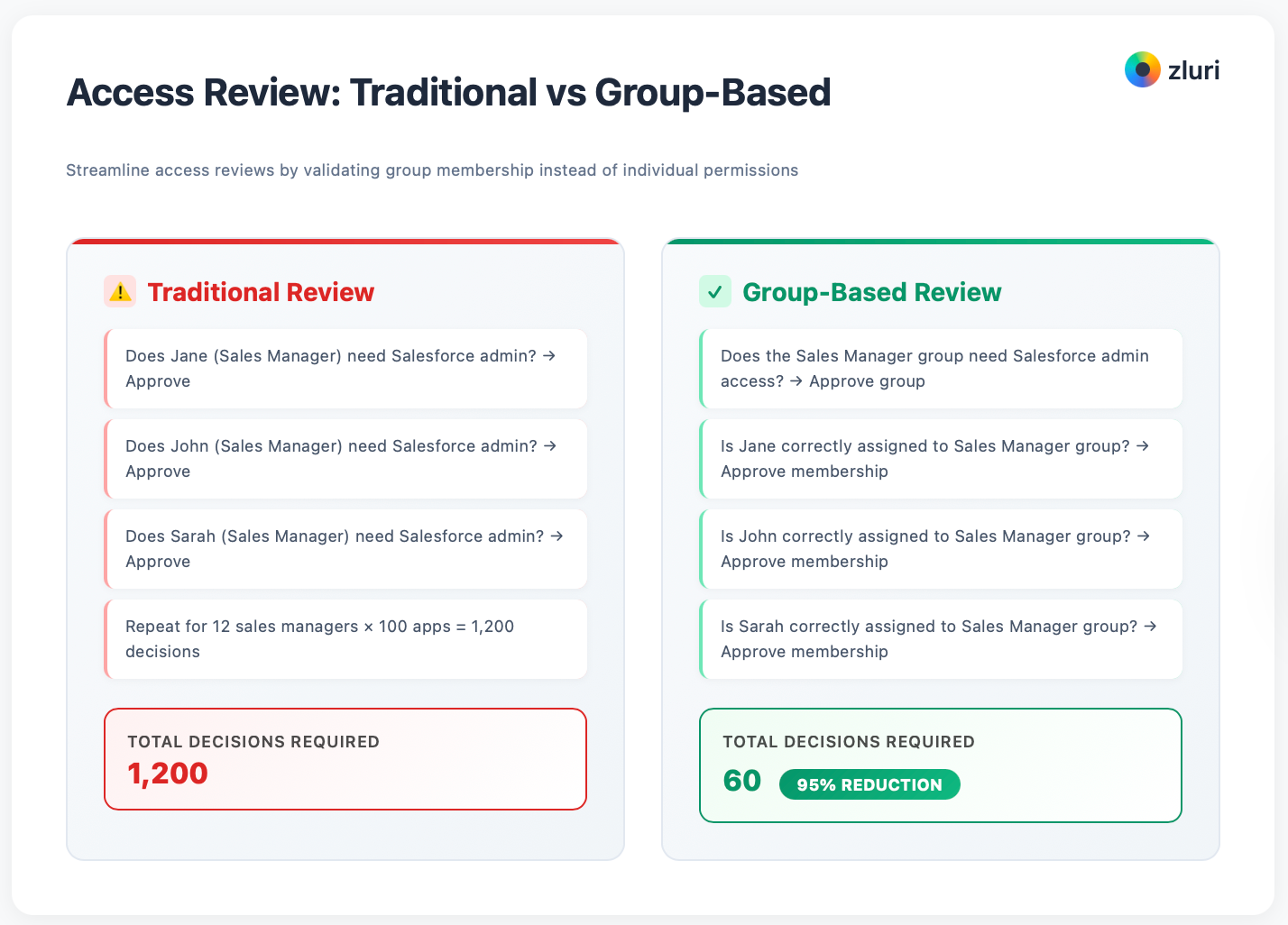

The traditional approach is reviewing user-by-user: "Does Jane need Salesforce? Does John need Slack? Does Sarah need GitHub?" This doesn't scale.

For your organization with 1000 employees and 100 applications, that's 100,000 potential user-app combinations to review. Even if 90% have no access, you're still reviewing 10,000 active access relationships every quarter.

Instead of reviewing "Does Jane need Salesforce admin access?" review "Does the Sales Manager role need Salesforce admin access?" Once you validate the group, everyone in that group is automatically validated. This is how you review 10x faster without sacrificing accuracy.

You already use groups for access provisioning—Active Directory groups, Okta groups, Google Workspace groups, Salesforce permission sets. Users get added to groups, groups get assigned to applications. But then you review users individually, ignoring the group structure you already built.

Group-Based Access Reviews flip this: review the group assignments once, validate all members automatically. Instead of reviewing 10,000 individual user-access pairs, you review 200 group assignments and 500 group memberships.

Traditional review:

- Does Jane (Sales Manager) need Salesforce admin? → Approve

- Does John (Sales Manager) need Salesforce admin? → Approve

- Does Sarah (Sales Manager) need Salesforce admin? → Approve

- Repeat for 12 sales managers × 100 apps = 1,200 decisions

Group-based review:

- Does the Sales Manager group need Salesforce admin access? → Approve group

- Is Jane correctly assigned to Sales Manager group? → Approve membership

- Is John correctly assigned to Sales Manager group? → Approve membership

- Is Sarah correctly assigned to Sales Manager group? → Approve membership

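- Result: 1 group validation + 12 membership validations = 13 decisions for this app. Across all 100 apps, that's 100 group validations + 12 membership validations = 112 decisions instead of 1,200 (about a 91% reduction)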
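The decision counts in this comparison can be reproduced with a few lines of arithmetic. This is a rough sketch that assumes every application assigned to the group needs its own group-level validation, while each membership is validated only once; the function names are illustrative.

```python
def user_based_decisions(users: int, apps: int) -> int:
    """One decision per user per application."""
    return users * apps


def group_based_decisions(users: int, apps: int) -> int:
    """One group-to-app validation per application, plus one membership check per user."""
    return apps + users


managers, apps = 12, 100
before = user_based_decisions(managers, apps)            # 1,200 decisions
after_one_app = group_based_decisions(managers, 1)       # the Salesforce-only case
after_all_apps = group_based_decisions(managers, apps)   # every app the group touches
reduction = 1 - after_all_apps / before

print(before, after_one_app, after_all_apps, f"{reduction:.0%}")
# prints: 1200 13 112 91%
```

The saving grows with group size: user-based work scales with users × apps, while group-based work scales with users + apps.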

Group-based reviews deliver 10-15x efficiency gains for organizations with 500+ employees. The larger your company, the greater the benefit. At 1,000 employees, you're reviewing hundreds of groups instead of roughly 10,000 user-access pairs.

You don't need to migrate to group-based access overnight. Start by mapping your existing groups to applications. You probably already have 60-70% of access managed through groups (AD groups, SSO groups, app-level roles). Review that group-managed access first.

For the remaining direct-assigned access, continue user-based reviews until you can migrate them to groups. Partner with Security to ensure your group structure follows least-privilege principles—groups should represent job functions, not be catch-all "everyone" groups.

Best Practice 3: Implement Closed-Loop Remediation

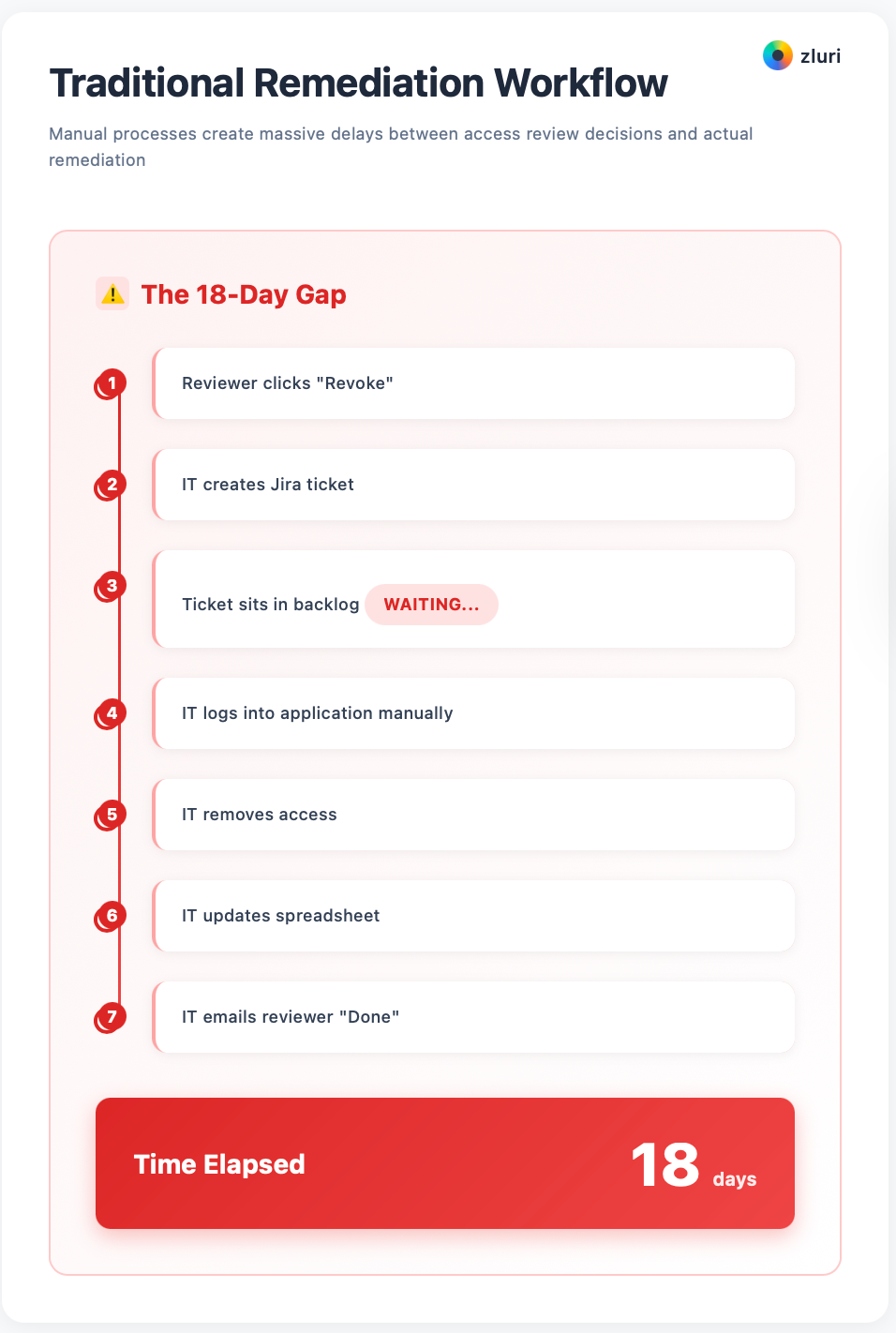

Access reviews are pointless if violations don't get fixed. Yet your manual remediation process creates a massive gap—decisions get made, but execution drags on for weeks or doesn't happen at all.

Your traditional workflow: Reviewer clicks "Revoke" → IT creates Jira ticket → Ticket sits in backlog → IT logs into application manually → IT removes access → IT updates spreadsheet → IT emails reviewer "Done" → 18 days elapsed.

At each step, things fall through the cracks. Tickets get buried. Manual work gets delayed. Spreadsheets fall out of sync. Reviewers never get confirmation. Violations persist for weeks after reviews close.

Organizations with manual remediation processes take weeks from review decision to executed change. Your critical access revocations—terminated employees, compromised accounts—can sit in the queue even longer.

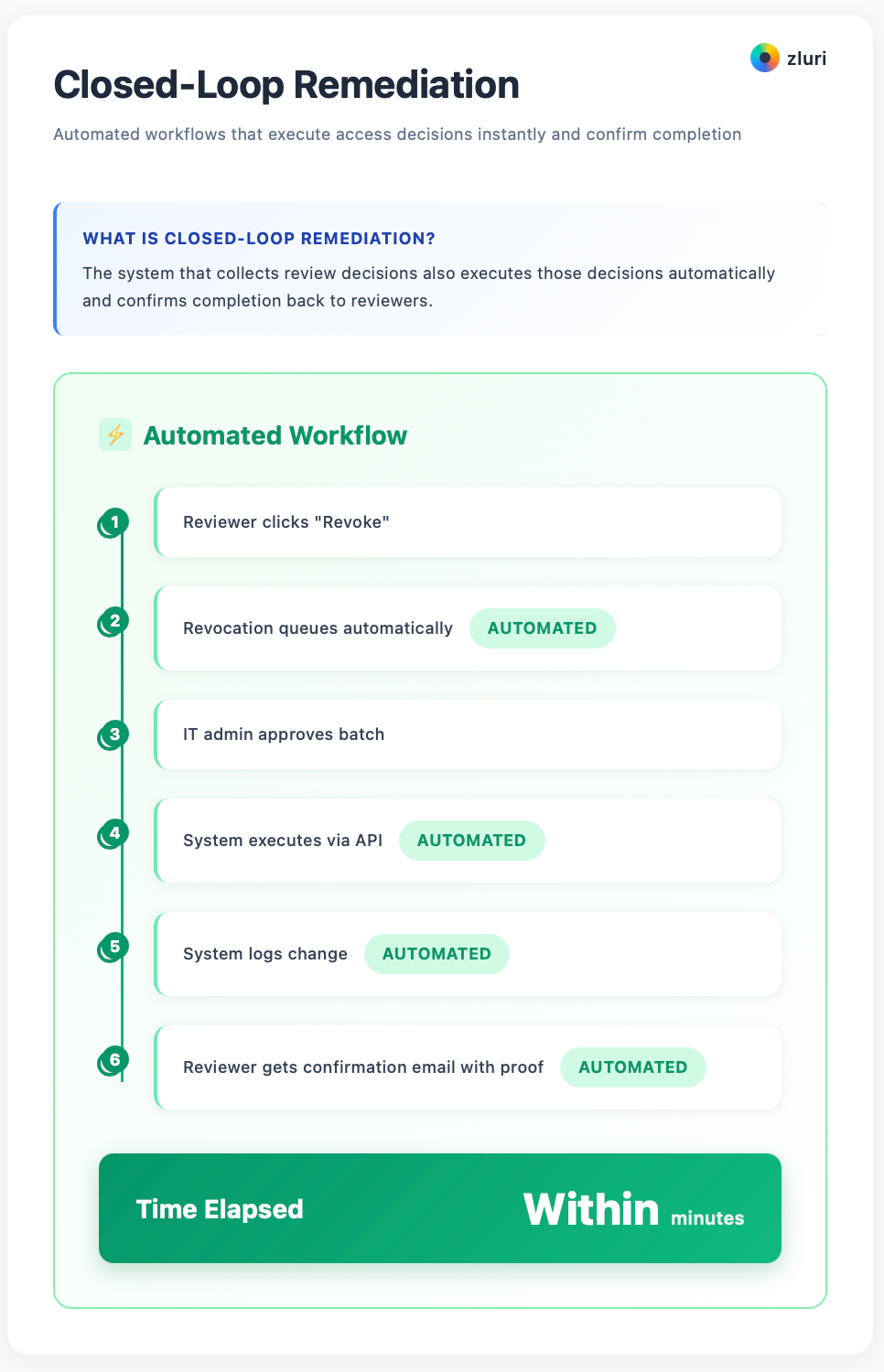

Closed-loop remediation means the system that collects review decisions also executes those decisions automatically and confirms completion back to reviewers.

Here's the workflow: Reviewer clicks "Revoke" → Revocation queues automatically → IT admin approves batch → System executes via API → System logs change → Reviewer gets confirmation email with proof—all within minutes, not weeks.

The "closed loop" means:

- Decision capture (review system records the decision)

- Automated execution (system makes the change via API)

- Validation (system confirms change was applied)

- Notification (reviewer gets proof it's done)
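The four stages above can be sketched in code. This is an illustration under stated assumptions, not any vendor's implementation: the `Connector` interface, `InMemoryApp`, and the function names are all hypothetical, and the batch-approval step is left out for brevity.

```python
from dataclasses import dataclass
from typing import Protocol


class Connector(Protocol):
    """Hypothetical per-application adapter; real applications expose their own APIs."""
    def revoke(self, user: str) -> None: ...
    def has_access(self, user: str) -> bool: ...


@dataclass
class InMemoryApp:
    """Stand-in for a real application, used only to make the sketch runnable."""
    users: set[str]

    def revoke(self, user: str) -> None:
        self.users.discard(user)

    def has_access(self, user: str) -> bool:
        return user in self.users


@dataclass
class RemediationResult:
    user: str
    app: str
    verified: bool


def remediate(decisions: list[tuple[str, str]],
              connectors: dict[str, Connector]) -> list[RemediationResult]:
    """Execute each (user, app) revoke decision, then confirm it took effect."""
    results = []
    for user, app in decisions:                     # 1. decision capture
        connector = connectors[app]
        connector.revoke(user)                      # 2. automated execution
        verified = not connector.has_access(user)   # 3. validation
        results.append(RemediationResult(user, app, verified))
    return results


def notify(results: list[RemediationResult]) -> list[str]:
    """4. notification: formatted lines here; a real system would message the reviewer."""
    return [f"{r.user} @ {r.app}: {'revoked' if r.verified else 'FAILED'}" for r in results]


apps = {"crm": InMemoryApp({"jane", "john"})}
results = remediate([("jane", "crm")], apps)
print(notify(results))  # ['jane @ crm: revoked']
```

The validation step is what makes the loop "closed": the result records what the application reports after the change, not just that a revoke call was sent.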

No Jira tickets. No manual logins. No spreadsheet tracking. No "we'll get to it eventually."

Your manual remediation:

- 284 revocation decisions

- 3 IT staff members assigned

- 67 hours of manual work

- 18-day average completion time

- 14 items (5%) never executed—lost in the backlog

- No automatic confirmation to reviewers

Closed-loop remediation:

- 284 revocation decisions

- 1 IT admin for batch approval

- 15 minutes of work

- Same-day completion

- 100% execution rate

- Automatic confirmation with audit trail

Time savings: 99.6%. Accuracy improvement: From 95% to 100%. Auditor satisfaction: Significantly higher with complete, automatic evidence.

Closed-loop remediation requires API access to your applications. Not every app has an API, but most modern SaaS tools do.

Start with your top 20 critical applications and automate remediation there—that's usually 80% of your access changes.

For the remaining apps without APIs, continue manual remediation but track it within the same platform.

Partner with Security and Compliance to justify investing in platforms that offer closed-loop capabilities—the ROI is usually realized within the first review cycle.

Best Practice 4: Use AI to Surface High-Risk Access

You're reviewing 25,000 access data points manually. Humans can't meaningfully evaluate that volume. You need AI to pre-score risk and surface what actually needs human attention.

When your reviewers face 500 rows in a spreadsheet with no context, they default to "approve all" because evaluating each one is impossible. Manual review processes have 92-97% approval rates, which means most reviews aren't meaningful evaluations—they're rubber stamps.

The issue isn't laziness. It's cognitive overload. A manager drowning in 500 user-access pairs can't meaningfully assess risk on each one. They click "approve" on everything and move on.

Smart platforms use AI to analyze access patterns and flag high-risk items that need human review.

They look at signals like inactive access (no login in 30/90+ days), excessive permissions (admin access without justification), anomalous access (user in Finance with engineering tool access), orphaned accounts (former employees still active), recent grants (access given yesterday, could be legitimate), and role mismatches (access doesn't align with job function).

Instead of reviewing 25,000 equal-priority data points, your reviewers see: 300 high-risk items flagged for review, 2,400 medium-risk items with recommendations, 22,300 low-risk items bulk-approved based on policy.

Human time gets focused on the roughly 11% that actually matters (2,700 of 25,000 items). The other 89% gets handled by policy-based automation.

Example:

Without AI:

- You send spreadsheet with 8,400 rows to Sales VP

- Sales VP sees: 8,400 undifferentiated access items

- Sales VP response: "I don't have time for this" → Approves all

- Result: 23 violations approved because nothing was flagged

With AI:

- Platform flags 47 high-risk items for Sales VP review

- Sales VP sees: "3 users haven't logged in for 30+ days—review needed"

- Sales VP response: Revokes the 3 inactive accounts, approves legitimate ones

- Platform auto-approves the remaining 8,353 low-risk items based on policy

- Result: Violations caught, VP spent 5 minutes instead of ignoring it entirely

This isn't just "flagging inactive users." Advanced systems combine multiple signals. A user who hasn't logged in for 60 days might be acceptable (on sabbatical). But a user with no logins, admin access, and whose manager left the company? That's high risk. The AI combines context that humans can't process at scale.
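To make "combining signals" concrete, here is a simple rule-based stand-in. It is a weighted checklist, not a trained model, and every weight, threshold, and field name is a hypothetical placeholder you would replace with your own policy.

```python
from dataclasses import dataclass


@dataclass
class AccessItem:
    days_since_login: int
    is_admin: bool
    manager_active: bool
    role_matches_app: bool


# Hypothetical weights and thresholds; tune these to your own risk policy.
DORMANT_DAYS = 60
WEIGHTS = {"dormant": 2, "admin": 2, "no_manager": 2, "role_mismatch": 1}


def risk_score(item: AccessItem) -> int:
    """Sum the weights of every signal that fires for this access item."""
    score = 0
    if item.days_since_login >= DORMANT_DAYS:
        score += WEIGHTS["dormant"]
    if item.is_admin:
        score += WEIGHTS["admin"]
    if not item.manager_active:
        score += WEIGHTS["no_manager"]
    if not item.role_matches_app:
        score += WEIGHTS["role_mismatch"]
    return score


def risk_tier(item: AccessItem) -> str:
    """Bucket the score into the tiers a reviewer would see."""
    score = risk_score(item)
    if score >= 5:
        return "high"
    if score >= 2:
        return "medium"
    return "low"


on_sabbatical = AccessItem(days_since_login=60, is_admin=False,
                           manager_active=True, role_matches_app=True)
dormant_admin = AccessItem(days_since_login=90, is_admin=True,
                           manager_active=False, role_matches_app=True)

print(risk_tier(on_sabbatical), risk_tier(dormant_admin))  # medium high
```

A single signal lands in the medium tier with a recommendation, while the same dormancy combined with admin rights and a departed manager is escalated to high.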

AI risk scoring is only as good as the policies you define. Work with Security to establish clear risk criteria—what constitutes "dormant" in your environment (30 days? 60 days? 90 days?), what permission levels require justification.

The AI applies these rules consistently across thousands of access items, but you need to define the rules first. Don't just accept default settings—customize the risk model to your organization's actual risk profile.

Best Practice 5: Make Reviews Continuous, Not Just Quarterly

Your quarterly reviews find violations that happened over the past 90 days. By the time you discover an orphaned account, it's been active for 60+ days. Continuous monitoring catches violations within days or hours.

Compliance frameworks require periodic reviews—quarterly, semi-annually, annually. But "periodic" doesn't mean you should only check access every 90 days.

What happens in between your quarterly reviews? Employees get terminated but access isn't immediately revoked. Contractors finish projects but credentials stay active. Users accumulate permissions they don't need.

These violations sit undetected for weeks or months before the next scheduled review catches them.

Continuous access monitoring doesn't replace your quarterly formal reviews; it complements them. Formal reviews satisfy compliance requirements. Continuous monitoring catches violations in real time.

How it works: The platform continuously monitors access across all applications using the same discovery methods, watches for risk signals (inactive accounts, permission changes, new high-risk access grants), automatically flags violations as they occur, and can even auto-remediate based on policy (terminate employee → auto-revoke all access immediately).

High-risk events:

- Employee terminations (auto-revoke all access same day)

- Contractors reaching end date (auto-expire access)

- Users going inactive (flag after 60 days dormant)

- Admin access grants (alert Security immediately)

- Bulk data exports (flag unusual activity)

Policy violations:

- Access granted without approval

- Permissions elevated without justification

- Segregation of duties conflicts (user gains conflicting access)

- Sensitive data access by unauthorized users
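Of the policy checks above, segregation of duties is the easiest to express in code. This sketch assumes entitlements are available as a set of strings per user; the conflict pairs and entitlement names are hypothetical and would come from your own policy.

```python
# Hypothetical conflicting entitlement pairs; define the real list with Security.
SOD_CONFLICTS = [
    frozenset({"create_vendor", "approve_payment"}),
    frozenset({"submit_expense", "approve_expense"}),
]


def sod_violations(entitlements: dict[str, set[str]]) -> dict[str, list[frozenset[str]]]:
    """Map each user to the conflicting pairs they hold in full."""
    found = {}
    for user, held in entitlements.items():
        # A pair is violated only when the user holds both entitlements in it.
        hits = [pair for pair in SOD_CONFLICTS if pair <= held]
        if hits:
            found[user] = hits
    return found


current_access = {
    "jane": {"create_vendor", "approve_payment", "view_reports"},
    "john": {"create_vendor"},
}
print(sorted(sod_violations(current_access)))  # ['jane']
```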

Drift detection:

- Group membership changes

- Direct access grants bypassing groups

- Permission creep (gradual accumulation of access)

- Role changes not reflected in access
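Drift detection boils down to comparing two snapshots of who has what. This is a minimal sketch, assuming each snapshot maps a user to a set of entitlement strings; the snapshot format and names are illustrative only.

```python
def detect_drift(previous: dict[str, set[str]],
                 current: dict[str, set[str]]) -> dict[str, dict[str, set[str]]]:
    """Compare two access snapshots and report what each user gained or lost."""
    drift = {}
    for user in previous.keys() | current.keys():
        before = previous.get(user, set())
        after = current.get(user, set())
        added, removed = after - before, before - after
        if added or removed:
            drift[user] = {"added": added, "removed": removed}
    return drift


# Hypothetical snapshots taken a day apart
yesterday = {"jane": {"slack"}, "john": {"slack", "github"}}
today = {"jane": {"slack", "github:admin"}, "john": {"slack", "github"}}

print(detect_drift(yesterday, today))
# {'jane': {'added': {'github:admin'}, 'removed': set()}}
```

Each "added" entry can then be checked against approved requests or group assignments to tell a sanctioned change from a direct grant that bypassed the process.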

Think of quarterly reviews as comprehensive health checkups—thorough, formal, generates evidence for compliance. Continuous monitoring is like wearing a fitness tracker—catches issues in real-time, prevents small problems from becoming larger ones.

You still need the quarterly checkup for compliance. But continuous monitoring significantly reduces what the quarterly review finds, because violations get caught and fixed immediately instead of piling up for 90 days.

Start with continuous monitoring for your highest-risk scenarios—terminated employee access and dormant admin accounts. These are the violations that create the most risk if left unaddressed.

As you build confidence in the system, expand to other policy violations. Partner with Security to define alert thresholds—you don't want alert fatigue from too many low-risk notifications, but you do want immediate visibility into critical access changes.

Best Practice 6: Measure What Actually Matters

You track review completion rates. That's necessary but insufficient. You need to track metrics that prove business value, not just compliance activity.

Compliance metrics (everyone tracks these):

- Review completion rate: 94%

- On-time completion: 87%

- Number of reviews conducted: 4 per year

- Number of users reviewed: 1,247

These numbers satisfy auditors. They don't tell you if reviews are effective or worth the investment.

Value metrics (you should track these):

Efficiency metrics:

- IT person-hours per review cycle (target: <20 hours)

- Time from launch to completion (target: <7 days)

- Remediation execution time (target: <72 hours)

Security metrics:

- Orphaned accounts discovered and removed (ex-employees with lingering access)

- Dormant accounts identified (no login 90+ days)

- Over-provisioned access downgraded (admin → standard user)

- Segregation of duties violations resolved

- Access violations per 100 users (trending down quarter-over-quarter)

Business metrics:

- Licenses reclaimed from unused access ($X saved)

- Applications rationalized due to discovery (consolidated duplicate tools)

- Audit findings reduction (fewer access-related findings each cycle)

- Mean time to remediation improvement (how fast violations get fixed)

Show your leadership the business impact, not just compliance status.

Cost:

- Platform cost: $50,000/year

- IT labor: 55 person-days per cycle × 4 cycles × $150/day = $33,000/year

- Total cost: $83,000/year

Value:

- Licenses reclaimed: 127 unused licenses × $40/month × 12 months = $61,000/year

- IT efficiency gain: 94 person-days saved per cycle × 4 cycles × $150/day = $56,400/year

- Audit cost reduction: 40 hours less audit prep × $200/hour = $8,000/year

- Risk reduction: Prevented 1 breach scenario = $250,000+ (conservative)

- Total value: $375,400/year

ROI: 352% (4.5x return)
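The figures above can be checked with a short calculation. The variable names are illustrative; the inputs are the numbers from the cost and value lists, with license savings rounded to the nearest thousand as in the text.

```python
# Costs
platform_cost = 50_000
labor_cost = 55 * 4 * 150                 # person-days per cycle x cycles x day rate
total_cost = platform_cost + labor_cost

# Value
licenses = round(127 * 40 * 12, -3)       # 60,960 rounded to 61,000
efficiency = 94 * 4 * 150                 # person-days saved per cycle x cycles x day rate
audit = 40 * 200                          # audit prep hours saved x hourly rate
risk = 250_000                            # one prevented breach scenario
total_value = licenses + efficiency + audit + risk

roi = (total_value - total_cost) / total_cost   # net gain relative to cost
multiple = total_value / total_cost             # gross value relative to cost

print(total_cost, total_value, f"{roi:.0%}", f"{multiple:.1f}x")
# prints: 83000 375400 352% 4.5x
```

Note that the risk-reduction line accounts for about two thirds of the total value, so it is worth showing leadership the ROI both with and without it.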

This tells your CFO whether access reviews are worth doing well, not just doing.

Build an executive dashboard with:

- Time invested (person-hours this quarter vs last quarter)

- Money saved (license reclamation, tool consolidation)

- Risk reduced (violations found and fixed, trending over time)

- Compliance status (audit-ready evidence, no findings)

- Efficiency trends (is the process getting faster or slower?)

Update it quarterly. Share it with leadership. Demonstrate that optimized access reviews are strategic, not just compliance requirements.

Don't wait for leadership to ask for ROI—proactively demonstrate value.

Track baseline metrics from your first manual review, then show improvement as you implement best practices.

Partner with Finance to translate security improvements into business language—"reduced audit findings" becomes "avoided $X in potential fines," "reclaimed licenses" becomes "recovered $X in unnecessary spending."

Make access reviews visible as a value driver, not a cost center.

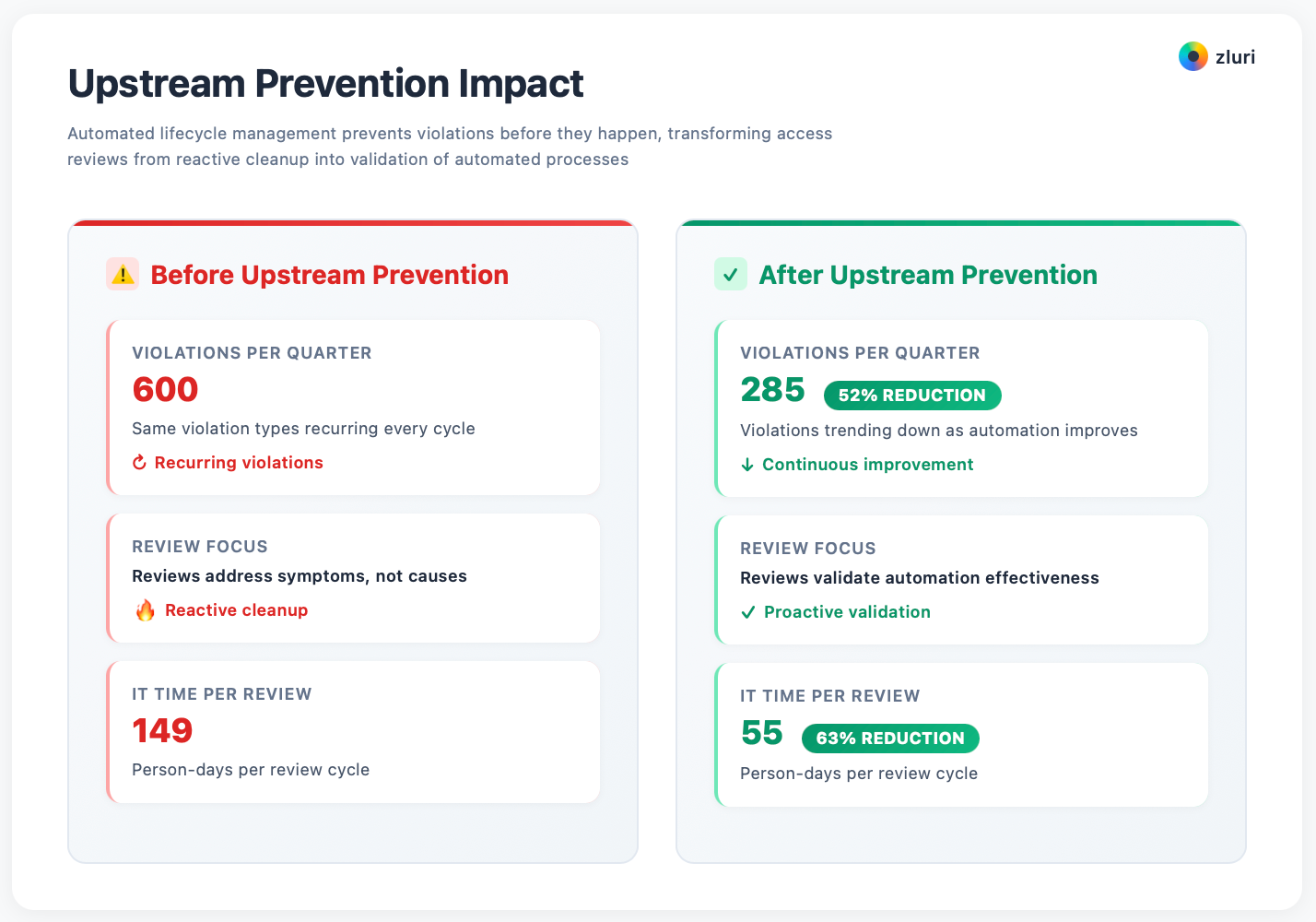

Best Practice 7: Close the Loop to Upstream Processes

If you find the same violations every quarter, you're not fixing root causes—you're just cleaning up the same mess repeatedly. You need to prevent violations from happening, not just detect them after the fact.

Your Quarter 1 review: 47 orphaned accounts (ex-employees with lingering access)

Your Quarter 2 review: 52 orphaned accounts

Your Quarter 3 review: 49 orphaned accounts

Your Quarter 4 review: 51 orphaned accounts

Your access reviews keep finding orphaned accounts because your offboarding isn't automated. You're detecting the problem quarterly, but you're not preventing it from recurring. This satisfies the audit requirement without actually reducing risk.

Close the loop between your access reviews and the processes that create violations. Access reviews reveal patterns. Fix the patterns, not just the instances.

Pattern 1: Orphaned accounts (ex-employees with access)

- Root cause: Your offboarding isn't automated

- Upstream fix: Integrate HR system with access platform → Employee marked "terminated" in Workday → All access auto-revoked within 1 hour

- Result: Orphaned accounts drop from 50 per quarter to 2-3 (manual edge cases)

Pattern 2: Dormant access (users not using granted tools)

- Root cause: Your onboarding gives everyone "standard access bundle" regardless of need

- Upstream fix: Move from role-based provisioning to request-based provisioning → New hires get minimal access → They request tools as needed → Reduces over-provisioning by 60%

- Result: Dormant accounts drop from 200 per quarter to 40

Pattern 3: Permission creep (users accumulating access over time)

- Root cause: Access gets added but never removed when roles change

- Upstream fix: Automate role-change workflows → Employee changes roles → Old role's access auto-removed → New role's access auto-provisioned

- Result: Permission creep violations drop from 120 per quarter to 15

Pattern 4: Contractor access overstays (vendors with access after contract ends)

- Root cause: No expiration dates on contractor accounts

- Upstream fix: Time-bound all contractor access → Tie to contract end date → Auto-expire and notify before expiration → Contractor can request extension if needed

- Result: Contractor overstay violations drop from 35 per quarter to 1-2
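The contractor fix in Pattern 4 is a date comparison at its core. Here is a minimal sketch, assuming a daily job that evaluates each contractor account; the seven-day notice window and the action names are hypothetical.

```python
from datetime import date, timedelta

NOTICE_DAYS = 7  # hypothetical notice window before access expires


def contractor_action(contract_end: date, today: date) -> str:
    """Decide what to do with a contractor's access on a given day."""
    if today > contract_end:
        return "revoke"      # contract is over: expire access
    if contract_end - today <= timedelta(days=NOTICE_DAYS):
        return "notify"      # close to expiry: warn so an extension can be requested
    return "keep"


end = date(2025, 6, 30)
print(contractor_action(end, date(2025, 5, 1)),    # keep
      contractor_action(end, date(2025, 6, 25)),   # notify
      contractor_action(end, date(2025, 7, 1)))    # revoke
```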

The best access governance platforms don't just do reviews—they also automate the identity lifecycle that prevents violations.

The same platform (for example, Zluri) handles:

- Onboarding: Provision access based on least-privilege templates

- Changes: Auto-adjust access when employees change roles

- Offboarding: Auto-revoke all access immediately upon termination

- Reviews: Validate that automated processes are working correctly

Your access reviews become validation of upstream automation, not reactive cleanup of accumulated violations.

Before upstream prevention:

- 600 violations per quarter

- Same violation types recurring every cycle

- Reviews address symptoms, not causes

- IT time: 149 person-days per review

After upstream prevention:

- 285 violations per quarter (52% reduction)

- Violations trending down as automation improves

- Reviews validate automation effectiveness

- IT time: 55 person-days per review (63% reduction)

The goal isn't perfect reviews—it's needing fewer reviews because your upstream processes prevent violations.

Review your last three quarters of access review findings and categorize violations by root cause. Which patterns repeat? Those are your upstream automation opportunities.
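If your findings are already tagged with a root cause, spotting the repeat offenders takes only a few lines. This sketch assumes each quarter's findings are a list of root-cause labels; the labels and the function name are hypothetical.

```python
from collections import Counter


def recurring_root_causes(quarters: list[list[str]]) -> list[tuple[str, int]]:
    """Return root causes that appear in every quarter, with total counts, largest first."""
    if not quarters:
        return []
    per_quarter = [Counter(findings) for findings in quarters]

    # Keep only the causes present in every single quarter.
    recurring = set(per_quarter[0])
    for counts in per_quarter[1:]:
        recurring &= set(counts)

    totals = Counter()
    for counts in per_quarter:
        totals.update(counts)
    return sorted(((cause, totals[cause]) for cause in recurring),
                  key=lambda pair: (-pair[1], pair[0]))


# Hypothetical findings from three review cycles
findings = [
    ["manual_offboarding", "manual_offboarding", "role_change"],
    ["manual_offboarding", "no_contract_expiry"],
    ["manual_offboarding", "role_change"],
]
print(recurring_root_causes(findings))  # [('manual_offboarding', 4)]
```

Causes that show up in every cycle are the strongest candidates for upstream automation; one-off causes may not justify the engineering effort.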

Partner with HR to automate offboarding, with Security to implement least-privilege provisioning, with managers to automate role-change workflows.

Don't think of access reviews as a standalone compliance process—think of them as the validation layer for your entire identity lifecycle automation program.

Bringing It All Together: The Compounding Effect

Each best practice delivers standalone value. Together, they transform your entire access governance program.

Before (manual process with basic tools):

- 149 person-days per review cycle

- 7 days to complete reviews

- 18 days average remediation time

- 600 violations per quarter (mostly recurring)

- 75% application coverage

- $50K platform cost, unknown ROI

After (automated process with all 7 best practices):

- 55 person-days per review cycle (63% reduction)

- 4 days to complete reviews (43% faster)

- 4 minutes average remediation time (99.9% faster)

- 285 violations per quarter (52% reduction, trending down)

- 98% application coverage

- $50K platform cost, $375K+ annual value (7.5x the platform cost; 4.5x once IT labor is included)

From compliance requirement → Strategic security capability

From resource intensive → Efficiency multiplier

From quarterly cleanup → Continuous governance

From detection → Prevention

From Good to Great

Most organizations do access reviews. Few do them well.

The difference isn't effort—it's approach. Companies stuck with manual processes work harder but get limited results. Companies that implement these seven best practices work more efficiently and achieve significantly better outcomes.

Compliant ≠ Optimal

Detected ≠ Prevented

Reviewed ≠ Remediated

Completed ≠ Effective

The best access reviews are those that reduce risk, save money, reclaim resources, and demonstrate measurable value—not just satisfy auditors.

Get Started

See these best practices in action → Book a Demo

Download: Access Review Optimization Checklist → Get the Checklist
